Objective Functions: A Simple Example with Matrix Factorisation

University of Sheffield

Objective Function

- Last week we motivated the importance of probability.

- This week we motivate the idea of the ‘objective function’.

Introduction to Classification

Classification

- Wake word classification (Global Pulse Project).

Breakthrough in 2012 with ImageNet result of Alex Krizhevsky, Ilya Sutskever and Geoff Hinton

- We are given a data set containing ‘inputs’, \(\inputMatrix\) and ‘targets’, \(\dataVector\).

- Each data point consists of an input vector \(\inputVector_i\) and a class label, \(\dataScalar_i\).

- For binary classification assume \(\dataScalar_i\) should be either \(1\) (yes) or \(-1\) (no).

Input vector can be thought of as features.

Discrete Probability

- Algorithms based on prediction function and objective function.

- For regression the codomain of the functions, \(f(\inputMatrix)\) was the real numbers or sometimes real vectors.

- In classification we are given an input vector, \(\inputVector\), and an associated label, \(\dataScalar\) which either takes the value \(-1\) or \(1\).

Classification

- Inputs, \(\inputVector\), mapped to a label, \(\dataScalar\), through a function \(\mappingFunction(\cdot)\) dependent on parameters, \(\weightVector\), \[ \dataScalar = \mappingFunction(\inputVector; \weightVector). \]

- \(\mappingFunction(\cdot)\) is known as the prediction function.

Classification Examples

- Classifiying hand written digits from binary images (automatic zip code reading)

- Detecting faces in images (e.g. digital cameras).

- Who a detected face belongs to (e.g. Facebook, DeepFace)

- Classifying type of cancer given gene expression data.

- Categorization of document types (different types of news article on the internet)

Hyperplane

predict the class label, \(\dataScalar_i\), given the features associated with that data point, \(\inputVector_i\), using the prediction function:

\[\mappingFunction(\inputScalar_i) = \text{sign}\left(\mappingVector^\top \inputVector_i + b\right)\]

- Decision boundary for the classification is given by a hyperplane.

- Vector \(\mappingVector\) is the [normal vector](http://en.wikipedia.org/wiki/Normal_(geometry) to the hyperplane.

Hyperplane is described by the formula \(\mappingVector^\top \inputVector = -b\)

Toy Data

- Need to draw a decision boundary that separates red crosses from green circles.

Mathematical Drawing of Decision Boundary

Refresher: draw a hyper plane at decision boundary. - Decision boundary: plane where a point moves from being classified as -1 to +1. - We have

\[\text{sign}(\inputVector^\top \mappingVector) = \text{sign}(w_0 + w_1\inputScalar_{i,1} + w_2 \inputScalar_{i, 2})\]

\(\inputScalar_{i, 1}\) is first feature \(\inputScalar_{i, 2}\) is second feature assume \(\inputScalar_{0,i}=1\).

Set \(w_0 = b\) we have

\[\text{sign}\left(w_1 \inputScalar_{i, 1} + w_2 \inputScalar_{i, 2} + b\right)\]

Equation of Plane

\[\text{sign}\left(w_1 \inputScalar_{i, 1} + w_2 \inputScalar_{i, 2} + b\right)\]

Equation of plane is

\[w_1 \inputScalar_{i, 1} + w_2 \inputScalar_{i, 2} + b = 0\]

or

\[w_1 \inputScalar_{i, 1} + w_2 \inputScalar_{i, 2} = -b\]

Next we will initialise the model and draw a decision boundary.

Perceptron Algorithm: Initialisation Maths

For a randomly chosen data point, \(i\), set \[\mappingVector = \dataScalar_i \inputVector_i\]

If predicted label of the \(i\)th point is \[\text{sign}(\mappingVector^\top\inputVector_i)\]

Setting \(\mappingVector\) to \(\dataScalar_i\inputVector_i\) implies \[\text{sign}(\mappingVector^\top\inputVector_i) = \text{sign}(\dataScalar_i\inputVector_i^\top \inputVector_i) = \dataScalar_i\]

Computing Decision Boundary

Drawing Decision Boundary

Switching Formulae

Code for Perceptron

Objective Functions and Regression

- Classification: map feature to class label.

Regression: map feature to real value our prediction function is

\[\mappingFunction(\inputScalar_i) = m\inputScalar_i + c\]

Need an algorithm to fit it.

Least squares: minimize an error.

\[\errorFunction(m, c) = \sum_{i=1}^\numData (\dataScalar_i * \mappingFunction(\inputScalar_i))^2\]

Regression

- Create an artifical data set.

We now need to decide on a true value for \(m\) and a true value for \(c\) to use for generating the data.

We can use these values to create our artificial data. The formula \[\dataScalar_i = m\inputScalar_i + c\] is translated to code as follows:

Plot of Data

We can now plot the artifical data we’ve created.

These points lie exactly on a straight line, that’s not very realistic, let’s corrupt them with a bit of Gaussian ‘noise’.

Noise Corrupted Plot

Contour Plot of Error Function

Visualise the error function surface, create vectors of values.

create a grid of values to evaluate the error function in 2D.

compute the error function at each combination of \(c\) and \(m\).

Contour Plot of Error

- We can now make a contour plot.

Steepest Descent

- Minimize the sum of squares error function.

- One way of doing that is gradient descent.

- Initialize with a guess for \(m\) and \(c\)

- update that guess by subtracting a portion of the gradient from the guess.

- Like walking down a hill in the steepest direction of the hill to get to the bottom.

Algorithm

- We start with a guess for \(m\) and \(c\).

Offset Gradient

Now we need to compute the gradient of the error function, firstly with respect to \(c\),

\[\frac{\text{d}\errorFunction(m, c)}{\text{d} c} = -2\sum_{i=1}^\numData (\dataScalar_i - m\inputScalar_i - c)\]

This is computed in python as follows

Deriving the Gradient

To see how the gradient was derived, first note that the \(c\) appears in every term in the sum. So we are just differentiating \((\dataScalar_i - m\inputScalar_i - c)^2\) for each term in the sum. The gradient of this term with respect to \(c\) is simply the gradient of the outer quadratic, multiplied by the gradient with respect to \(c\) of the part inside the quadratic. The gradient of a quadratic is two times the argument of the quadratic, and the gradient of the inside linear term is just minus one. This is true for all terms in the sum, so we are left with the sum in the gradient.

Slope Gradient

The gradient with respect tom \(m\) is similar, but now the gradient of the quadratic’s argument is \(-\inputScalar_i\) so the gradient with respect to \(m\) is

\[\frac{\text{d}\errorFunction(m, c)}{\text{d} m} = -2\sum_{i=1}^\numData \inputScalar_i(\dataScalar_i - m\inputScalar_i - c)\]

which can be implemented in python (numpy) as

Update Equations

- Now we have gradients with respect to \(m\) and \(c\).

- Can update our inital guesses for \(m\) and \(c\) using the gradient.

- We don’t want to just subtract the gradient from \(m\) and \(c\),

- We need to take a small step in the gradient direction.

- Otherwise we might overshoot the minimum.

- We want to follow the gradient to get to the minimum, the gradient changes all the time.

Move in Direction of Gradient

Update Equations

The step size has already been introduced, it’s again known as the learning rate and is denoted by \(\learnRate\). \[ c_\text{new}\leftarrow c_{\text{old}} - \learnRate \frac{\text{d}\errorFunction(m, c)}{\text{d}c} \]

- gives us an update for our estimate of \(c\) (which in the code we’ve been calling

c_starto represent a common way of writing a parameter estimate, \(c^*\)) and \[ m_\text{new} \leftarrow m_{\text{old}} - \learnRate \frac{\text{d}\errorFunction(m, c)}{\text{d}m} \] Giving us an update for \(m\).

Update Code

- These updates can be coded as

Iterating Updates

- Fit model by descending gradient.

Gradient Descent Algorithm

Stochastic Gradient Descent

- If \(\numData\) is small, gradient descent is fine.

- But sometimes (e.g. on the internet \(\numData\) could be a billion.

- Stochastic gradient descent is more similar to perceptron.

- Look at gradient of one data point at a time rather than summing across all data points)

- This gives a stochastic estimate of gradient.

Stochastic Gradient Descent

The real gradient with respect to \(m\) is given by

\[\frac{\text{d}\errorFunction(m, c)}{\text{d} m} = -2\sum_{i=1}^\numData \inputScalar_i(\dataScalar_i - m\inputScalar_i - c)\]

but it has \(\numData\) terms in the sum. Substituting in the gradient we can see that the full update is of the form

\[m_\text{new} \leftarrow m_\text{old} + 2\learnRate \left[\inputScalar_1 (\dataScalar_1 - m_\text{old}\inputScalar_1 - c_\text{old}) + (\inputScalar_2 (\dataScalar_2 - m_\text{old}\inputScalar_2 - c_\text{old}) + \dots + (\inputScalar_n (\dataScalar_n - m_\text{old}\inputScalar_n - c_\text{old})\right]\]

This could be split up into lots of individual updates \[m_1 \leftarrow m_\text{old} + 2\learnRate \left[\inputScalar_1 (\dataScalar_1 - m_\text{old}\inputScalar_1 - c_\text{old})\right]\] \[m_2 \leftarrow m_1 + 2\learnRate \left[\inputScalar_2 (\dataScalar_2 - m_\text{old}\inputScalar_2 - c_\text{old})\right]\] \[m_3 \leftarrow m_2 + 2\learnRate \left[\dots\right]\] \[m_n \leftarrow m_{n-1} + 2\learnRate \left[\inputScalar_n (\dataScalar_n - m_\text{old}\inputScalar_n - c_\text{old})\right]\]

which would lead to the same final update.

Updating \(c\) and \(m\)

- In the sum we don’t \(m\) and \(c\) we use for computing the gradient term at each update.

- In stochastic gradient descent we do change them.

- This means it’s not quite the same as steepest desceint.

- But we can present each data point in a random order, like we did for the perceptron.

- This makes the algorithm suitable for large scale web use (recently this domain is know as ‘Big Data’) and algorithms like this are widely used by Google, Microsoft, Amazon, Twitter and Facebook.

Stochastic Gradient Descent

- Or more accurate, since the data is normally presented in a random order we just can write \[ m_\text{new} = m_\text{old} + 2\learnRate\left[\inputScalar_i (\dataScalar_i - m_\text{old}\inputScalar_i - c_\text{old})\right] \]

SGD for Linear Regression

Putting it all together in an algorithm, we can do stochastic gradient descent for our regression data.

Reflection on Linear Regression and Supervised Learning

Think about:

What effect does the learning rate have in the optimization? What’s the effect of making it too small, what’s the effect of making it too big? Do you get the same result for both stochastic and steepest gradient descent?

The stochastic gradient descent doesn’t help very much for such a small data set. It’s real advantage comes when there are many, you’ll see this in the lab.

Lab Class

- You will take the ideas you have learnt.

- You will apply them in the domain of matrix factorisation.

- Matrix factorization presents a different error function.

for loss functions.

Further Reading

- Section 1.1.3 of Rogers and Girolami (2011)

Movie Body Count Example

Recommender Systems

Inner Products for Representing Similarity

The Library on an Infinite Plane

\[ \mathbf{v}_j = \begin{bmatrix} v_{j,1} \\ v_{j,2}\end{bmatrix}, \]

\[ \mathbf{u}_i = \begin{bmatrix} u_{i,1} \\ u_{i,2}\end{bmatrix}. \]

Obtaining the Data

import pods

import pandas as pd

import numpy as np

user_data = pd.DataFrame(index=movies.index, columns=['title', 'year', 'rating', 'prediction'])

user_data['title']=movies.Film

user_data['year']=movies.Year

accumulator=pods.lab.distributor(spreadsheet_title='COM4509/6509 Movie Ratings:', user_sep='\t')

# function to apply to data before giving it to user

# Select 50 movies at random and order them according to year.

max_movies = 50

function = lambda x: x.loc[np.random.permutation(x.index)[:max_movies]].sort(columns='year')

accumulator.write(data_frame=user_data, comment='Film Ratings',

function=function)

accumulator.write_comment('Rate Movie Here (score 1-5)', row=1, column=4)

accumulator.share(share_type='writer', send_notifications=True)Once you have placed your ratings we can download the data from your spreadsheets to a central file where the ratings of the whole class can be stored. We will build an algorithm on these ratings and use them to make predictions for the rest of the class. Firstly, here’s the code for reading the ratings from each of the spreadsheets.

import numpy as np

import pandas as pd

import os

accumulator = pods.lab.distributor(user_sep='\t')

data = accumulator.read(usecols=['rating'], dtype={'index':int, 'rating':np.float64}, header=2)

for user in data:

if data[user].rating.count()>0: # only add user if they rated something

# add a new field to movies with that user's ratings.

movies[user] = data[user]['rating']

# Store the csv on disk where it will be shared through dropbox.

movies.to_csv(os.path.join(pods.lab.class_dir,'movies.csv'), index_label='index')Now we will convert our data structure into a form that is appropriate for processing. We will convert the movies object into a data base which contains the movie, the user and the score using the following command.

Processing the Data

We will now prepare the data set for processing. To do this we are going to conver the data into a new format using the melt command.

What is a pivot table? What does the pandas command

pd.melt do?

Measuring Similarity

\[ \mathbf{a}^\top\mathbf{b} = \sum_{i} a_i b_i \]

\[ \mathbf{a}^\top\mathbf{b} = |\mathbf{a}||\mathbf{b}| \cos(\theta) \]

Objective Function

The error function (or objective function, or cost function) we will choose is known as ‘sum of squares’, we will aim to minimize the sum of squared squared error between the inner product of \(\mathbf{u}_i\) and \(\mathbf{v}_i\) and the observed score for the user/item pairing, given by \(y_{i, j}\).

The total objective function can be written as \[ E(\mathbf{U}, \mathbf{V}) = \sum_{i,j} s_{i,j} (y_{i,j} - \mathbf{u}_i^\top \mathbf{v}_j)^2 \] where \(s_{i,j}\) is an indicator variable that is 1 if user \(i\) has rated item \(j\) and is zero otherwise. Here \(\mathbf{U}\) is the matrix made up of all the vectors \(\mathbf{u}\), \[ \mathbf{U} = \begin{bmatrix} \mathbf{u}_1 \dots \mathbf{u}_n\end{bmatrix}^\top \] where we note that \(i\)th row of \(\mathbf{U}\) contains the vector associated with the \(i\)th user and \(n\) is the total number of users. This form of matrix is known as a design matrix. Similarly, we define the matrix \[ \mathbf{V} = \begin{bmatrix} \mathbf{v}_1 \dots \mathbf{v}_m\end{bmatrix}^\top \] where again the \(j\)th row of \(\mathbf{V}\) contains the vector associated with the \(j\)th item and \(m\) is the total number of items in the data set.

Objective Optimization

The idea is to mimimize this objective. A standard, simple, technique for minimizing an objective is gradient descent or steepest descent. In gradient descent we simply choose to update each parameter in the model by subtracting a multiple of the objective function’s gradient with respect to the parameters. So for a parameter \(u_{i,j}\) from the matrix \(\mathbf{U}\) we would have an update as follows: \[ u_{k,\ell} \leftarrow u_{k,\ell} - \eta \frac{\text{d} E(\mathbf{U}, \mathbf{V})}{\text{d}u_{k,\ell}} \] where \(\eta\) (which is pronounced eta in English) is a Greek letter representing the learning rate.

We can compute the gradient of the objective function with respect to \(u_{k,\ell}\) as \[ \frac{\text{d}E(\mathbf{U}, \mathbf{V})}{\text{d}u_{k,\ell}} = -2 \sum_j s_{k,j}v_{j,\ell}(y_{k, j} - \mathbf{u}_k^\top\mathbf{v}_{j}). \] Similarly each parameter \(v_{i,j}\) needs to be updated according to its gradient.

Steepest Descent Algorithm

In the steepest descent algorithm we aim to minimize the objective function by subtacting the gradient of the objective function from the parameters.

Initialisation

To start with though, we need initial values for the matrix \(\mathbf{U}\) and the matrix \(\mathbf{V}\). Let’s create them as pandas data frames and initialise them randomly with small values.

We also will subtract the mean from the rating before we try and predict them predictions. Have a think about why this might be a good idea (hint, what will the gradients be if we don’t subtract the mean).

Now that we have the initial values set, we can start the optimization. First we define a function for the gradient of the objective and the objective function itself.

Now we can write our simple optimisation route. This allows us to observe the objective function as the optimization proceeds.

Stochastic Gradient Descent or Robbins Monroe Algorithm

Stochastic gradient descent involves updating separating each gradient update according to each separate observation, rather than summing over them all. It is an approximate optimization method, but it has proven convergence under certain conditions and can be much faster in practice. It is used widely by internet companies for doing machine learning in practice. For example, Facebook’s ad ranking algorithm uses stochastic gradient descent.

Making Predictions

Predictions can be made from the model of the appropriate rating for a given user, \(i\), for a given film, \(j\), by simply taking the inner product between their vectors \(\mathbf{u}_i\) and \(\mathbf{v}_j\).

Is Our Map Enough? Are Our Data Enough?

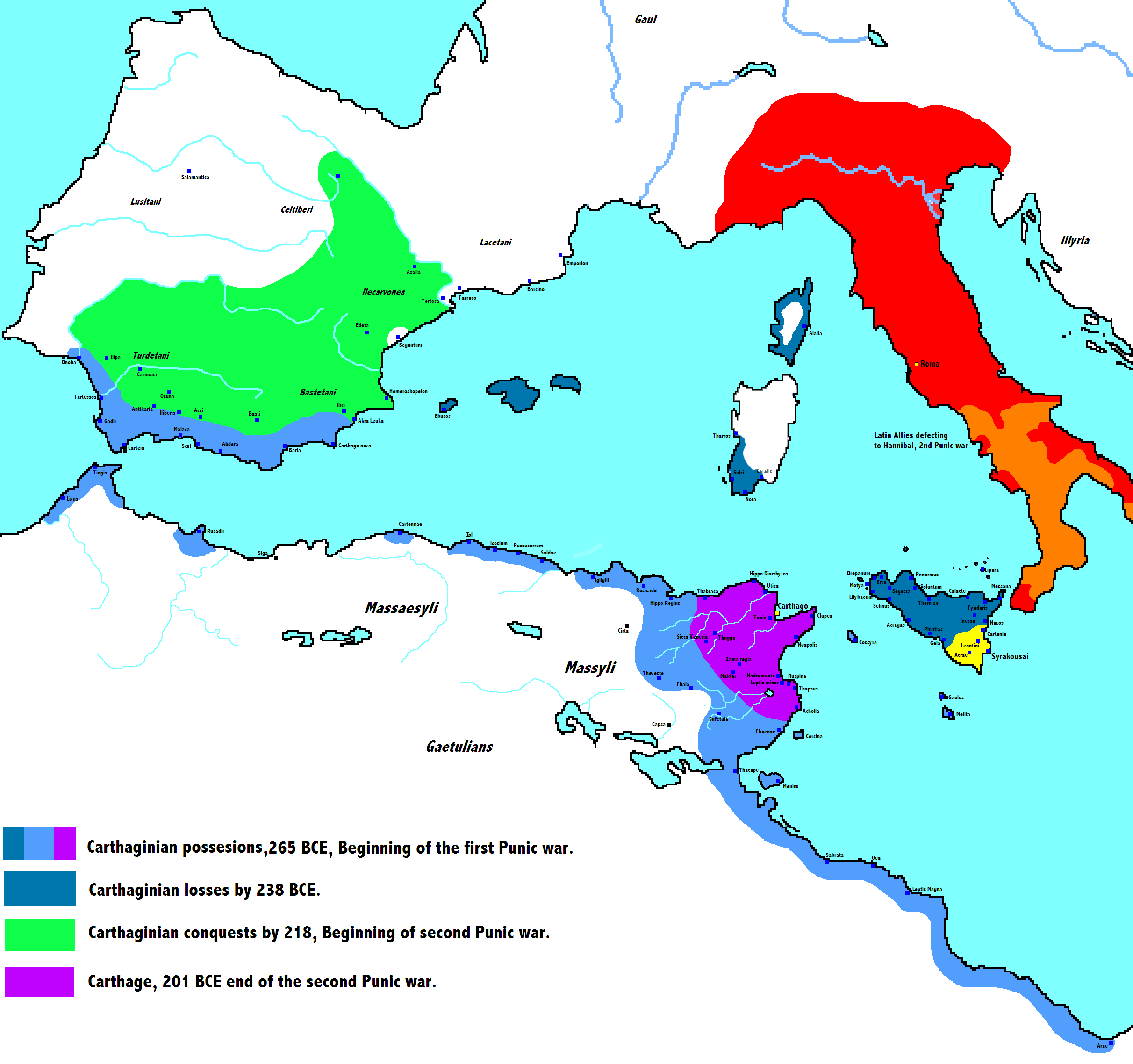

Is two dimensions really enough to capture the complexity of humans and their artforms? Perhaps we need even more dimensions to capture that complexity. Extending our books analogy further, consider how we should place books that have a historical timeframe as well as some geographical location. Do we really want books from the 2nd World War to sit alongside books from the Roman Empire? Books on the American invasion of Sicily in 1943 are perhaps less related to books about Carthage than those that study the Jewish Revolt from 66-70 (in the Roman Province of Judaea). So books that relate to subjects which are closer in time should be stored together. However, a student of rebellion against empire may also be interested in the relationship between the Jewish Revolt of 66-70 and the Indian Rebellion of 1857, nearly 1800 years later. Whilst the technologies are different, the psychology of the people is shared: a rebellious nation angainst their imperial masters, triggered by misrule with a religious and cultural background. To capture such complexities we would need further dimensions in our latent representation. But are further dimensions justified by the amount of data we have? Can we really understand the facets of a film that only has at most three or four ratings?

Going Further

If you want to take this model further then you’ll need more data. One possible source of data is the movielens data set. They have data sets containing up to ten million movie ratings. The few ratings we were able to collect in the class are not enough to capture the rich structure underlying these films. Imagine if we assume that the ratings are uniformly distributed between 1 and 5. If you know something about information theory then you could use that to work out the maximum number of bits of information we could gain per rating.

Now we’ll download the movielens 100k data and see if we can extract information about these movies.

Thanks!

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com

References

Rogers, S., Girolami, M., 2011. A first course in machine learning. CRC Press.