Personalized Health: Challenges in Data Science

NIPS Workshop on Machine Learning for Health

2016-12-09

Barcelona, Spain

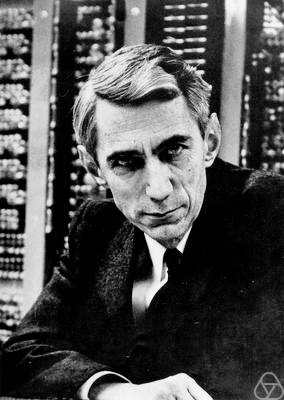

Neil D. Lawrence

Amazon and University of Sheffield

@lawrennd inverseprobability.com

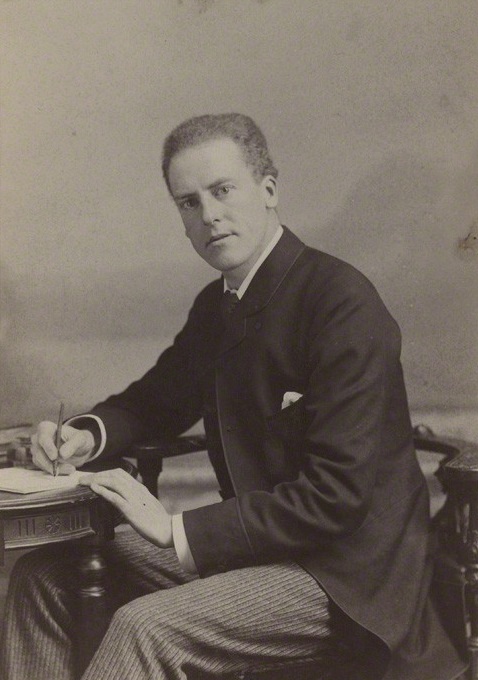

There are three types of lies: lies, damned lies and statistics

Benjamin Disraeli 1804-1881

Mathematical Statistics

- ‘Founded’ by Karl Pearson (1857-1936)

There are three types of lies: lies, damned lies and ‘big data’

Neil Lawrence 1972-?

‘Mathematical Data Science’

- ‘Founded’ by ? (?-?)

Background: Big Data

The pervasiveness of data brings forward particular challenges.

Those challenges are most sharply in focus for personalized health.

Particular opportunities, in challenging areas such as mental health.

Evolved Relationship

“Embodiment Factors”

| | computer | human |
|---|---|---|
| compute | ~10 gigaflops | ~1000 teraflops? |
| communicate | ~1 gigabit/s | ~100 bit/s |
| embodiment (compute/communicate) | 10 | ~\(10^{13}\) |
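The embodiment row is just the ratio of the two rows above it. A minimal sketch of that arithmetic, assuming the first column of figures refers to a computer and the second to a human (the variable and function names are illustrative, not from the talk):

```python
# Rough orders of magnitude as quoted in the table; the human figures are loose estimates.
computer_compute = 10e9        # ~10 gigaflops
computer_communicate = 1e9     # ~1 gigabit/s
human_compute = 1000e12        # ~1000 teraflops
human_communicate = 100.0      # ~100 bit/s


def embodiment(compute, communicate):
    """Ratio of compute rate to communication rate."""
    return compute / communicate


computer_factor = embodiment(computer_compute, computer_communicate)
human_factor = embodiment(human_compute, human_communicate)
print(f"computer: {computer_factor:.0e}, human: {human_factor:.0e}")
```

The two factors differ by about twelve orders of magnitude, which is the gap the slide is pointing at.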

Effects

This phenomenon has already revolutionised biology.

Large scale data acquisition and distribution.

Transcriptomics, genomics, epigenomics, ‘rich phenomics’.

Great promise for personalized health.

Societal Effects

Automated decision making within the computer based only on the data.

We need to better understand our own subjective biases, to ensure that the human-computer interface draws the correct conclusions from the data.

Particularly important where treatments are being prescribed.

But what counts as a treatment in the modern era? Interventions could be far more subtle.

Societal Effects

Shift in dynamic from the direct pathway between human and data to an indirect pathway between human and data via the computer

This change of dynamics gives us the modern and emerging domain of data science

Challenges

Paradoxes of the Data Society

Quantifying the Value of Data

Privacy, loss of control, marginalization

Breadth vs Depth Paradox

Able to quantify to a greater and greater degree the actions of individuals

But less able to characterize society

As we measure more, we understand less

What?

Perhaps the greater preponderance of data is making society itself more complex

Therefore traditional approaches to measurement are failing

Curate’s egg of a society: it is only ‘measured in parts’

Wood or Tree

- Can either see a wood or a tree.

Examples

Election polls (UK 2015 elections, EU referendum, US 2016 elections)

Clinical trials vs personalized medicine: obtaining statistical power where interventions are subtle, e.g. via social media
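To give a feel for why subtle interventions are hard to detect, here is a rough sketch using the textbook normal-approximation sample size for comparing two group means. The formula, the function name `per_arm_sample_size` and the default significance and power values are assumptions chosen for illustration, not something taken from the talk.

```python
from statistics import NormalDist


def per_arm_sample_size(effect, sigma=1.0, alpha=0.05, power=0.8):
    """Approximate subjects per arm for a two-sided, two-sample z-test.

    effect: difference in means we want to detect (same units as sigma).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return 2 * ((z_alpha + z_beta) * sigma / effect) ** 2


for effect in (0.5, 0.1, 0.01):
    print(effect, round(per_arm_sample_size(effect)))
```

Under these assumptions the required sample size scales as one over the squared effect size, so an intervention ten times more subtle needs about a hundred times as many subjects.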

The Maths

\[ \mathbf{Y} = \begin{bmatrix} y_{1, 1} & y_{1, 2} &\dots & y_{1,p}\\ y_{2, 1} & y_{2, 2} &\dots & y_{2,p}\\ \vdots & \vdots &\dots & \vdots\\ y_{n, 1} & y_{n, 2} &\dots & y_{n,p} \end{bmatrix} \in \Re^{n\times p} \]

The Maths

\[ \mathbf{Y} = \begin{bmatrix} \mathbf{y}^\top_{1, :} \\ \mathbf{y}^\top_{2, :} \\ \vdots \\ \mathbf{y}^\top_{n, :} \end{bmatrix} \in \Re^{n\times p} \]

The Maths

\[ \mathbf{Y} = \begin{bmatrix} \mathbf{y}_{:, 1} & \mathbf{y}_{:, 2} & \dots & \mathbf{y}_{:, p} \end{bmatrix} \in \Re^{n\times p} \]

The Maths

\[p(\mathbf{Y}|\boldsymbol{\theta}) = \prod_{i=1}^n p(\mathbf{y}_{i, :}|\boldsymbol{\theta})\]

\[\log p(\mathbf{Y}|\boldsymbol{\theta}) = \sum_{i=1}^n \log p(\mathbf{y}_{i, :}|\boldsymbol{\theta})\]
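A small numerical check of the factorization over rows may help. This is a sketch on synthetic data, assuming each row is drawn from independent Gaussians with a shared mean and standard deviation; that model and the helper `row_log_density` are illustrative choices, not from the talk.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 20, 3
mean, std = 1.0, 2.0                       # plays the role of theta
Y = rng.normal(loc=mean, scale=std, size=(n, p))  # rows are data points


def row_log_density(y_row, mean, std):
    """Log density of one row under independent Gaussians."""
    return np.sum(
        -0.5 * np.log(2 * np.pi * std**2) - 0.5 * ((y_row - mean) / std) ** 2
    )


log_terms = np.array([row_log_density(Y[i, :], mean, std) for i in range(n)])
log_likelihood = log_terms.sum()                 # sum of log densities
product_likelihood = np.prod(np.exp(log_terms))  # product of densities
print(log_likelihood, np.log(product_likelihood))
```

The two printed numbers agree up to floating-point error. For large \(n\) the product underflows, which is one practical reason to work with the sum of logs.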

Consistency

Typically \(\boldsymbol{\theta} \in \Re^{\mathcal{O}(p)}\)

Consistency reliant on large sample approximation of KL divergence

\[ \text{KL}(P(\mathbf{Y})|| p(\mathbf{Y}|\boldsymbol{\theta}))\]

Minimization is equivalent to maximization of likelihood.

A foundation stone of classical statistics.
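One way to spell out that equivalence, as a sketch assuming the rows \(\mathbf{y}_{i, :}\) are independent samples from the true density \(P(\mathbf{y})\) (the angle-bracket notation for expectations under \(P\) is an assumption, not from the slides):

\[ \text{KL}(P(\mathbf{y})|| p(\mathbf{y}|\boldsymbol{\theta})) = \left\langle \log P(\mathbf{y}) \right\rangle_{P(\mathbf{y})} - \left\langle \log p(\mathbf{y}|\boldsymbol{\theta}) \right\rangle_{P(\mathbf{y})} \]

The first term does not depend on \(\boldsymbol{\theta}\). The second is approximated by a sample average, which is where the large sample assumption enters:

\[ \left\langle \log p(\mathbf{y}|\boldsymbol{\theta}) \right\rangle_{P(\mathbf{y})} \approx \frac{1}{n} \sum_{i=1}^n \log p(\mathbf{y}_{i, :}|\boldsymbol{\theta}) = \frac{1}{n} \log p(\mathbf{Y}|\boldsymbol{\theta}) \]

So minimizing the divergence with respect to \(\boldsymbol{\theta}\) corresponds, for large \(n\), to maximizing the log likelihood.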

Large \(p\)

For large \(p\) the parameters are badly determined.

Large \(p\) small \(n\) problem.

Easily dealt with through definition: assume independence across features (columns) rather than across data points (rows).

The Maths

\[p(\mathbf{Y}|\boldsymbol{\theta}) = \prod_{j=1}^p p(\mathbf{y}_{:, j}|\boldsymbol{\theta})\]

\[\log p(\mathbf{Y}|\boldsymbol{\theta}) = \sum_{j=1}^p \log p(\mathbf{y}_{:, j}|\boldsymbol{\theta})\]
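A rough illustration of why large \(p\) with small \(n\) leaves parameters badly determined, and why swapping the roles of rows and columns helps. This sketch uses a sample covariance on synthetic Gaussian data, which is an illustrative choice rather than the model used in the talk.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 5, 20                     # few data points, many features
Y = rng.normal(size=(n, p))      # synthetic data, rows are data points

# Covariance across the p features, estimated from only n rows.
S = np.cov(Y, rowvar=False)      # shape (p, p)
rank = np.linalg.matrix_rank(S)

# Treating columns as the independent samples instead gives an (n, n)
# covariance estimated from p columns.
S_T = np.cov(Y, rowvar=True)     # shape (n, n)
rank_T = np.linalg.matrix_rank(S_T)

print(S.shape, rank, S_T.shape, rank_T)
```

The \(p \times p\) estimate has rank at most \(n - 1\), so most of its directions are not constrained by the data, while the \(n \times n\) estimate is full rank.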

Breadth vs Depth

Modern measurement deals with depth (many subjects) … or breadth (lots of detail about each subject).

- But what about \(p\approx n\)?

- Stratification of populations: batch effects etc.

Multi-task learning (Natasha Jaques)

Does \(p\) Even Exist?

Massively missing data.

Classical bias towards tables.

Streaming data.

\[ \mathbf{Y} = \begin{bmatrix} y_{1, 1} & y_{1, 2} &\dots & y_{1,p}\\ y_{2, 1} & y_{2, 2} &\dots & y_{2,p}\\ \vdots & \vdots &\dots & \vdots\\ y_{n, 1} & y_{n, 2} &\dots & y_{n,p} \end{bmatrix} \in \Re^{n\times p} \]

General index on \(y\)

\[y_\mathbf{x}\]

where \(\mathbf{x}\) might include time, spatial location …

Streaming data. Joint model of past, \(\mathbf{y}\), and future, \(\mathbf{y}^*\)

\[p(\mathbf{y}, \mathbf{y}^*)\]

Prediction through:

\[p(\mathbf{y}^*|\mathbf{y})\]

Kolmogorov Consistency — Exchangeability

- From the sum rule of probability we have

\begin{align*} p(\mathbf{y}|n^*) = \int p(\mathbf{y}, \mathbf{y}^*) \text{d}\mathbf{y}^* \end{align*}

\(n^*\) is length of \(\mathbf{y}^*\).

Consistent if \(p(\mathbf{y}|n^*) = p(\mathbf{y})\)

- Prediction then given by product rule \begin{align*} p(\mathbf{y}^*|\mathbf{y}) = \frac{p(\mathbf{y}, \mathbf{y}^*)}{p(\mathbf{y})} \end{align*}
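A joint Gaussian gives a concrete case where both steps can be checked numerically. The sketch below assumes a zero-mean Gaussian over \((\mathbf{y}, \mathbf{y}^*)\) with an exponentiated quadratic covariance plus noise; the covariance function `rbf`, the inputs and the observations are all made up for illustration.

```python
import numpy as np


def rbf(a, b, lengthscale=1.0):
    """Exponentiated quadratic covariance between 1-D input arrays."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / lengthscale**2)


x = np.array([0.0, 1.0, 2.0])        # inputs indexing the observed y
x_star = np.array([0.5, 1.5, 3.0])   # inputs indexing the future y*
y = np.array([0.1, 0.9, 0.2])        # made-up observations
noise = 0.1                          # assumed noise variance

# Joint covariance over (y, y*).
x_all = np.concatenate([x, x_star])
K_joint = rbf(x_all, x_all) + noise * np.eye(len(x_all))
m = len(x)
K_yy, K_sy, K_ss = K_joint[:m, :m], K_joint[m:, :m], K_joint[m:, m:]

# Sum rule: marginalizing a Gaussian keeps the block for y, so p(y)
# is the same however many future points are appended.
K_alone = rbf(x, x) + noise * np.eye(m)

# Product rule: the conditional p(y*|y) is Gaussian with this mean and covariance.
mean_star = K_sy @ np.linalg.solve(K_yy, y)
cov_star = K_ss - K_sy @ np.linalg.solve(K_yy, K_sy.T)
print(np.allclose(K_yy, K_alone), mean_star)
```

Here consistency holds by construction, because each covariance entry depends only on the pair of inputs it relates and not on \(n^*\).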

Parametric Models

- Kolmogorov consistency trivial in parametric model. \begin{align*} p(\mathbf{y}, \mathbf{y}^*) = \int \prod_{i=1}^n p(y_{i} | \boldsymbol{\theta})\prod_{i=1}^{n^*}p(y^*_i|\boldsymbol{\theta}) p(\boldsymbol{\theta}) \text{d}\boldsymbol{\theta} \end{align*}

- Marginalizing \begin{align*} p(\mathbf{y}) = \int \prod_{i=1}^n p(y_{i} | \boldsymbol{\theta})\prod_{i=1}^{n^*} \int p(y^*_i|\boldsymbol{\theta}) \text{d}y^*_i p(\boldsymbol{\theta}) \text{d}\boldsymbol{\theta} \end{align*}
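The marginalization can be checked in the simplest parametric case. This is a minimal sketch assuming binary observations with a Bernoulli likelihood and a Beta prior on \(\boldsymbol{\theta}\); the model and the helper names are illustrative choices, not from the talk.

```python
from math import exp, lgamma


def log_beta(a, b):
    """Log of the Beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def marginal_likelihood(ys, a=1.0, b=1.0):
    """p(y) for y_i ~ Bernoulli(theta) with theta ~ Beta(a, b), theta integrated out."""
    k, n = sum(ys), len(ys)
    return exp(log_beta(a + k, b + n - k) - log_beta(a, b))


y = [1, 0, 1, 1]
p_y = marginal_likelihood(y)

# Sum rule: marginalize one future binary observation out of the joint.
p_y_summed = sum(marginal_likelihood(y + [y_star]) for y_star in (0, 1))

# Product rule: probability that the next observation is 1.
p_next_is_one = marginal_likelihood(y + [1]) / p_y
print(p_y, p_y_summed, p_next_is_one)
```

Summing the joint over the future observation recovers \(p(\mathbf{y})\), as the integral over \(y^*_i\) in the expression above requires.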

Parametric Bottleneck

- Bayesian methods suggest a prior over \(\boldsymbol{\theta}\) and use the posterior, \(p(\boldsymbol{\theta}|\mathbf{y})\), for making predictions. \begin{align*} p(\mathbf{y}^*|\mathbf{y}) = \int \prod_i p(y_i^* | \boldsymbol{\theta}) p(\boldsymbol{\theta}|\mathbf{y})\text{d}\boldsymbol{\theta} \end{align*}

Design time problem: parametric bottleneck. \[p(\boldsymbol{\theta} | \mathbf{y})\]

Streaming data could turn out to be more complex than we imagine.
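To make the bottleneck concrete, here is a sketch of a streaming update in a toy model, assuming binary observations with a Beta prior on a Bernoulli parameter (the class `BetaBernoulli` is hypothetical, written for illustration). Everything the model retains about the stream is two numbers, fixed at design time.

```python
class BetaBernoulli:
    """Posterior over theta stored as two counts, however long the stream is."""

    def __init__(self, a=1.0, b=1.0):
        self.a, self.b = a, b

    def update(self, y):
        """Absorb one binary observation into the posterior."""
        self.a += y
        self.b += 1 - y

    def predict_one(self):
        """Posterior predictive probability that the next observation is 1."""
        return self.a / (self.a + self.b)


stream = [1, 0, 1, 1, 0, 1, 1, 1]
model = BetaBernoulli()
for y in stream:
    model.update(y)

print(model.a, model.b, model.predict_one())
```

Any structure in the stream that these two counts cannot express, such as drift over time, is lost to future predictions.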

Finite Storage

Despite our large interconnected brains, we only have finite storage.

Similar for digital computers. So we need to assume that we can only store a finite number of things about the data \(\mathbf{y}\).

This pushes us back towards parametric models.

Also need

- More classical statistics!

- Like the ‘paperless office’

- A better characterization of humans (see later)

- Larger studies (100,000 genome)

- Combined with complex models: algorithmic challenges

Quantifying the Value of Data

There’s a sea of data, but most of it is undrinkable

We require data-desalination before it can be consumed!

Data

- 90% of our time is spent on validation and integration (Leo Anthony Celi)

- “The Dirty Work We Don’t Want to Think About” (Eric Xing)

- “Voodoo to get it decompressed” (Francisco Giminez?)

- In health care clinicians collect the data and often control the direction of research through guardianship of data.

Value

- How do we measure value in the data economy?

- How do we encourage data workers: curation and management

- Incentivization for sharing and production.

- Quantifying the value in the contribution of each actor.

Credit Allocation

Direct work on data generates an enormous amount of ‘value’ in the data economy, but this is unaccounted for in the economy

Hard because data is difficult to ‘embody’

Value of shared data: Wellcome Trust 2010 Joint Statement (from the “Foggy Bottom” meeting)

Solutions

Encourage greater interaction between application domains and data scientists

Encourage visualization of data

Adoption of ‘data readiness levels’

Implications for incentivization schemes

Privacy, Loss of Control and Marginalization

Society is becoming harder to monitor

Individual is becoming easier to monitor

Conversation

Modelling

Hate Speech or Political Dissent?

- social media monitoring for ‘hate speech’ can be easily turned to political dissent monitoring

Marketing

- can become more sinister when the target of the marketing is well understood and the (digital) environment of the target is also so well controlled

Free Will

- What does it mean if a computer can predict our individual behavior better than we ourselves can?

Discrimination

Potential for explicit and implicit discrimination on the basis of race, religion, sexuality, health status

All prohibited under European law, but can pass unawares, or be implicit

Marginalization

- Credit scoring, insurance, medical treatment

- What if certain sectors of society are under-represented in our analysis?

- What if Silicon Valley develops everything for us?

Digital Revolution and Inequality?

Amelioration

- Work to ensure individual retains control of their own data

- We accept privacy in our real lives, need to accept it in our digital lives

Control of persona and ability to project

Need better technological solutions: trust and algorithms.

Awareness

- Need to increase awareness of the pitfalls among researchers

- Need to ensure that technological solutions are being delivered not merely for the few (#FirstWorldProblems)

- Address a wider set of challenges that the greater part of the world’s population is facing

Conclusion

- Data science offers a great deal of promise for personalized health

- There are challenges and pitfalls

- It is incumbent on us to avoid them

- Need new ways of thinking!

- Mathematical Data Science

Many solutions rely on education and awareness

Thanks!

- twitter: @lawrennd

- blog: http://inverseprobability.com