The Great AI Fallacy

Webinar for The Cambridge Network

The Promise of AI

Automation forces humans to adapt; we serve.

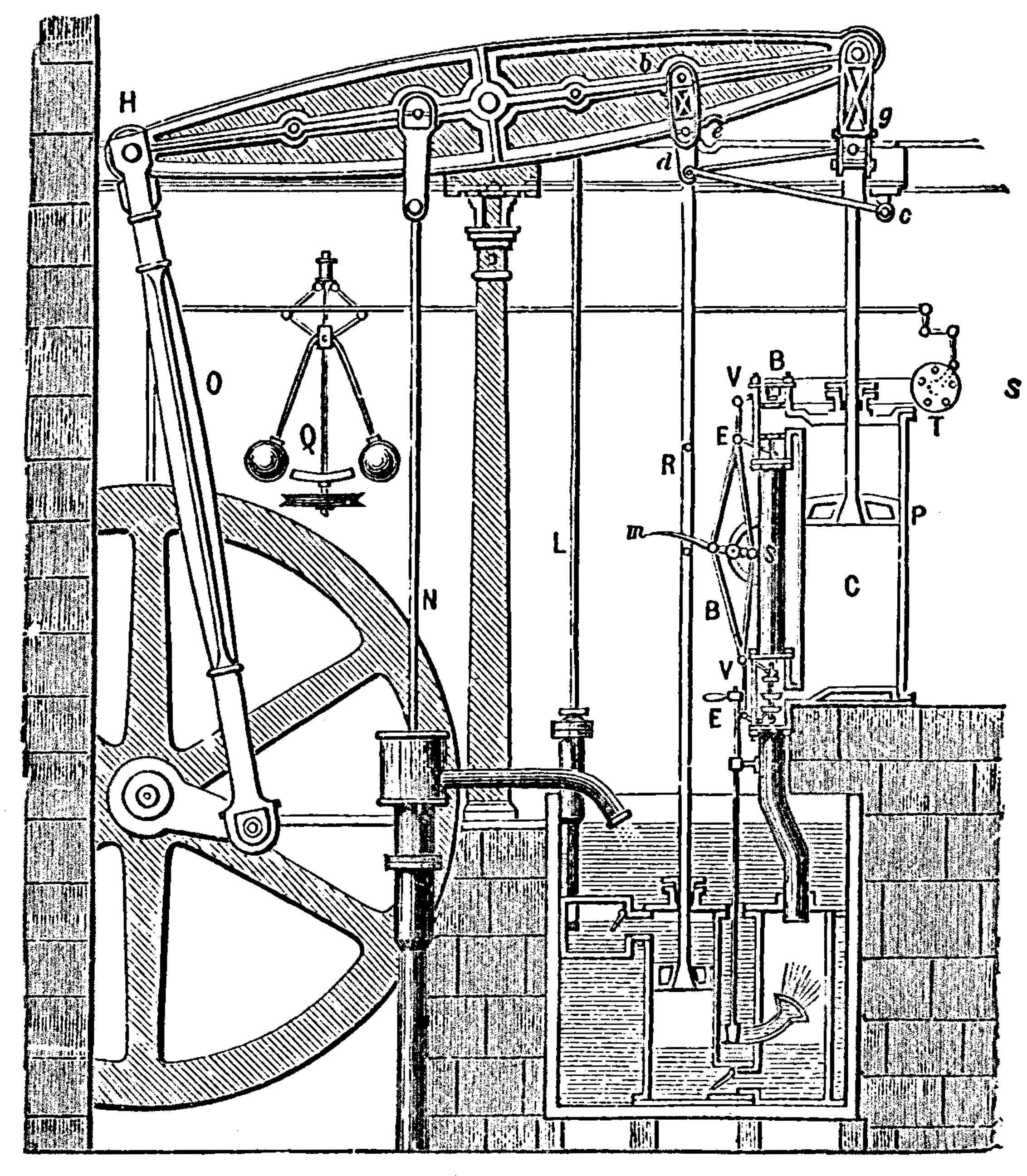

We can only automate by systemizing and controlling the environment.

AI promises to be the first wave of automation that adapts to us rather than us to it.

That Promise …

… will remain unfulfilled with current systems design.

|  |  |  |  |
|---|---|---|---|
| bits/min | billions | 2000 | 6 |
| billion calculations/s | ~100 | a billion | a billion |
| embodiment | 20 minutes | 5 billion years | 15 trillion years |

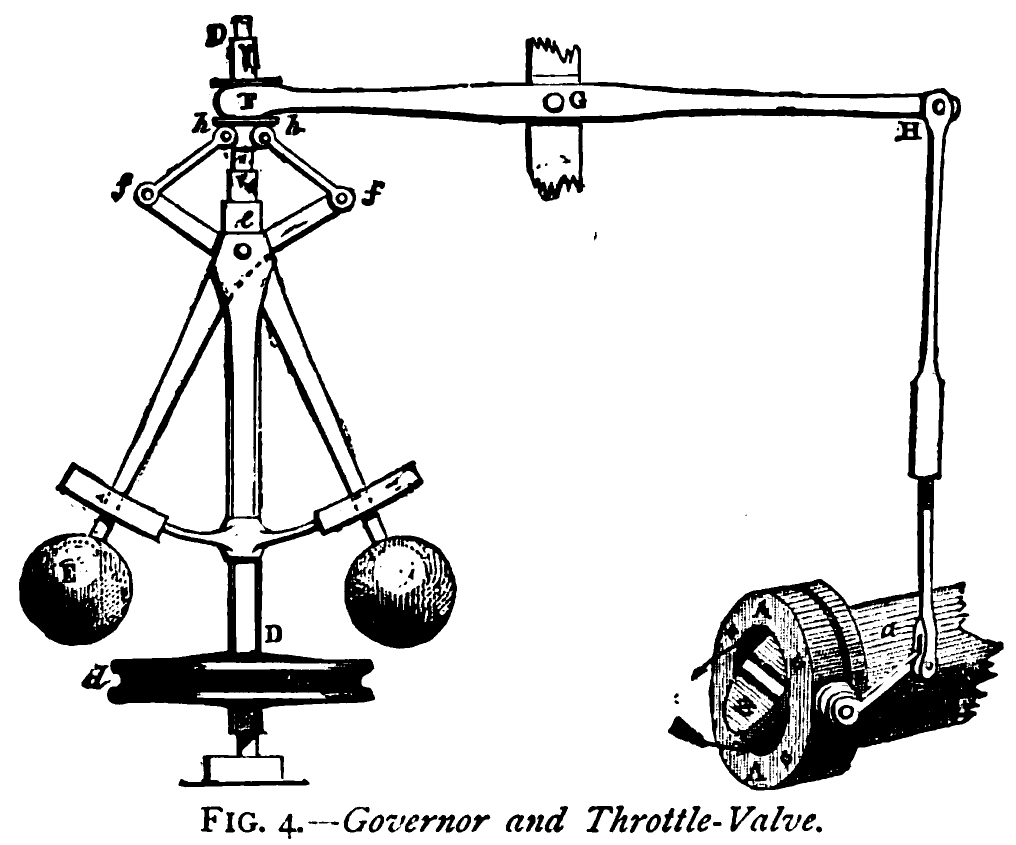

Computer Science Paradigm Shift

- Von Neumann Architecture:

  - Code and data integrated in memory

- Today (Harvard Architecture):

  - Code and data separated for security

- Machine learning:

  - Software is data

- Machine learning is a high-level breach of the code/data separation.
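To make "software is data" concrete, here is a minimal sketch in Python. The names `fit_threshold` and `make_classifier` and both toy datasets are hypothetical, chosen only for illustration. The source code is identical in both cases; the behaviour of the resulting classifier is decided entirely by the data it was fitted on.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[float, bool]  # (feature value, label)


def fit_threshold(examples: Sequence[Example]) -> float:
    """Pick the cut point that misclassifies the fewest training examples."""
    candidates = sorted(x for x, _ in examples)

    def errors(t: float) -> int:
        # Count examples where the rule "x >= t" disagrees with the label.
        return sum((x >= t) != label for x, label in examples)

    return min(candidates, key=errors)


def make_classifier(threshold: float) -> Callable[[float], bool]:
    """The 'program' is fully specified by a number learned from data."""
    return lambda x: x >= threshold


# Same source code, two different (made-up) datasets, two different behaviours.
data_a = [(1.0, False), (2.0, False), (3.0, True), (4.0, True)]
data_b = [(1.0, False), (2.0, False), (3.0, False), (4.0, True)]

classify_a = make_classifier(fit_threshold(data_a))
classify_b = make_classifier(fit_threshold(data_b))

print(classify_a(3.5), classify_b(3.5))  # True False
```

Reviewing the code alone cannot tell you what either classifier does at 3.5; you also need the data, which is the sense in which the separation is breached.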

Intellectual Debt

Technical Debt

Lean Startup Methodology

The Mythical Man-month

Separation of Concerns

Intellectual Debt

Technical debt is the inability to maintain your complex software system.

Intellectual debt is the inability to explain your software system.

The Three Ds of Machine Learning Systems Design

- Three primary challenges of Machine Learning Systems Design:

  - Decomposition

  - Data

  - Deployment

Decomposition

- ML is not Magical Pixie Dust.

- It cannot be sprinkled thoughtlessly.

- We cannot simply automate all decisions through data.

We are constrained by:

- Our data.

- The models.

Decomposition of Task

- Separation of Concerns

- Careful thought needs to be put into the sub-processes of a task.

- Any repetitive task is a candidate for automation.

Pigeonholing

- Can we decompose the decision we need into repetitive sub-tasks where inputs and outputs are well defined?

- Are those repetitive sub-tasks well represented by a mathematical mapping?
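As a rough illustration of the two questions above, the sketch below decomposes a made-up stock reordering decision into two sub-tasks, each written as a mapping with explicit input and output types. Every name here (`DemandForecast`, `mean_forecast`, `reorder_quantity`, `decide`) is hypothetical, and the mean forecast is only a stand-in for a learned model.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class DemandForecast:
    units: float


# Each sub-task is a mapping with a well-defined input and output.
ForecastDemand = Callable[[Sequence[float]], DemandForecast]
ReorderRule = Callable[[DemandForecast, int], int]


def mean_forecast(history: Sequence[float]) -> DemandForecast:
    """Stand-in for a learned model: predict the mean of past demand."""
    return DemandForecast(units=sum(history) / len(history))


def reorder_quantity(forecast: DemandForecast, stock: int) -> int:
    """Hand-written rule: order enough to cover the forecast, never negative."""
    return max(0, round(forecast.units) - stock)


def decide(
    history: Sequence[float],
    stock: int,
    forecast_fn: ForecastDemand = mean_forecast,
    rule: ReorderRule = reorder_quantity,
) -> int:
    """The overall decision is the composition of the two sub-tasks."""
    return rule(forecast_fn(history), stock)


print(decide([10, 12, 14], stock=5))   # 7
print(decide([10, 12, 14], stock=20))  # 0
```

Because the interface between the sub-tasks is explicit, either mapping can be replaced or monitored on its own, which is where the quality of the decomposition matters more than the choice of model.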

A Trap

- Overemphasis on the type of model we’re deploying.

- Underemphasis on the appropriateness of the task decomposition.

Chicken and Egg

Co-evolution

- Absolute decomposition is impossible.

- If we deploy a weak component in one place, the downstream system will compensate.

- Systems co-evolve … there is no simple solution.

- Trade-off between performance and decomposability.

- Need to monitor deployment.

Data Science as Debugging

- Analogy: for software engineers, describe data science as debugging.

80/20 in Data Science

- Anecdotally, for a given challenge:

  - 80% of time is spent on data wrangling.

  - 20% of time is spent on modelling.

- Many companies employ ML Engineers focussing on models, not data.

Deployment

Premise

Our machine learning is based on a software systems view that is 20 years out of date.

Continuous Deployment

- Deployment of modeling code.

- Data dependent models in production need continuous monitoring.

- Continuous monitoring implies statistical tests rather than classic software tests.
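One way to picture a statistical test standing in for a classic software test is sketched below. It is not taken from the talk: the function names, the one-sided z-test using a normal approximation, and the threshold of -2.33 (roughly a 1% one-sided level) are all assumptions made for illustration. Where a unit test asserts an exact output, this check asks whether recent accuracy is significantly below the baseline.

```python
import math


def accuracy_drop_z(baseline_accuracy: float, correct: int, total: int) -> float:
    """z-score of recent accuracy against the baseline (normal approximation)."""
    observed = correct / total
    std_err = math.sqrt(baseline_accuracy * (1.0 - baseline_accuracy) / total)
    return (observed - baseline_accuracy) / std_err


def has_degraded(
    baseline_accuracy: float,
    correct: int,
    total: int,
    z_threshold: float = -2.33,  # assumed threshold, about a 1% one-sided level
) -> bool:
    """Flag the model only when the drop is unlikely to be sampling noise."""
    return accuracy_drop_z(baseline_accuracy, correct, total) < z_threshold


# Baseline accuracy 0.90; two hypothetical monitoring windows of 400 predictions.
print(has_degraded(0.90, correct=356, total=400))  # False: 0.89 is within noise
print(has_degraded(0.90, correct=340, total=400))  # True: 0.85 is a significant drop
```

The result is a probabilistic judgement rather than a pass or fail on a fixed expected value, so the threshold is a design choice that trades false alarms against missed degradation.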

Continuous Monitoring

- Continuous deployment:

  - We’ve changed the code, we should test the effect.

- Continuous Monitoring:

  - The world around us is changing, we should monitor the effect.

- Update our notions of testing: progression testing
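The slides do not define progression testing in detail. The sketch below is one possible reading, offered as an assumption: because the world changes, a model is judged on the most recent labelled data, and a candidate passes only if it is no worse there than the model already deployed. The names `error_rate` and `progression_test`, the `tolerance` parameter, and the toy data are hypothetical.

```python
from typing import Callable, Sequence, Tuple

Labelled = Tuple[float, bool]  # (feature value, label)
Model = Callable[[float], bool]


def error_rate(model: Model, data: Sequence[Labelled]) -> float:
    """Fraction of examples the model gets wrong."""
    return sum(model(x) != y for x, y in data) / len(data)


def progression_test(
    deployed: Model,
    candidate: Model,
    recent: Sequence[Labelled],
    tolerance: float = 0.0,
) -> bool:
    """Pass only if the candidate is no worse than the deployed model on recent data."""
    return error_rate(candidate, recent) <= error_rate(deployed, recent) + tolerance


# Made-up recent data in which the decision boundary has moved from 3 to 4.
recent = [(1.0, False), (2.0, False), (3.0, False), (4.0, True)]


def old_model(x: float) -> bool:
    return x >= 3.0


def new_model(x: float) -> bool:
    return x >= 4.0


print(progression_test(old_model, new_model, recent))  # True
print(progression_test(new_model, old_model, recent))  # False
```

Unlike a regression test, the reference data is refreshed over time, so the same check can start failing without any change to the code.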

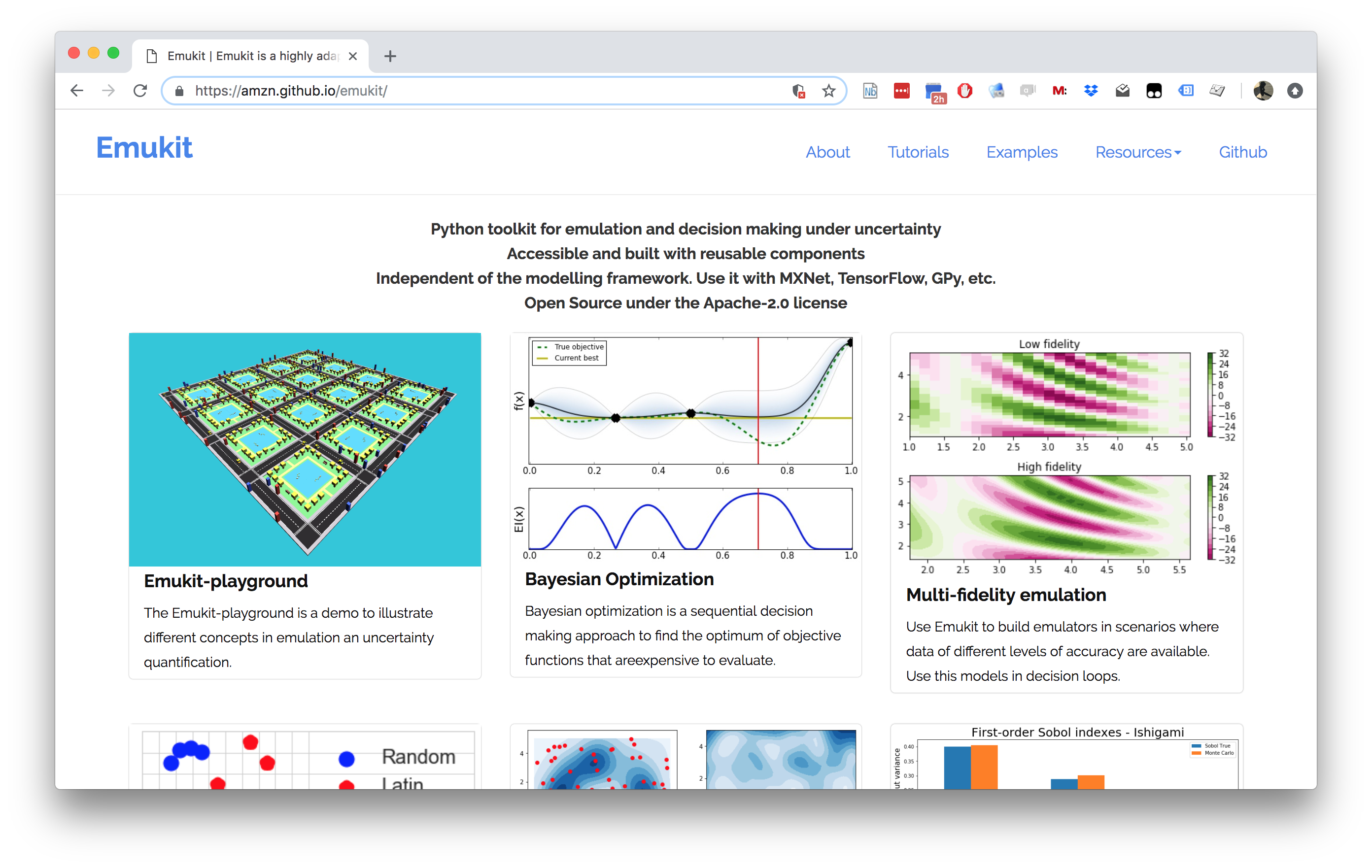

Data-oriented Architectures

Milan

- A general-purpose stream algebra that encodes relationships between data streams (the Milan Intermediate Language or Milan IL).

- A Scala library for building programs in that algebra.

- A compiler that takes programs expressed in Milan IL and produces a Flink application that executes the program.

Context

Stateless Services

Meta Modelling

Conclusion

- AI will not (yet) adapt to the human.

- Humans must adapt to the machine.

- Good design is more important than ever.

Thanks!

twitter: @lawrennd

podcast: The Talking Machines

newspaper: Guardian Profile Page

blog posts:

Natural and Artificial Intelligence