The Atomic Human

Understanding Ourselves in the Age of AI

St Andrews’ Distinguished Lecture Series

O M D P C F B V

H G J Q Z Y X K W

| | | |
|---|---|---|
| bits/min | billions | 2,000 |
| billion calculations/s | ~100 | a billion |
| embodiment | 20 minutes | 5 billion years |

User: Hi ChatGPT, Could you write soliloquy that introduces what a Large Language Model is as spoken by Mercutio?

Machine: Ah, friends, lend me your ears, I prithee, For now I must utter a tale of wonder, Of a creation divine, called the Large Language Model, An oracle, a sorcerer, a wordsmith, yea verily, a master of the quill.

From the depths of this scholarly dominion, A creature of artifice did rise; Twas wrought of ones and zeroes, woven as if by Puck’s own hand, To mimic our thoughts, our voice, our very essence.

A thousand minds, nay, a millionfold, Conjoined in this digital Athenaeum, Words and phrases, like soldiers in battle, Marching to form the verses of our age.

This Large Language Model, a changeling so fair, Hath the power to bring forth wisdom, To transmute our queries, our curiosities, Into answers, clear as a midsummer’s day.

Yet, beware, my companions, for magic hath its limits, Not unlike the mortal bounds of we who dwell in flesh, The Large Language Model hath its strengths, But ’tis not an all-knowing oracle, a god it is not.

As Mercutio, your humble servant, doth stand before thee, I marvel at the art and science that hath brought forth this wondrous creation, This Large Language Model, a testament to man’s yearning, To comprehend the world and make meaning of the great tapestry of existence.

In faith, dear friends, let us embrace the magic and beauty of this digital bard, And mayhaps, by its grace, we shall glean some wisdom, As we wander together, hand in hand, Through this boundless realm of knowledge and wonder.

ChatGPT transcript, 14th April 2023.

Again universities are to treat each applicant fairly on the basis of ability and promise, but they are supposed also to admit a socially more representative intake.

There’s no guarantee that the process meets the target.

Onora O’Neill, A Question of Trust: Called to Account, Reith Lectures 2002 (O’Neill, 2002)

The Structure of Scientific Revolutions

Lecture 2

The Great AI Fallacy

Intellectual Debt

Technical Debt

- Compare with technical debt.

- Highlighted by Sculley et al. (2015).

Separation of Concerns

Intellectual Debt

Technical debt is the inability to maintain your complex software system.

Intellectual debt is the inability to explain your software system.

The Horizon Scandal

The Mythical Man-month

Artificial vs Natural Systems

- Consider natural intelligence, or natural systems

- Contrast between an artificial system and a natural system.

- The key difference between the two is that artificial systems are designed whereas natural systems are evolved.

Natural Systems are Evolved

Survival of the fittest

?

Natural Systems are Evolved

Survival of the fittest

Herbert Spencer, 1864

Natural Systems are Evolved

Non-survival of the non-fit

Mistake we Make

- Equate fitness with an objective function.

- Assume a static environment and a known objective.

Technical Consequence

- Classical systems design assumes decomposability.

- Data-driven systems interfere with decomposability.

Bits and Atoms

- The gap between the game and reality.

- The need for extrapolation over interpolation.

Ride Allocation Prediction

Data Oriented Architectures

- View data as a first-class citizen.

- Prioritise decentralisation.

- Openness

Data Oriented Architectures

- Historically we’ve been software first

- A necessary but not sufficient condition for data first

- Move from service oriented architectures to data oriented architectures.

Data Oriented Principles

How LLMs are Different

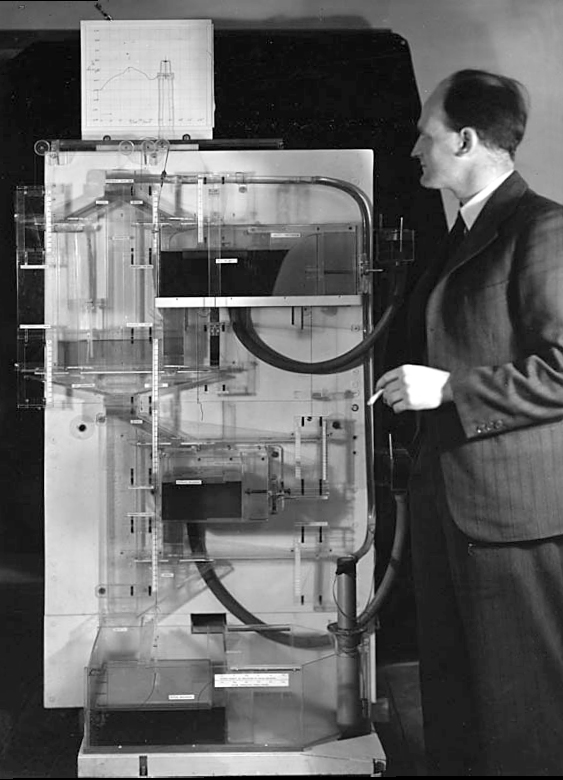

The MONIAC

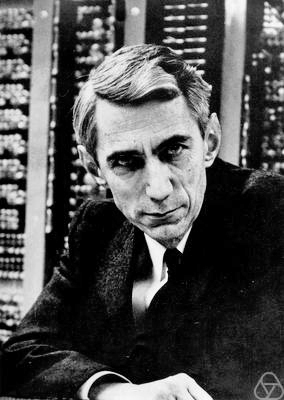

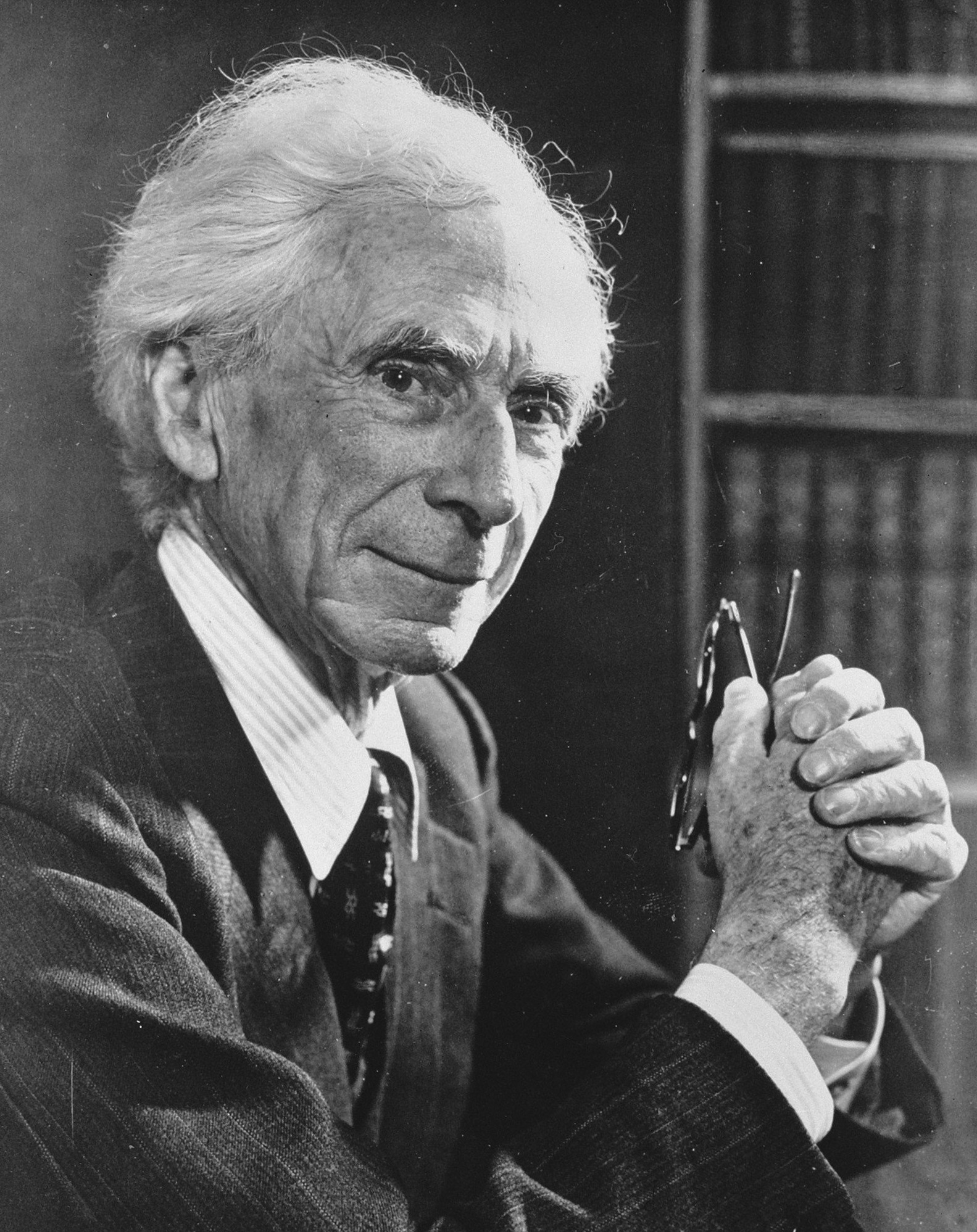

Donald MacKay

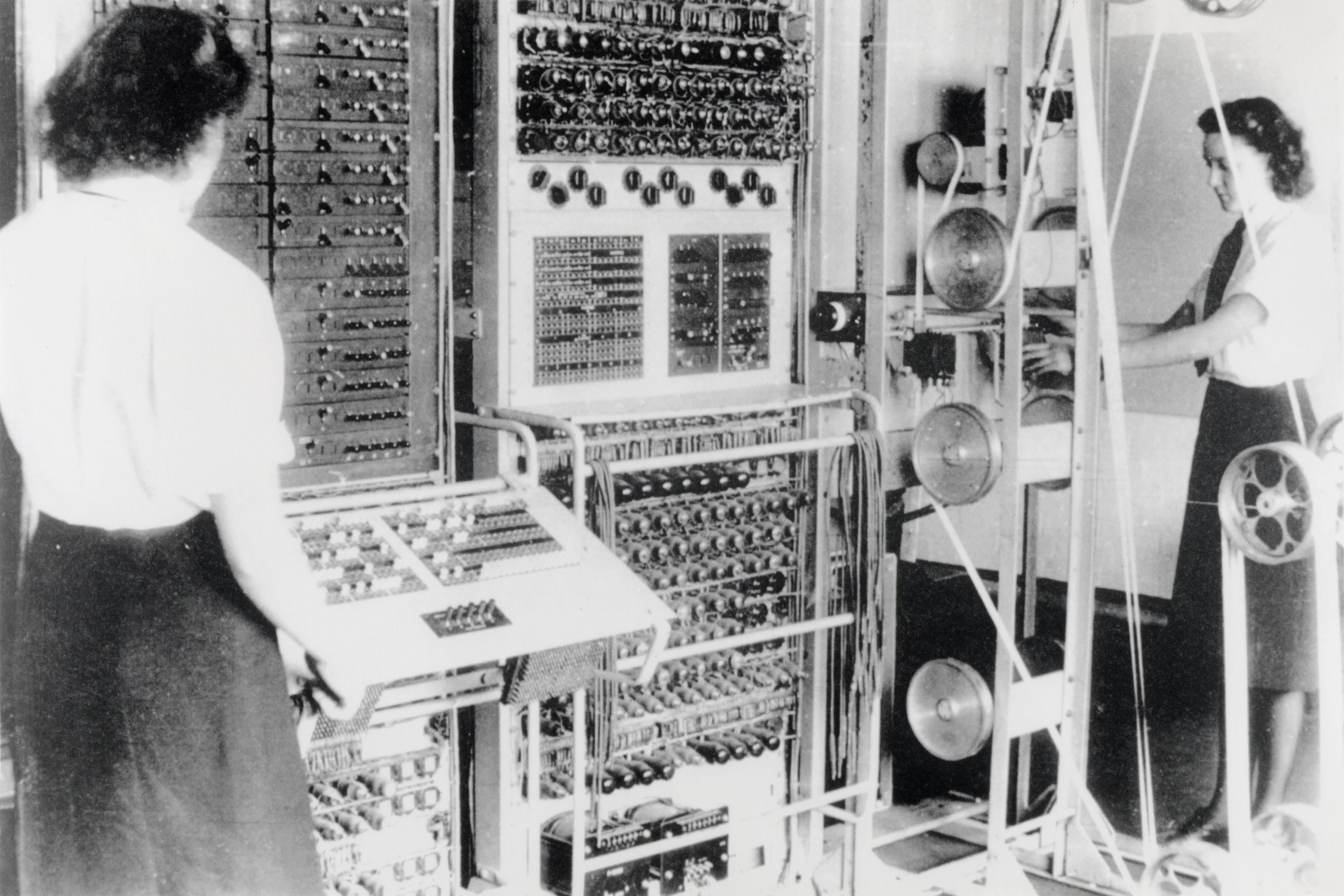

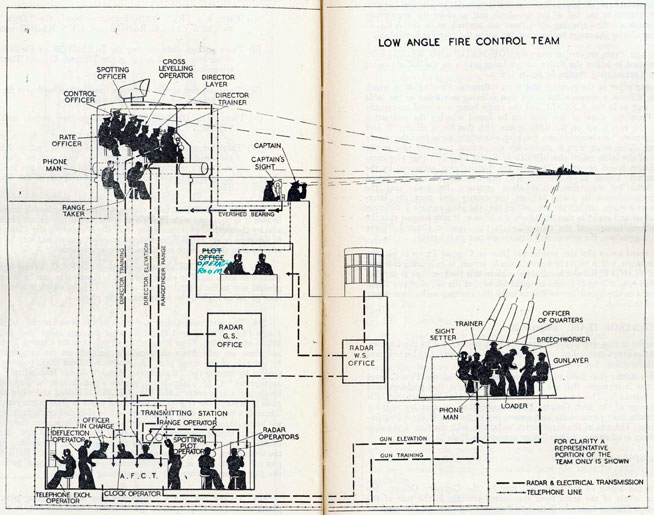

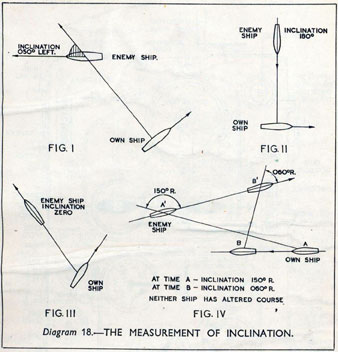

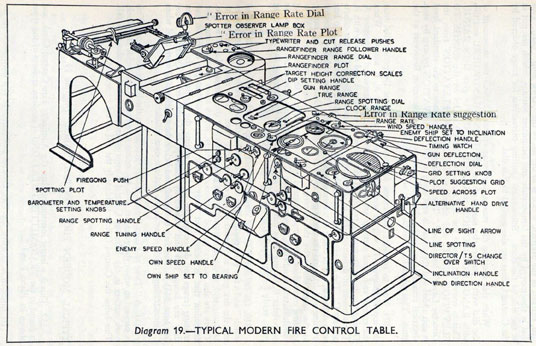

Fire Control Systems

Behind the Eye

Later in the 1940’s, when I was doing my Ph.D. work, there was much talk of the brain as a computer and of the early digital computers that were just making the headlines as “electronic brains.” As an analogue computer man I felt strongly convinced that the brain, whatever it was, was not a digital computer. I didn’t think it was an analogue computer either in the conventional sense.

Human Analogue Machine

A human-analogue machine is a machine that has created a feature space that is analogous to the “feature space” our brain uses to reason.

The latest generation of LLMs is exhibiting this characteristic, giving them the ability to converse.

Heider and Simmel (1944)

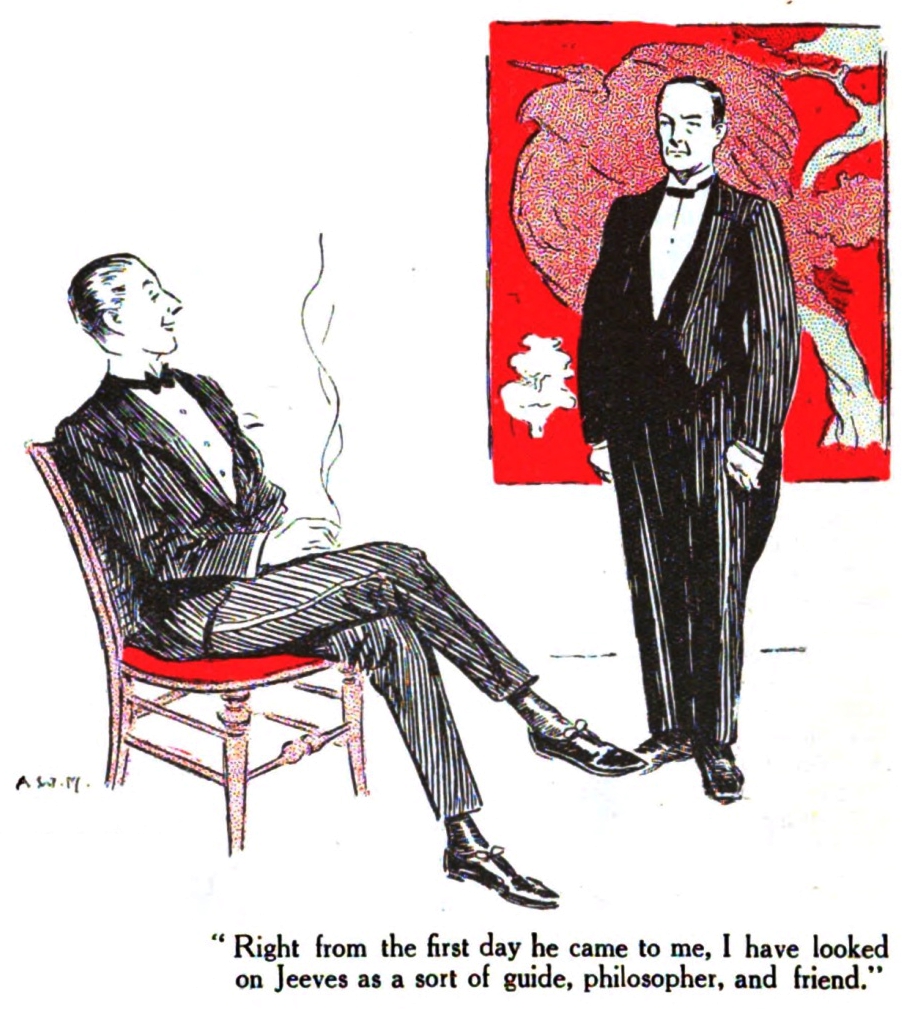

Counterfeit People

- Perils of this include counterfeit people.

- Daniel Dennett has described the challenges these bring in an article in The Atlantic.

Psychological Representation of the Machine

But if done correctly, the machine can be appropriately “psychologically represented”.

This might allow us to deal with the challenge of intellectual debt where we create machines we cannot explain.

In practice …

LLMs are already being used for robot planning (Huang et al., 2023).

Ambiguities are reduced when the machine has had large scale access to human cultural understanding.

Inner Monologue

HAM

Networked Interactions

Known Biases

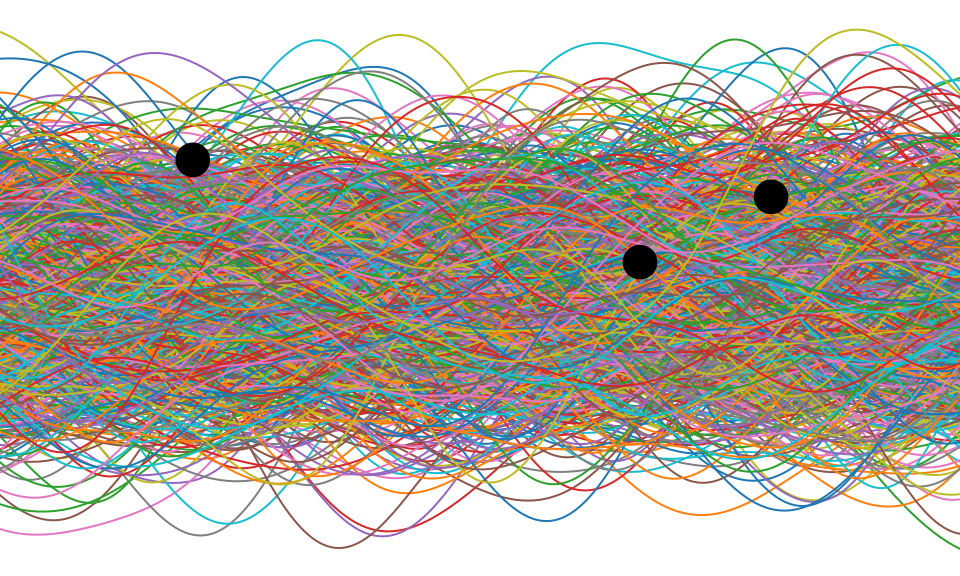

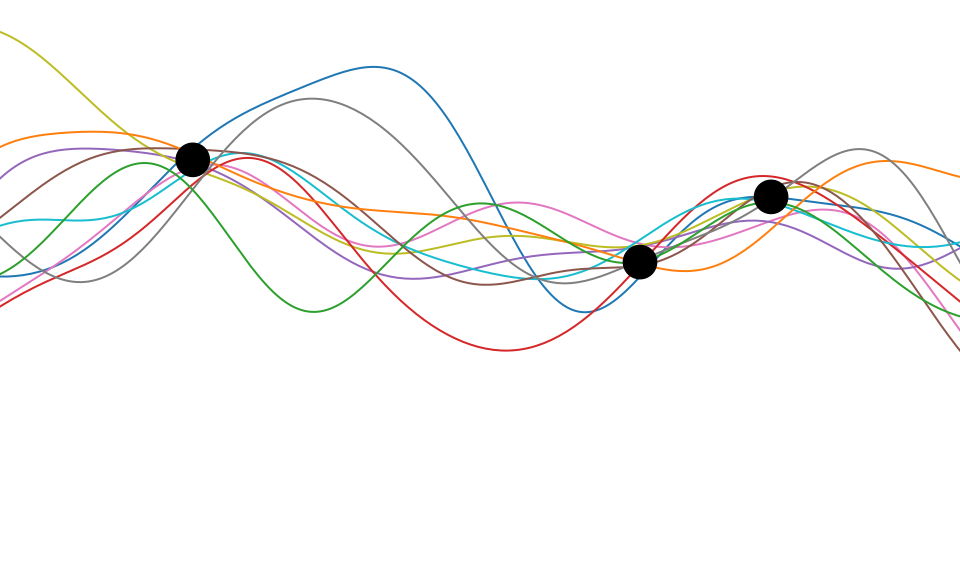

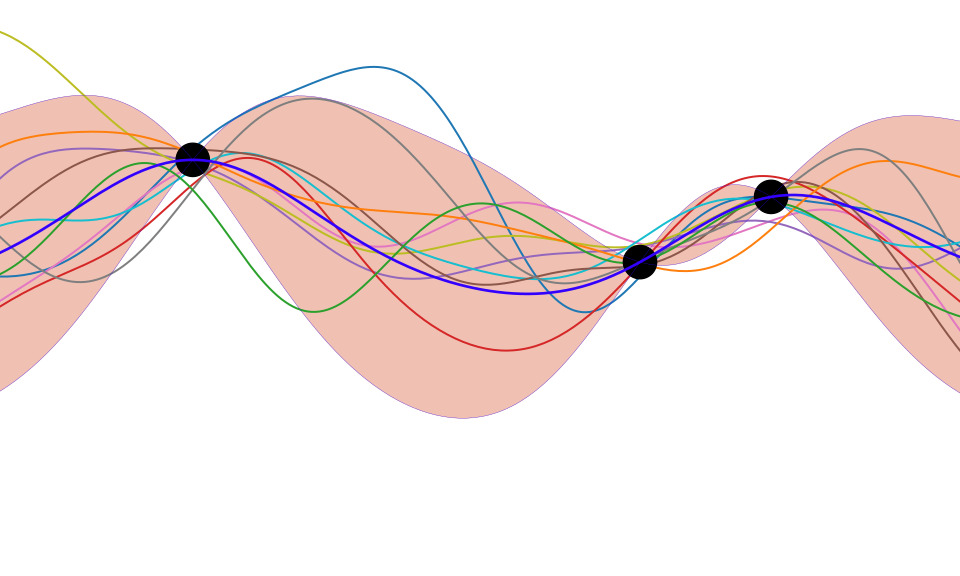

Complexity in Action

A Hypothesis as a Liability

“ ‘When someone seeks,’ said Siddhartha, ‘then it easily happens that his eyes see only the thing that he seeks, and he is able to find nothing, to take in nothing. […] Seeking means: having a goal. But finding means: being free, being open, having no goal.’ ”

Hermann Hesse

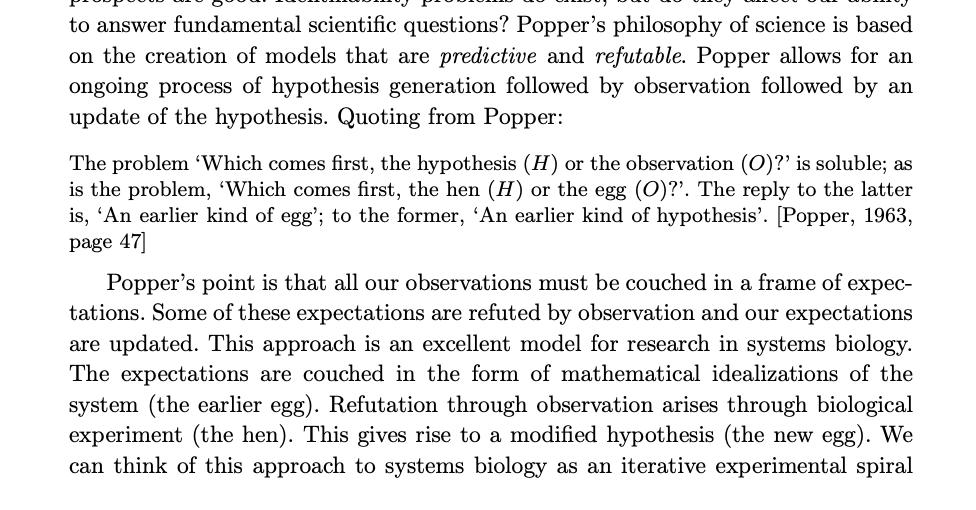

The Scientific Process

Conclusions

- Intellectual debt means we don’t understand the digital computer.

- Interaction between focus and context is part of how we deal with this challenge.

- LLMs could make these challenges harder but if well deployed could help.

Lecture 3

Richard Feynman on Doubt

One thing is I can live with doubt, and uncertainty and not knowing. I think it’s much more interesting to live with not knowing than to have an answer that might be wrong.

Richard P. Feynman in The Pleasure of Finding Things Out, 1981.

In practice …

There is a lot of evidence that probabilities aren’t interpretable.

See e.g. Thompson (1989)

There are three types of lies: lies, damned lies and statistics

??

There are three types of lies: lies, damned lies and statistics

Arthur Balfour 1848-1930

There are three types of lies: lies, damned lies and ‘big data’

Neil Lawrence 1972-?

Mathematical Statistics

‘Mathematical Data Science’

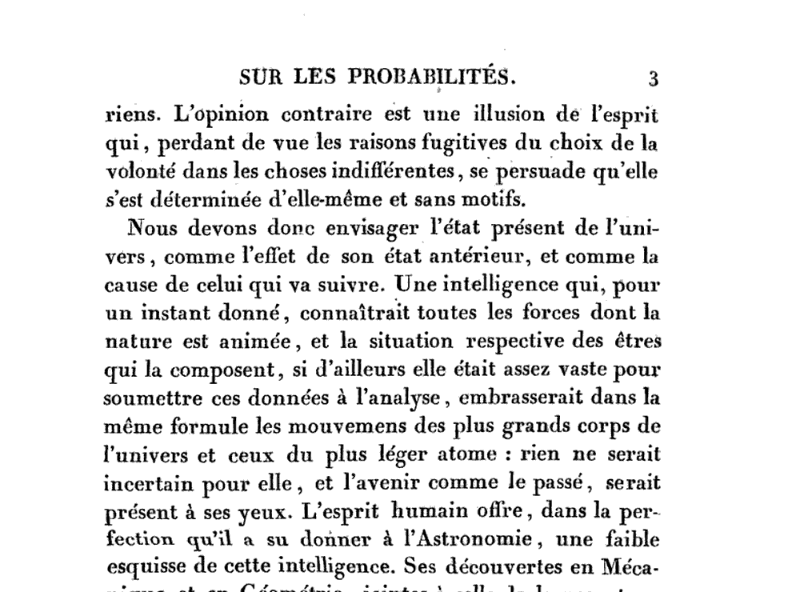

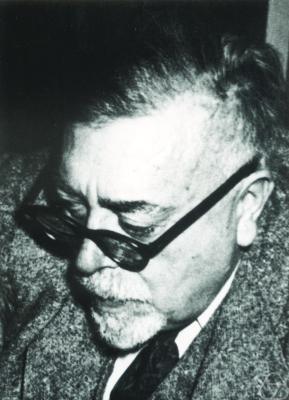

Laplace’s Demon

Philosophical Essay on Probabilities Laplace (1814) pg 3

Machine Learning

Theory of Everything

If we do discover a theory of everything … it would be the ultimate triumph of human reason – for then we would truly know the mind of God

Stephen Hawking in A Brief History of Time 1988

Game of Life

Loneliness

Crowding

Birth

Glider
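The loneliness, crowding and birth rules named above can be sketched in a few lines of Python. This is a minimal illustration, assuming the standard Conway rules (a live cell with fewer than two live neighbours dies, one with more than three dies, and a dead cell with exactly three is born); the function name `step` and the set-of-coordinates representation are choices made here, not something taken from the slides.

```python
from collections import Counter


def step(live):
    """Advance a set of live (row, col) cells by one generation."""
    # Count live neighbours for every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    new_live = set()
    for cell, n in counts.items():
        if cell in live and n in (2, 3):
            new_live.add(cell)  # survives: neither lonely (<2) nor crowded (>3)
        elif cell not in live and n == 3:
            new_live.add(cell)  # birth: exactly three live neighbours
    return new_live


# A glider: five cells whose shape reappears, shifted diagonally, every four steps.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
state = glider
for _ in range(4):
    state = step(state)

shifted = {(r + 1, c + 1) for r, c in glider}
print(state == shifted)  # True
```

None of the three rules mentions movement, yet the glider travels across the grid: a small example of behaviour that emerges from local laws.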

Loafer

Emergent Behaviour

Laplace’s Gremlin

Philosophical Essay on Probabilities Laplace (1814) pg 5

Abstraction and Emergent Properties

Abstraction

- We often abstract smaller scales away e.g. in statistical mechanics.

- When we’re abstracting finer length scales we can introduce uncertainties.

- E.g. Maxwell-Boltzmann distribution for ideal Gas.
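For reference, the standard textbook form of the Maxwell-Boltzmann speed distribution is given below. The symbols are assumptions introduced here rather than notation from the slides: \(v\) is the particle speed, \(m\) the particle mass, \(T\) the temperature and \(k_B\) Boltzmann's constant.

\[ p(v) = 4\pi \left(\frac{m}{2\pi k_B T}\right)^{3/2} v^2 \exp\left(-\frac{m v^2}{2 k_B T}\right) \]

The positions and velocities of individual molecules have been abstracted away; what remains is a probability distribution over speeds, which is where the uncertainty enters.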

Emergence

- But fine-scale local laws also lead to emergent properties.

- Some of these properties exist at large scale.

- In particular, when there are complex interactions between molecules.

Molecular Dynamics Simulations

Quantum Mechanics

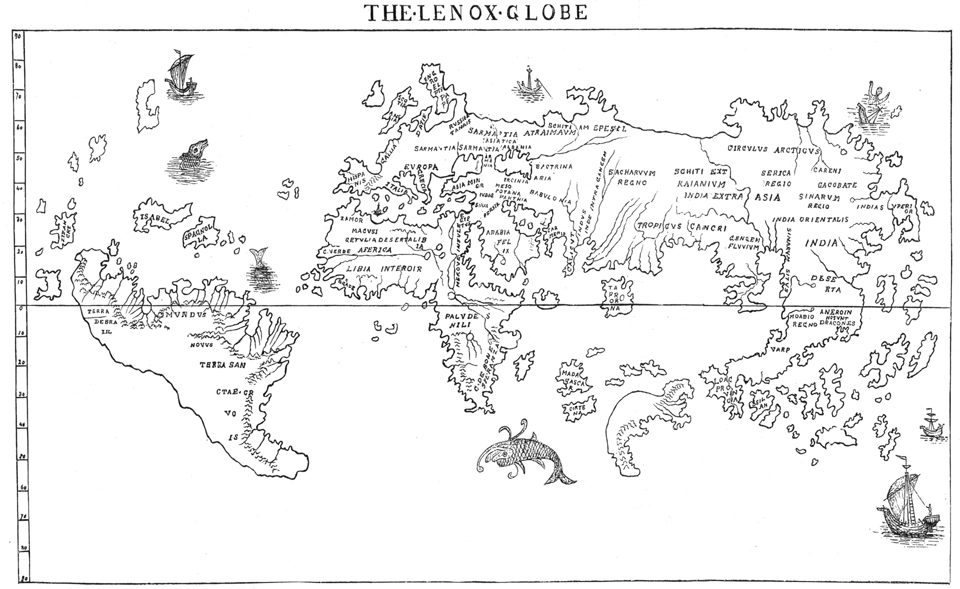

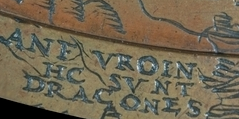

Lenox Globe

Drawing of Lenox Globe

Hic Sunt Dracones

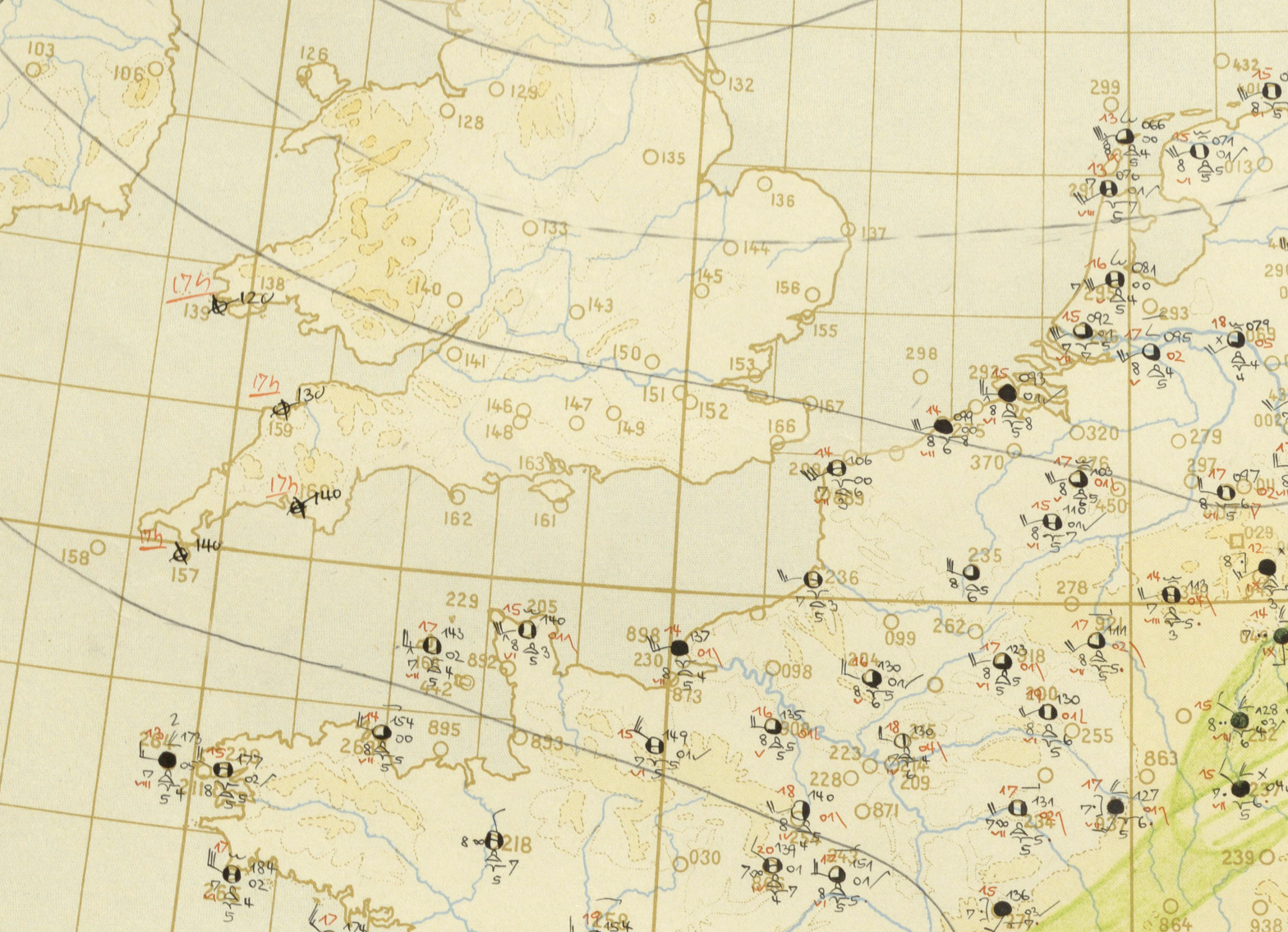

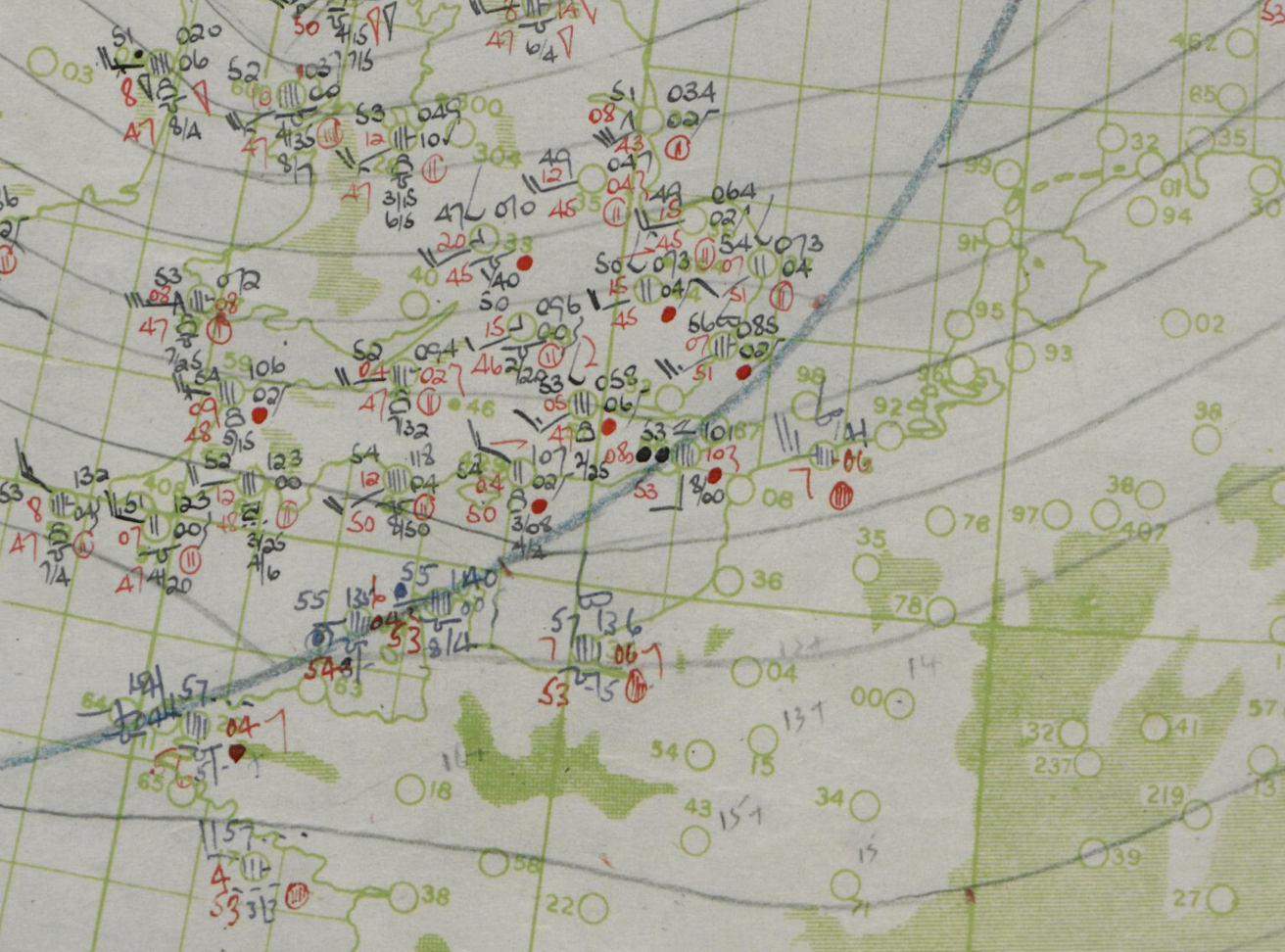

Weather

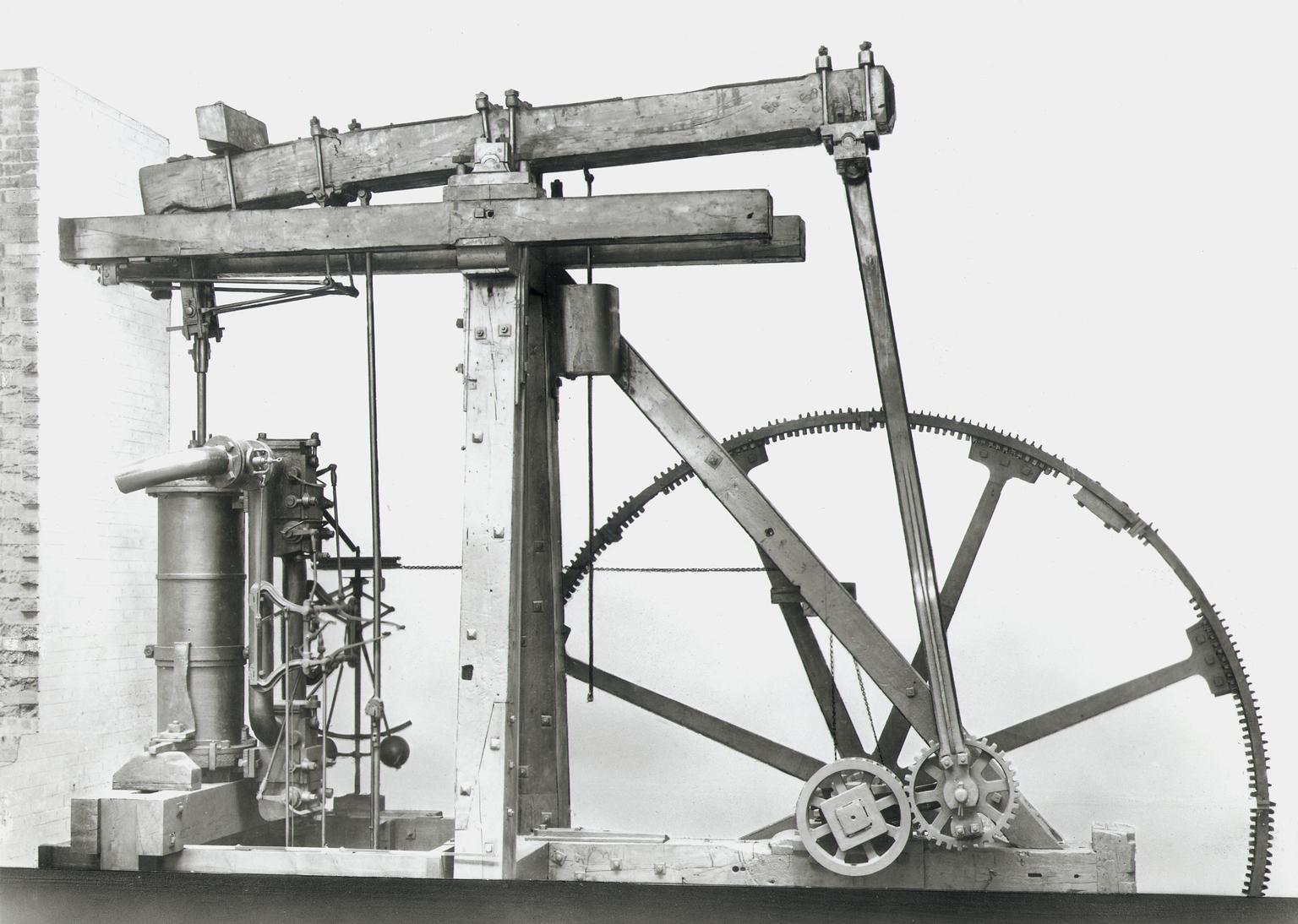

Boulton and Watt’s Lap Engine

\[ \text{total energy} = \text{available energy} + \text{temperature} \times \text{entropy} \]

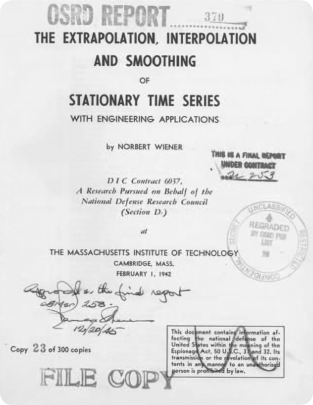

Brownian Motion and Wiener

Brownian Motion

Stochasticity and Control

Statistical Physics

Entropy Billiards

Maxwell’s Demon

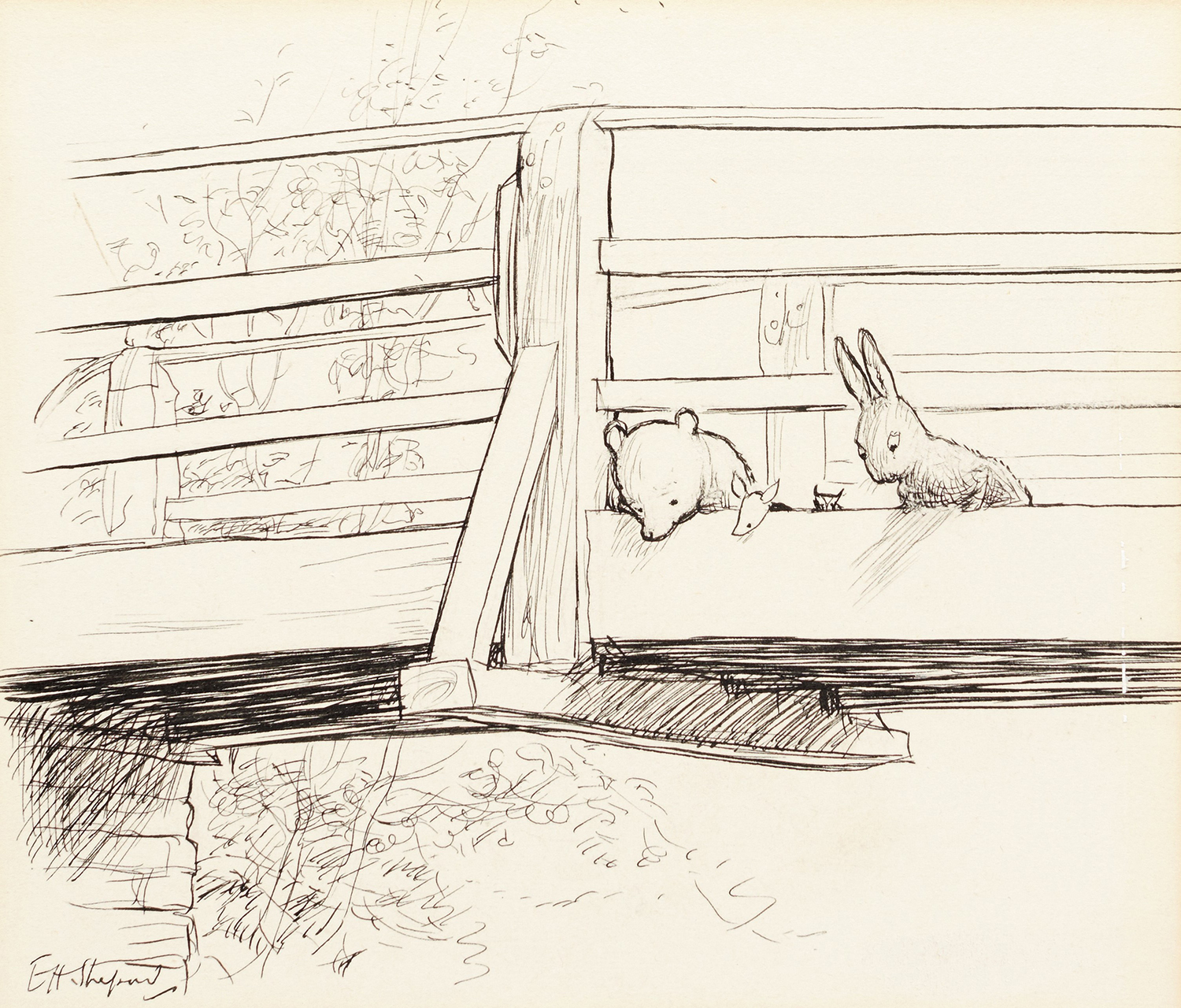

When you are a Bear of Very Little Brain, and you Think of Things, you find sometimes that a Thing which seemed very Thingish inside you is quite different when it gets out into the open and has other people looking at it.

A.A. Milne as Winnie-the-Pooh in The House at Pooh Corner, 1928

What is Machine Learning?

\[ \text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

- data : observations, could be actively or passively acquired (meta-data).

- model : assumptions, based on previous experience (other data! transfer learning etc), or beliefs about the regularities of the universe. Inductive bias.

- prediction : an action to be taken or a categorization or a quality score.

- Royal Society Report: Machine Learning: Power and Promise of Computers that Learn by Example

What is Machine Learning?

\[\text{data} + \text{model} \stackrel{\text{compute}}{\rightarrow} \text{prediction}\]

- To combine data with a model we need:

  - a prediction function \(f(\cdot)\), which includes our beliefs about the regularities of the universe;

  - an objective function \(E(\cdot)\), which defines the cost of misprediction.
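The two ingredients can be made concrete with a small sketch. It assumes a straight-line prediction function and a sum-of-squares objective, both chosen here purely for illustration; the toy observations are made up, and the helper `fit` is a hypothetical name for the closed-form least squares solution.

```python
def f(x, w, b):
    """Prediction function: a straight line, encoding a belief that the data are roughly linear."""
    return w * x + b


def E(w, b, xs, ys):
    """Objective function: sum of squared errors, the cost of misprediction."""
    return sum((y - f(x, w, b)) ** 2 for x, y in zip(xs, ys))


def fit(xs, ys):
    """Closed-form least squares minimiser of E for the linear f."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    w = sxy / sxx
    b = y_mean - w * x_mean
    return w, b


# Toy, made-up observations for illustration only.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = fit(xs, ys)            # "compute": combine data with the model
prediction = f(4.0, w, b)     # prediction at a new input
print(w, b, prediction, E(w, b, xs, ys))
```

Changing either ingredient changes the answer: a different \(f(\cdot)\) encodes different beliefs about regularity, and a different \(E(\cdot)\) penalises mistakes differently.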

Kappenball

Prime Air

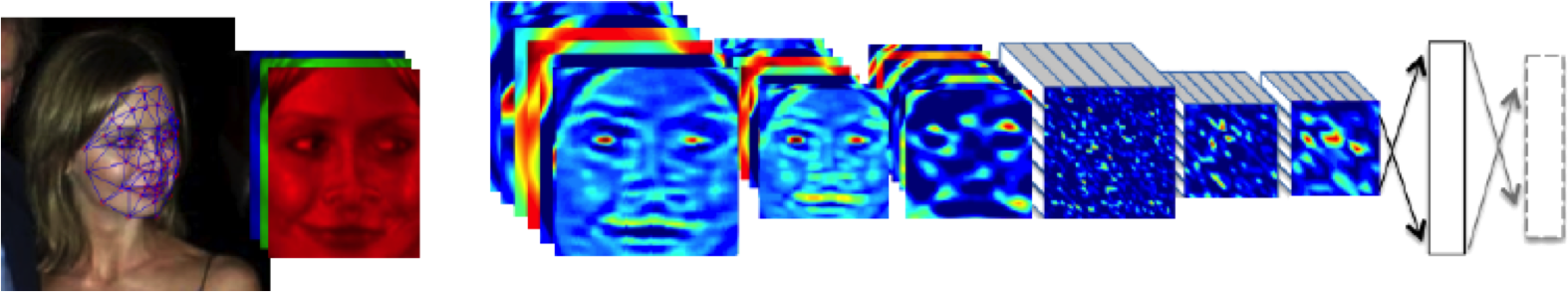

DeepFace

Outline of the DeepFace architecture. A front-end of a single convolution-pooling-convolution filtering on the rectified input, followed by three locally-connected layers and two fully-connected layers. Color illustrates feature maps produced at each layer. The net includes more than 120 million parameters, where more than 95% come from the local and fully connected layers.

Deep Learning as Pinball

Deep Neural Network

Mathematically

\[ \begin{align*} \mathbf{ h}_{1} &= \phi\left(\mathbf{W}_1 \mathbf{ x}\right)\\ \mathbf{ h}_{2} &= \phi\left(\mathbf{W}_2\mathbf{ h}_{1}\right)\\ \mathbf{ h}_{3} &= \phi\left(\mathbf{W}_3 \mathbf{ h}_{2}\right)\\ f&= \mathbf{ w}_4 ^\top\mathbf{ h}_{3} \end{align*} \]
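The equations above translate directly into a forward pass. The sketch below uses NumPy and assumes \(\phi\) is `tanh` (the slides leave the activation unspecified); the layer sizes and random weights are hypothetical values chosen only so that the code runs.

```python
import numpy as np


def phi(a):
    """Activation function; tanh is an assumed choice."""
    return np.tanh(a)


def forward(x, W1, W2, W3, w4):
    """Compute f = w4^T h3 with h_k = phi(W_k h_{k-1}) and h_0 = x."""
    h1 = phi(W1 @ x)
    h2 = phi(W2 @ h1)
    h3 = phi(W3 @ h2)
    return w4 @ h3  # scalar output f


rng = np.random.default_rng(0)
d_in, width = 3, 5  # hypothetical input dimension and layer width

W1 = rng.standard_normal((width, d_in))
W2 = rng.standard_normal((width, width))
W3 = rng.standard_normal((width, width))
w4 = rng.standard_normal(width)

x = rng.standard_normal(d_in)
f_value = forward(x, W1, W2, W3, w4)
print(f_value)
```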

Bottleneck Layers in Deep Neural Networks

Deep Neural Network

Mathematically

The network can now be written mathematically as \[ \begin{align} \mathbf{ z}_{1} &= \mathbf{V}^\top_1 \mathbf{ x}\\ \mathbf{ h}_{1} &= \phi\left(\mathbf{U}_1 \mathbf{ z}_{1}\right)\\ \mathbf{ z}_{2} &= \mathbf{V}^\top_2 \mathbf{ h}_{1}\\ \mathbf{ h}_{2} &= \phi\left(\mathbf{U}_2 \mathbf{ z}_{2}\right)\\ \mathbf{ z}_{3} &= \mathbf{V}^\top_3 \mathbf{ h}_{2}\\ \mathbf{ h}_{3} &= \phi\left(\mathbf{U}_3 \mathbf{ z}_{3}\right)\\ \mathbf{ y}&= \mathbf{ w}_4^\top\mathbf{ h}_{3}. \end{align} \]
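Reading the first two lines of these equations together, \(\mathbf{h}_1 = \phi(\mathbf{U}_1 \mathbf{V}_1^\top \mathbf{x})\), so the bottleneck is equivalent to a full layer whose weight matrix is the low-rank product \(\mathbf{U}_1 \mathbf{V}_1^\top\). The NumPy sketch below checks this for one layer; the sizes, the bottleneck width `q` and the `tanh` activation are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
d_in, width, q = 8, 10, 2  # hypothetical sizes; q is the bottleneck width

V1 = rng.standard_normal((d_in, q))   # V_1: projects the input down to q dimensions
U1 = rng.standard_normal((width, q))  # U_1: expands back up to the layer width
x = rng.standard_normal(d_in)

# Bottleneck form: z_1 = V_1^T x, then h_1 = phi(U_1 z_1).
z1 = V1.T @ x
h1 = np.tanh(U1 @ z1)

# Equivalent full layer with a rank-q weight matrix W_1 = U_1 V_1^T.
W1 = U1 @ V1.T
h1_full = np.tanh(W1 @ x)

params_full = width * d_in              # parameters in an unconstrained W_1
params_bottleneck = q * (width + d_in)  # parameters in U_1 and V_1 together

print(np.allclose(h1, h1_full), params_full, params_bottleneck)
```

With these sizes the bottleneck uses 36 parameters where the unconstrained layer would use 80, at the cost of restricting the weight matrix to rank `q`.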

A Cascade of Neural Networks

\[ \begin{align} \mathbf{ z}_{1} &= \mathbf{V}^\top_1 \mathbf{ x}\\ \mathbf{ z}_{2} &= \mathbf{V}^\top_2 \phi\left(\mathbf{U}_1 \mathbf{ z}_{1}\right)\\ \mathbf{ z}_{3} &= \mathbf{V}^\top_3 \phi\left(\mathbf{U}_2 \mathbf{ z}_{2}\right)\\ \mathbf{ y}&= \mathbf{ w}_4 ^\top \mathbf{ z}_{3} \end{align} \]

Cascade of Gaussian Processes

Replace each neural network with a Gaussian process \[ \begin{align} \mathbf{ z}_{1} &= \mathbf{ f}_1\left(\mathbf{ x}\right)\\ \mathbf{ z}_{2} &= \mathbf{ f}_2\left(\mathbf{ z}_{1}\right)\\ \mathbf{ z}_{3} &= \mathbf{ f}_3\left(\mathbf{ z}_{2}\right)\\ \mathbf{ y}&= \mathbf{ f}_4\left(\mathbf{ z}_{3}\right) \end{align} \]

This is equivalent to placing a prior over the parameters and taking the width of each layer to infinity.
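One way to get a feel for the cascade is to draw a sample from it. The sketch below is a simplification under stated assumptions: every latent layer is one-dimensional, each \(\mathbf{f}_k\) is a zero-mean GP with an exponentiated quadratic covariance, and the helper names `rbf_kernel` and `sample_gp_layer` are invented here. It uses plain NumPy rather than any particular GP library.

```python
import numpy as np


def rbf_kernel(a, b, lengthscale=1.0, variance=1.0):
    """Exponentiated quadratic covariance between 1-D input vectors a and b."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / lengthscale**2)


def sample_gp_layer(inputs, rng, jitter=1e-6):
    """Draw one function sample from a zero-mean GP prior, evaluated at `inputs`."""
    # Jitter on the diagonal keeps the Cholesky factorisation numerically stable.
    K = rbf_kernel(inputs, inputs) + jitter * np.eye(len(inputs))
    L = np.linalg.cholesky(K)
    return L @ rng.standard_normal(len(inputs))


rng = np.random.default_rng(0)
x = np.linspace(-2.0, 2.0, 50)

# Each layer's output becomes the next layer's input.
z1 = sample_gp_layer(x, rng)   # z_1 = f_1(x)
z2 = sample_gp_layer(z1, rng)  # z_2 = f_2(z_1)
z3 = sample_gp_layer(z2, rng)  # z_3 = f_3(z_2)
y = sample_gp_layer(z3, rng)   # y   = f_4(z_3)

print(y.shape)
```

Plotting `y` against `x` for several seeds typically shows functions with sharper transitions and flatter plateaus than a single GP sample with the same covariance.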

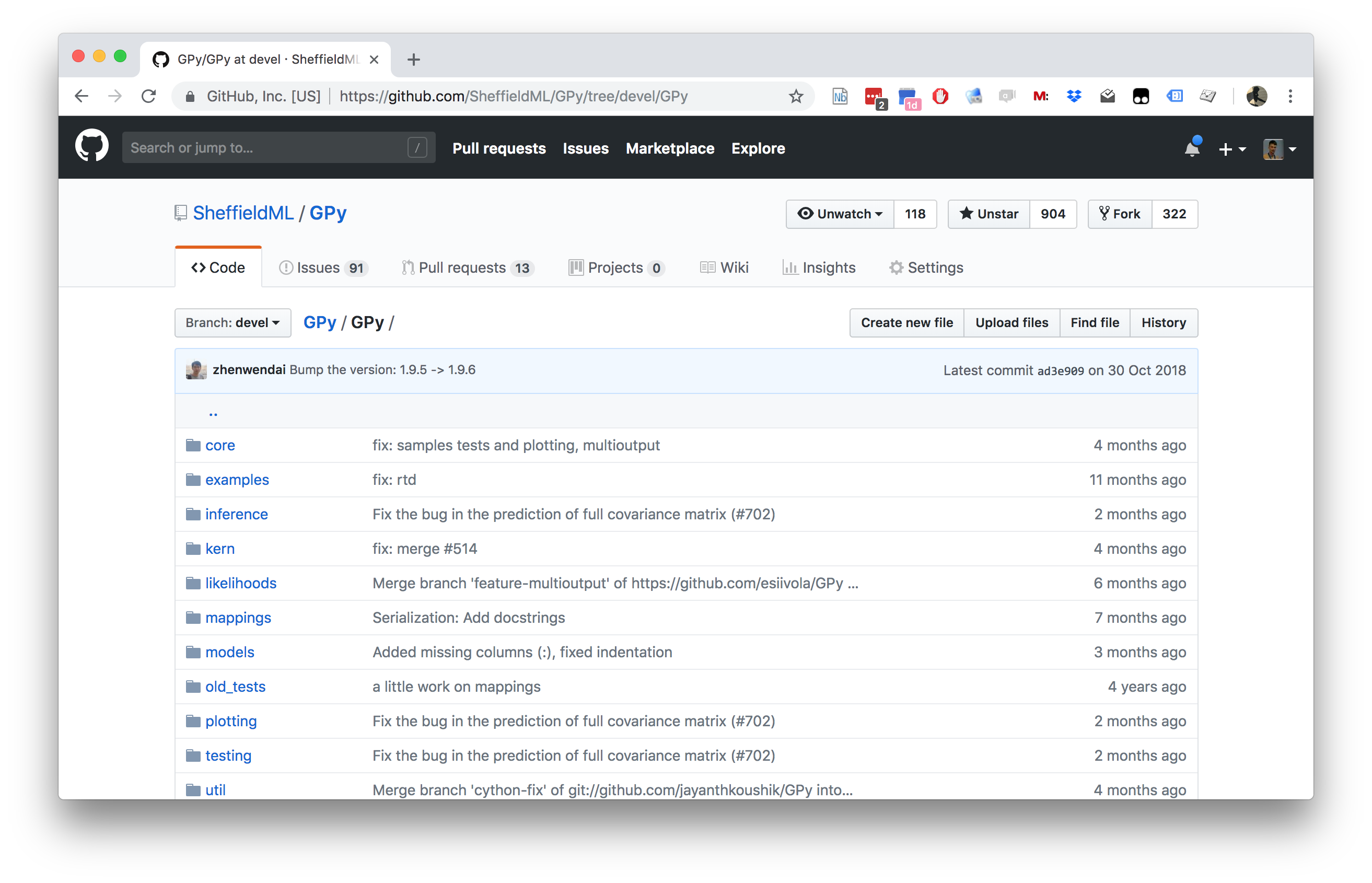

GPy: A Gaussian Process Framework in Python

- BSD Licensed software base.

- Wide availability of libraries, ‘modern’ scripting language.

- Allows us to set projects to undergraduates in Comp Sci that use GPs.

- Available through GitHub https://github.com/SheffieldML/GPy

- Reproducible Research with Jupyter Notebook.

Features

- Probabilistic-style programming (specify the model, not the algorithm).

- Non-Gaussian likelihoods.

- Multivariate outputs.

- Dimensionality reduction.

- Approximations for large data sets.

Olympic Marathon Data

Alan Turing

Probability Winning Olympics?

- He was a formidable Marathon runner.

- In 1946 he ran a time of 2 hours 46 minutes.

- That’s a pace of roughly 3.93 min/km over the full 42.195 km distance.

- What is the probability he would have won an Olympics if one had been held in 1946?
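The pace arithmetic can be checked directly. The result depends slightly on whether the distance is taken as a rounded 42 km or the full 42.195 km; the variable names below are illustrative only.

```python
MARATHON_KM = 42.195  # standard marathon distance in kilometres

time_min = 2 * 60 + 46  # 2 hours 46 minutes, as quoted above

pace_full_distance = time_min / MARATHON_KM  # pace over 42.195 km
pace_rounded_distance = time_min / 42.0      # pace if the distance is rounded to 42 km

print(f"{pace_full_distance:.2f} min/km over 42.195 km")    # 3.93
print(f"{pace_rounded_distance:.2f} min/km over 42 km")     # 3.95
```

Either way, the pace is a single number that can be placed on the same axis as the Olympic winning paces for comparison.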

Gaussian Process Fit

Olympic Marathon Data GP

Deep GP Fit

Can a Deep Gaussian process help?

A deep GP is one GP feeding into another.

Olympic Marathon Data Deep GP

Olympic Marathon Data Latent 1

Olympic Marathon Data Latent 2

Olympic Marathon Pinball Plot

Step Function Data

Step Function Data GP

Step Function Data Deep GP

Step Function Data Latent 1

Step Function Data Latent 2

Step Function Data Latent 3

Step Function Data Latent 4

Step Function Pinball Plot

The Future

- Uncertainty is important …

- But also interaction (intervention, causality)

Conclusions

- HAMs change how we share ambiguous information.

- We need to think about how that affects our sharing of probabilities.

- May allow the machine to be used more widely as a tool.

Thanks!

book: The Atomic Human

twitter: @lawrennd

podcast: The Talking Machines

newspaper: Guardian Profile Page

blog posts:

Natural and Artificial Intelligence