How Do We Cope with Rapid Change Like AI/ML?

The Engineer in Society

Revolution

Coin Pusher

Henry Ford’s Faster Horse

Embodiment Factors

| | computer | human |
|---|---|---|
| bits/min | billions | 2,000 |
| billion calculations/s | ~100 | a billion |
| embodiment | 20 minutes | 5 billion years |
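The figures above can be related with a little arithmetic. The sketch below assumes one reading of the embodiment row that the slides do not state: the time needed to communicate the output of one second of computation. It also assumes one bit per calculation and takes "billions" of bits per minute as 5 billion; both values, and the function name, are illustrative choices rather than figures from the source.

```python
SECONDS_PER_MINUTE = 60
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year


def seconds_to_communicate(calcs_per_second, bits_per_minute, bits_per_calc=1.0):
    """Time in seconds to transmit the output of one second of computation.

    bits_per_calc is an assumption (one bit per calculation by default);
    the slides do not say how calculations map to bits.
    """
    bits_per_second = bits_per_minute / SECONDS_PER_MINUTE
    return calcs_per_second * bits_per_calc / bits_per_second


# Machine: ~100 billion calculations/s; "billions" of bits/min assumed to be 5e9.
machine_seconds = seconds_to_communicate(100e9, 5e9)
# Human: a billion billion calculations/s; 2,000 bits/min.
human_seconds = seconds_to_communicate(1e18, 2_000)

machine_minutes = machine_seconds / SECONDS_PER_MINUTE
human_years = human_seconds / SECONDS_PER_YEAR

print(f"machine: about {machine_minutes:.0f} minutes")
print(f"human: about {human_years / 1e9:.2f} billion years")
```

Under these assumptions the machine figure comes out at about 20 minutes and the human figure at roughly a billion years, which is within an order of magnitude of the 5 billion years in the table. The gap suggests the original calculation uses a different bits-per-calculation conversion, which is not given here.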

New Flow of Information

Evolved Relationship

Public Dialogue on AI in Public Services

- Public dialogues convened in September 2024.

- Perspectives on AI in priority policy agendas.

“I think a lot of the ideas need to be about AI being like a co-pilot to someone. I think it has to be that. So not taking the human away.”

Public Participant, Liverpool pg 15 ai@cam and Hopkins Van Mil (2024)

AI in Healthcare: Public Perspectives

Key aspirations include:

- Reducing administrative burden on clinicians

- Supporting early diagnosis and prevention

- Improving research and drug development

- Better management of complex conditions

Illustrative quotes show the nuanced views:

“My wife [an NHS nurse] says that the paperwork side takes longer than the actual care.”

Public Participant, Liverpool pg 9 ai@cam and Hopkins Van Mil (2024)

“I wouldn’t just want to rely on the technology for something big like that, because obviously it’s a lifechanging situation.”

Public Participant, Cambridge pg 10 ai@cam and Hopkins Van Mil (2024)

AI in Education: Public Perspectives

Key quotes illustrate these views:

“Education isn’t just about learning, it’s about preparing children for life, and you don’t do all of that in front of a screen.”

Public Participant, Cambridge pg 18 ai@cam and Hopkins Van Mil (2024)

“Kids with ADHD or autism might prefer to interact with an iPad than they would a person, it could lighten the load for them.”

Public Participant, Liverpool pg 17 ai@cam and Hopkins Van Mil (2024)

AI in Crime and Policing: Public Perspectives

- Complex attitudes towards AI use.

Key quotes reflect these concerns:

“Trust in the police has been undermined by failures in vetting and appalling misconduct of some officers. I think AI can help this, because the fact is that we, as a society, we know how to compile information.”

Public Participant, Liverpool pg 14 ai@cam and Hopkins Van Mil (2024)

“I’m brown skinned and my mouth will move a bit more or I’m constantly fiddling with my foot… I’ve got ADHD. If facial recognition would see my brown skin, and then I’m moving differently to other people, will they see me as a terrorist?”

Public Participant, Liverpool pg 15 ai@cam and Hopkins Van Mil (2024)

AI in Energy and Net Zero: Public Perspectives

Representative quotes include:

“Everybody being able to generate on their roofs or in their gardens, selling energy from your car back to the grid, power being thrown different ways at different times. You’ve got to be resilient and independent.”

Public Participant, Cambridge pg 20 ai@cam and Hopkins Van Mil (2024)

“Is the infrastructure not a more important aspect than putting in AI systems? Government for years now has known that we need that infrastructure, but it’s always been someone else’s problem, the next government to sort out.”

Public Participant, Liverpool pg 21 ai@cam and Hopkins Van Mil (2024)

Summary

- AI should enhance rather than replace human capabilities

- Strong governance frameworks need to be in place before deployment

- Public engagement and transparency are essential

- Benefits must be distributed fairly across society

- Human-centered service delivery must be maintained

“We need to look at the causes, we need to do some more thinking and not just start using AI to plaster over them [societal issues].”

Public Participant, Cambridge pg 13 ai@cam and Hopkins Van Mil (2024)

The Challenges of Modern Systems

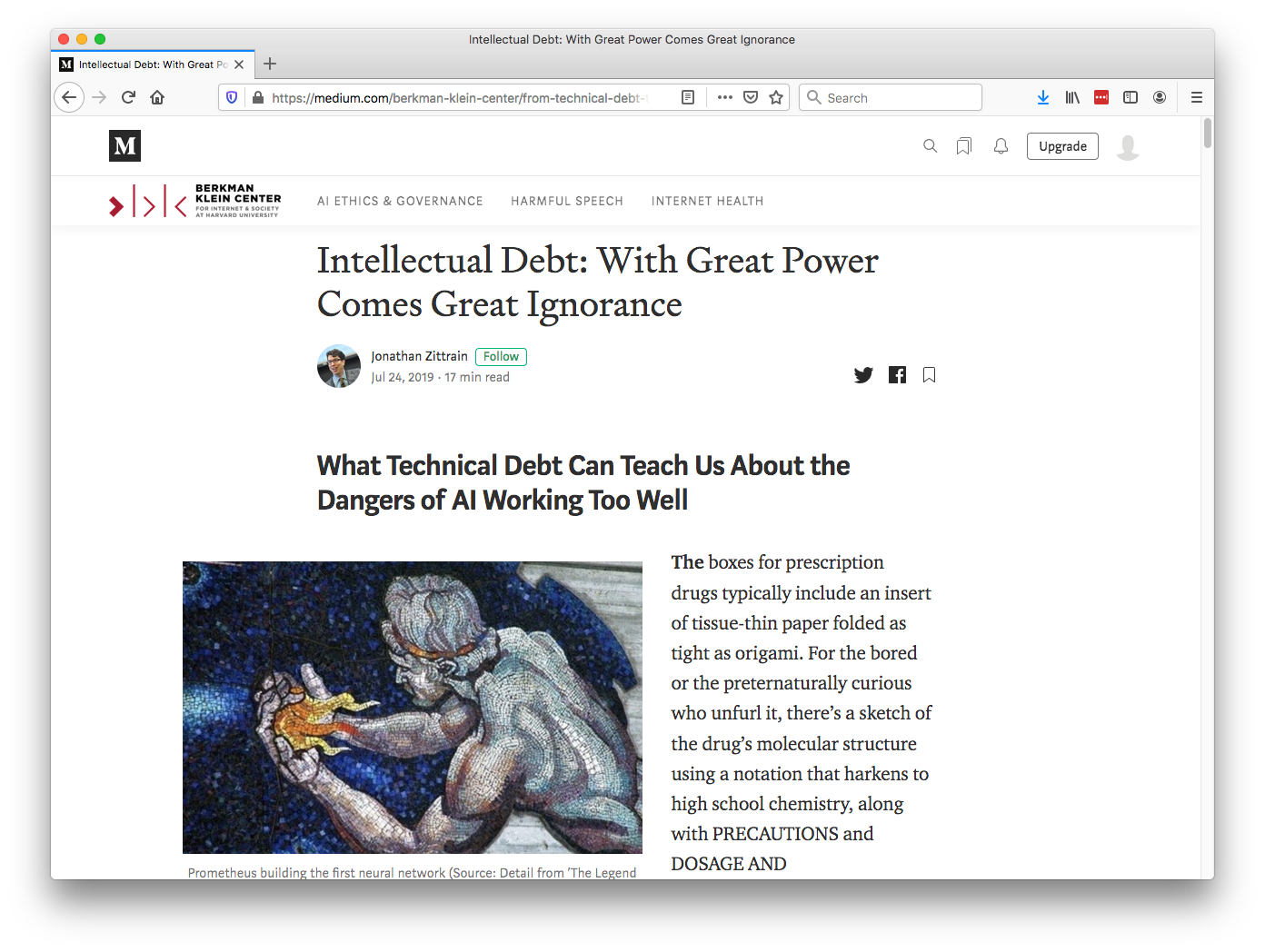

Intellectual Debt

Technical Debt

- Compare with technical debt.

- Highlighted by Sculley et al. (2015).

Separation of Concerns

Intellectual Debt

Technical debt is the inability to maintain your complex software system.

Intellectual debt is the inability to explain your software system.

Technical Consequence

- Classical systems design assumes decomposability.

- Data-driven systems interfere with decomposability.

Bits and Atoms

- The gap between the game and reality.

- The need for extrapolation over interpolation.

Artificial vs Natural Systems

- First rule of a natural system: don’t fail

- Artificial systems tend to optimise performance under some criterion

- The key difference between the two is that artificial systems are designed whereas natural systems are evolved.

Natural Systems are Evolved

Survival of the fittest

Herbert Spencer, 1864

Non-survival of the non-fit

Mistake we Make

- Equate fitness with the objective function.

- Assume static environment and known objective.

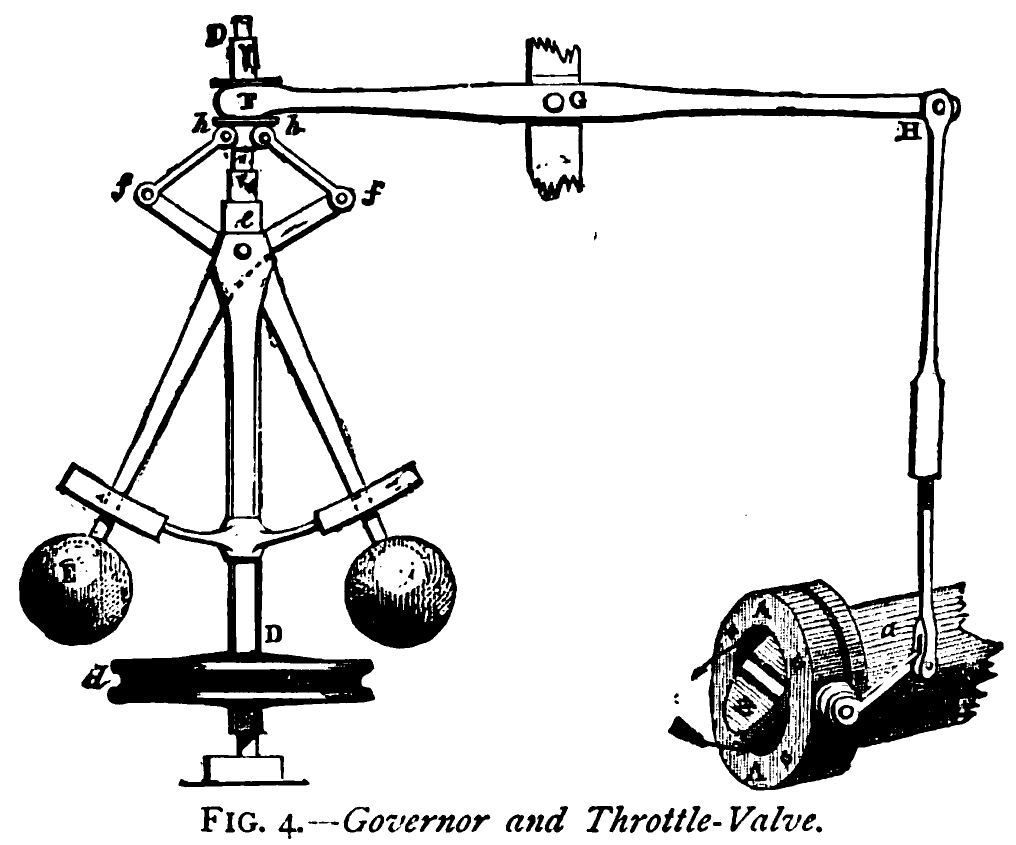

Understanding Through Feedback and Intervention

NACA Langley

Physical and Digital Feedback

- Physical Feedback:

  - Test pilots feel aircraft response

  - Watt’s governor directly senses speed

  - Immediate physical connection

- Digital Feedback:

  - Abstract measurements

  - Delayed response

  - Hidden failure modes

When Feedback Fails

The Horizon Scandal

When Feedback Fails

- Historical Examples:

  - NACA test pilots: direct feedback

  - Amelia Earhart: physical understanding

- Modern Failures:

  - Horizon scandal: hidden errors

  - Lorenzo system: disconnected feedback

  - Lives ruined by lack of understanding

The Lorenzo Scandal

Summary

- Human-machine information bandwidth gap

- Complex systems through separation of concerns

- Engineering feedback enables tool responsiveness

- Challenge of feedback in digital (sociotechnical) systems

- Consequences of not listening

  - Brittle systems

  - User imposition

  - Human impact of failures

Thanks!

book: The Atomic Human

twitter: @lawrennd

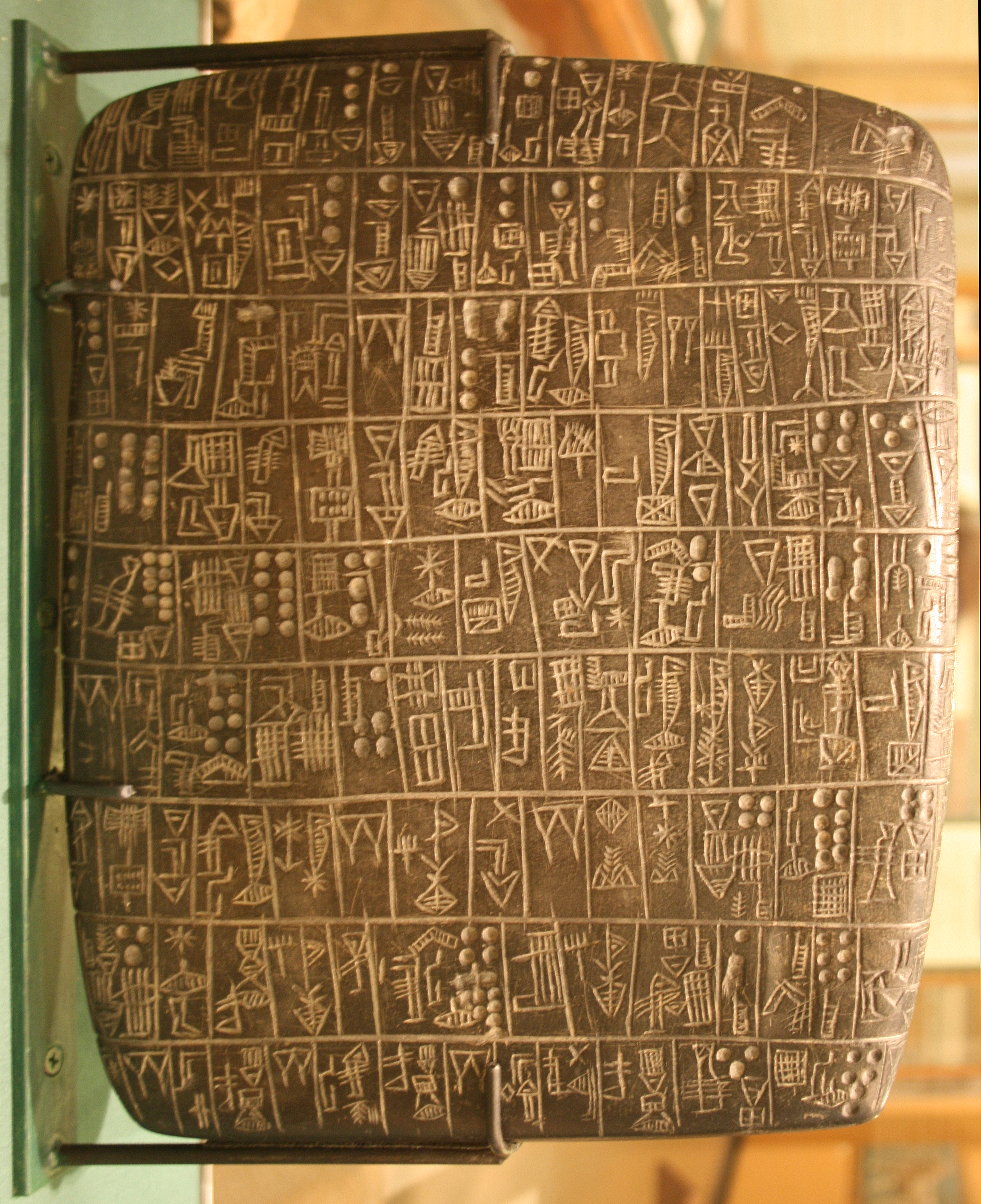

The Atomic Human pages:

- cuneiform 337, 360, 390

- embodiment factor 13, 29, 35, 79, 87, 105, 197, 216-217, 249, 269, 353, 369

- intellectual debt 84-85, 349, 365, 376

- separation of concerns 84-85, 103, 109, 199, 284, 371

- natural vs artificial systems 102-103

- Gilruth, Bob 190-192

- National Advisory Committee on Aeronautics (NACA) 163-168

- feedback loops 117-119, 122-130, 132-133, 140, 145, 152, 177, 180-181, 183-184, 206, 228, 231, 256-257, 263-264, 265, 329

- Horizon scandal 371

- feedback failure 163-168, 189-196, 211-213, 334-336, 340, 342-343, 365-366

- Blake, William Newton 121-123, 258, 260, 283, 284, 301, 306

- engineering complexity 198-204, 342-343, 365-366

- consultation challenges 340-341, 348-349, 351-352, 363-366, 369-370

- MONIAC 232-233, 266, 343

- MacKay, Donald, Behind the Eye 268-270, 316

- psychological representation 326-329, 344-345, 353, 361, 367

- human-analogue machine (HAMs) 343-347, 358-359, 365-368

- Royal Society; machine learning review and 25, 321, 395

podcast: The Talking Machines

newspaper: Guardian Profile Page

blog posts: