Revisiting the Revisiting of the Revisit of the 2014 NeurIPS Experiment

ELLIS Unconference

Introduction

NeurIPS in Numbers

- To review papers we had:

  - 1474 active reviewers (1133 in 2013)

  - 92 area chairs (67 in 2013)

  - 2 program chairs

NeurIPS in Numbers

- In 2014 NeurIPS had:

  - 1678 submissions

  - 414 accepted papers

  - 20 oral presentations

  - 62 spotlight presentations

  - 331 poster presentations

  - 19 papers rejected without review

The NeurIPS Experiment

- How consistent was the process of peer review?

- What would happen if you independently reran it?

The NeurIPS Experiment

- We selected ~10% of NeurIPS papers to be reviewed twice, independently.

- 170 papers were reviewed by two separate committees.

- Each committee was 1/2 the size of the full committee.

- Reviewers allocated at random

- Area Chairs allocated to ensure distribution of expertise

Timeline for NeurIPS

- Submission deadline 6th June

- three weeks for paper bidding and allocation

- three weeks for review

- two weeks for discussion and adding/augmenting reviews/reviewers

- one week for author rebuttal

- two weeks for discussion

- one week for teleconferences and final decisions

- one week cooling off

- Decisions sent 9th September

Paper Scoring and Reviewer Instructions

Quantitative Evaluation

10: Top 5% of accepted NIPS papers, a seminal paper for the ages.

I will consider not reviewing for NIPS again if this is rejected.

9: Top 15% of accepted NIPS papers, an excellent paper, a strong accept.

I will fight for acceptance.

8: Top 50% of accepted NIPS papers, a very good paper, a clear accept.

I vote and argue for acceptance.

7: Good paper, accept.

I vote for acceptance, although would not be upset if it were rejected.

6: Marginally above the acceptance threshold.

I tend to vote for accepting it, but leaving it out of the program would be no great loss.

5: Marginally below the acceptance threshold.

I tend to vote for rejecting it, but having it in the program would not be that bad.

4: An OK paper, but not good enough. A rejection.

I vote for rejecting it, although would not be upset if it were accepted.

3: A clear rejection.

I vote and argue for rejection.

2: A strong rejection. I’m surprised it was submitted to this conference.

I will fight for rejection.

1: Trivial or wrong or known. I’m surprised anybody wrote such a paper.

I will consider not reviewing for NIPS again if this is accepted.

Qualitative Evaluation

Quality

Clarity

Originality

Significance

Reviewer Calibration

Reviewer Calibration Model

\[ y_{i,j} = f_i + b_j + \epsilon_{i, j} \]

\[f_i \sim \mathcal{N}\left(0,\alpha_f\right)\quad b_j \sim \mathcal{N}\left(0,\alpha_b\right)\quad \epsilon_{i,j} \sim \mathcal{N}\left(0,\sigma^2\right)\]

Fitting the Model

- Sum of Gaussian random variables is Gaussian.

- Model is a joint Gaussian over the data.

- Fit three parameters by maximum likelihood: \(\alpha_f\), \(\alpha_b\), \(\sigma^2\)
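One way to make the joint Gaussian explicit, assuming \(f_i\), \(b_j\) and \(\epsilon_{i,j}\) are mutually independent (the slides do not state this, but the priors in the model suggest it): every score has zero mean, and two scores covary only through a shared paper or a shared reviewer.

\[ \operatorname{cov}\left(y_{i,j}, y_{k,l}\right) = \alpha_f \delta_{i,k} + \alpha_b \delta_{j,l} + \sigma^2 \delta_{i,k}\delta_{j,l} \]

Here \(\delta\) is the Kronecker delta. Stacking all scores into one vector gives a zero-mean Gaussian whose covariance matrix has these entries, and the likelihood of that Gaussian is what is maximised over the three variance parameters.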

NeurIPS 2014 Parameters

\[ \alpha_f = 1.28\] \[ \alpha_b = 0.24\] \[ \sigma^2 = 1.27\]
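As a rough check on what these values imply, the sketch below draws pairs of scores for the same paper from two different reviewers and measures their correlation. It treats \(\alpha_f\), \(\alpha_b\) and \(\sigma^2\) as variances, and the function names, sample size and seed are illustrative assumptions rather than anything taken from the original analysis. Reading \(\alpha_f / (\alpha_f + \sigma^2)\) as the "objective" share of a calibrated score is likewise an interpretation, not a definition from the slides.

```python
import math
import random

# Variances reported on the slide above (NeurIPS 2014 fit).
ALPHA_F = 1.28  # variance of the paper's underlying quality f_i
ALPHA_B = 0.24  # variance of the reviewer offset b_j
SIGMA2 = 1.27   # variance of the review-specific noise epsilon_{i,j}


def simulate_pair(rng):
    """Draw two scores for one paper from two different reviewers."""
    # random.gauss takes a standard deviation, hence the square roots.
    f = rng.gauss(0.0, math.sqrt(ALPHA_F))  # shared by both reviews
    scores = []
    for _ in range(2):
        b = rng.gauss(0.0, math.sqrt(ALPHA_B))   # reviewer-specific offset
        eps = rng.gauss(0.0, math.sqrt(SIGMA2))  # review-specific noise
        scores.append(f + b + eps)
    return scores[0], scores[1]


def correlation(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n
    var_x = sum((x - mean_x) ** 2 for x in xs) / n
    var_y = sum((y - mean_y) ** 2 for y in ys) / n
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(0)  # fixed seed so the run is repeatable
first, second = zip(*(simulate_pair(rng) for _ in range(50_000)))
empirical = correlation(first, second)

# Share of score variance due to paper quality, before and after
# removing the reviewer offset.
raw_share = ALPHA_F / (ALPHA_F + ALPHA_B + SIGMA2)
calibrated_share = ALPHA_F / (ALPHA_F + SIGMA2)

print(f"simulated correlation of raw scores: {empirical:.3f}")
print(f"alpha_f / (alpha_f + alpha_b + sigma^2): {raw_share:.3f}")
print(f"alpha_f / (alpha_f + sigma^2): {calibrated_share:.3f}")
```

The two ratios work out to about 0.459 and 0.502, and the simulated correlation should sit close to the first of them.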

Review Quality Prediction

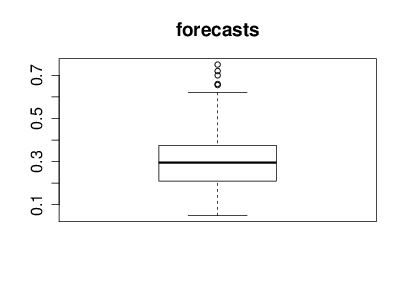

Monte Carlo Simulations for Probability of Acceptance

Correlation of Duplicate Papers

Correlation Plots

Subjectivity of Superiority

Conference Simulation

- Calibration model suggests score is roughly 50% subjective, 50% objective.

- Duplicate experiment backs this up with roughly 50% correlation.

Experiment

- Simulate conference scores which are 50% subjective/objective.

- Study statistics of conference.
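A minimal sketch of such a simulation, under assumptions that are not from the talk: each paper has a unit-variance score split evenly between a shared objective part and a committee-specific subjective part, two committees score every paper independently, and each accepts its top-scoring fraction. The function name `simulate_conference` and its parameters are hypothetical.

```python
import math
import random


def simulate_conference(n_papers, accept_rate, objective_share=0.5, seed=0):
    """Return the fraction of one committee's accepts that the other also accepts."""
    rng = random.Random(seed)
    sd_objective = math.sqrt(objective_share)
    sd_subjective = math.sqrt(1.0 - objective_share)

    # Objective quality is shared by both committees.
    quality = [rng.gauss(0.0, sd_objective) for _ in range(n_papers)]
    n_accept = max(1, round(accept_rate * n_papers))

    accepted = []
    for _ in range(2):
        # Each committee adds its own independent subjective component.
        scores = [q + rng.gauss(0.0, sd_subjective) for q in quality]
        ranked = sorted(range(n_papers), key=scores.__getitem__, reverse=True)
        accepted.append(set(ranked[:n_accept]))

    return len(accepted[0] & accepted[1]) / n_accept


# Consistency at several accept rates; purely random decisions would give
# an agreement equal to the accept rate itself.
agreement = {
    rate: simulate_conference(n_papers=20_000, accept_rate=rate)
    for rate in (0.05, 0.10, 0.25, 0.50)
}
for rate, value in agreement.items():
    print(f"accept rate {rate:.2f}: agreement {value:.2f} (random: {rate:.2f})")
```

Comparing each agreement figure with its accept rate shows how much consistency the process gains over chance at that accept rate.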

Consistency vs Accept Rate

Gain in Consistency

Where do Rejected Papers Go?

Impact of Papers Ten Years Six Months On

All Papers 2021

All Papers 2024

Accepted Papers 2021

Accepted Papers 2024

Rejected Papers 2021

Rejected Papers 2024

Accepted Papers 2021

Accepted Papers 2024

Accepted Papers 2021

Accepted Papers 2024

My Conclusion

- Inconsistent errors are better than consistent errors

- NeurIPS and Impractical Knives

Appendix

Speculation

- To check public opinion before experiment: scicast question

NeurIPS Experiment Results

Table: Results from the two committees as a confusion matrix. Four papers were rejected or withdrawn without review.

| | | Committee 1 | |
|---|---|---|---|
| | | Accept | Reject |
| Committee 2 | Accept | 22 | 22 |
| | Reject | 21 | 101 |
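The headline statistics can be read off the confusion matrix with a few lines of arithmetic. This is a small sketch using only the four counts in the table; the variable names are illustrative.

```python
# Counts from the table above, keyed as (committee 2 decision, committee 1 decision).
counts = {
    ("accept", "accept"): 22,
    ("accept", "reject"): 22,
    ("reject", "accept"): 21,
    ("reject", "reject"): 101,
}

total = sum(counts.values())

# Papers on which the two committees disagreed.
disagree = counts[("accept", "reject")] + counts[("reject", "accept")]
disagreement_rate = disagree / total

# Accept decisions made by each committee.
accepted_by_c1 = counts[("accept", "accept")] + counts[("reject", "accept")]
accepted_by_c2 = counts[("accept", "accept")] + counts[("accept", "reject")]

# Of all accept decisions, the share the other committee did not match.
overturned_share = disagree / (accepted_by_c1 + accepted_by_c2)

print(f"papers with two decisions: {total}")
print(f"disagreements: {disagree} ({disagreement_rate:.1%})")
print(f"accepts not matched by the other committee: {overturned_share:.1%}")
```

So the committees disagreed on 43 of 166 papers, and roughly half of the accept decisions were not matched by the other committee.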

Reaction After Experiment

Public reaction after experiment documented here

Open Data Science (see Heidelberg Meeting)

NIPS was run in a very open way. Code and blog posts all available!

Reaction triggered by this blog post.

NeurIPS 2021 Experiment

- Experiment repeated in 2021 by Beygelzimer et al. (2023).

- Conference five times bigger.

- Broadly the same results.

A Random Committee @ 25%

Table: Expected values for the confusion matrix if each committee were making decisions entirely at random.

| | | Committee 1 | |
|---|---|---|---|
| | | Accept | Reject |
| Committee 2 | Accept | 10.4 (1 in 16) | 31.1 (3 in 16) |
| | Reject | 31.1 (3 in 16) | 93.4 (9 in 16) |
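The expected counts follow from two committees each accepting independently with probability 0.25. The sketch below assumes a base of 166 papers (the 170 duplicated papers minus the four rejected or withdrawn without review); that base is an inference, chosen because it reproduces the values in the table. The function name is hypothetical.

```python
def expected_random_counts(n_papers, accept_rate):
    """Expected confusion-matrix cells for two independent random committees."""
    p = accept_rate
    return {
        "both_accept": n_papers * p * p,        # 1 in 16 when p = 0.25
        "one_accepts": n_papers * p * (1 - p),  # each off-diagonal cell, 3 in 16
        "both_reject": n_papers * (1 - p) ** 2, # 9 in 16
    }


expected = expected_random_counts(n_papers=166, accept_rate=0.25)
for cell, value in expected.items():
    print(f"{cell}: {value:.1f}")
```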

NeurIPS Experiment Results

| | | Committee 1 | |
|---|---|---|---|
| | | Accept | Reject |
| Committee 2 | Accept | 22 | 22 |
| | Reject | 21 | 101 |

A Random Committee @ 25%

| | | Committee 1 | |
|---|---|---|---|
| | | Accept | Reject |
| Committee 2 | Accept | 10 | 31 |
| | Reject | 31 | 93 |

Thanks!

- book: The Atomic Human

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com