Information Engines

Exploring Connections Between Intelligence and Thermodynamics

Neil D. Lawrence

Departmental Seminar, Department of Computer Science, University of Manchester

Hydrodynamica

Entropy Billiards

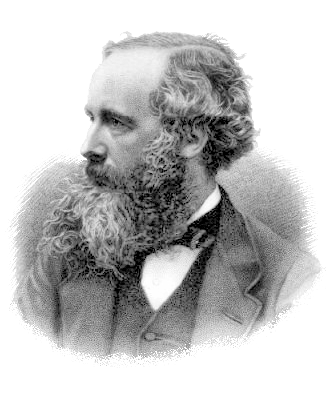

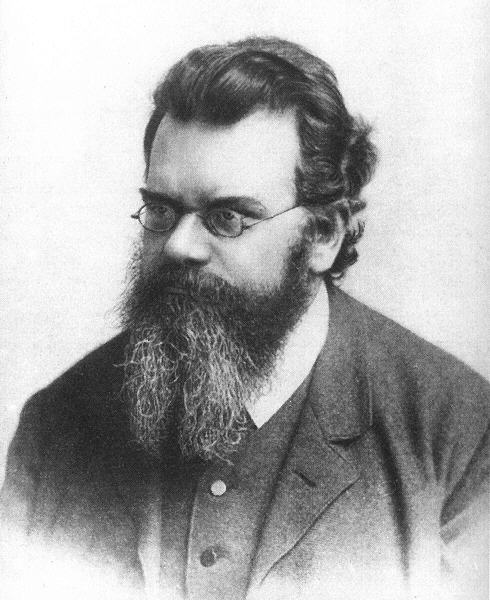

Maxwell’s Demon

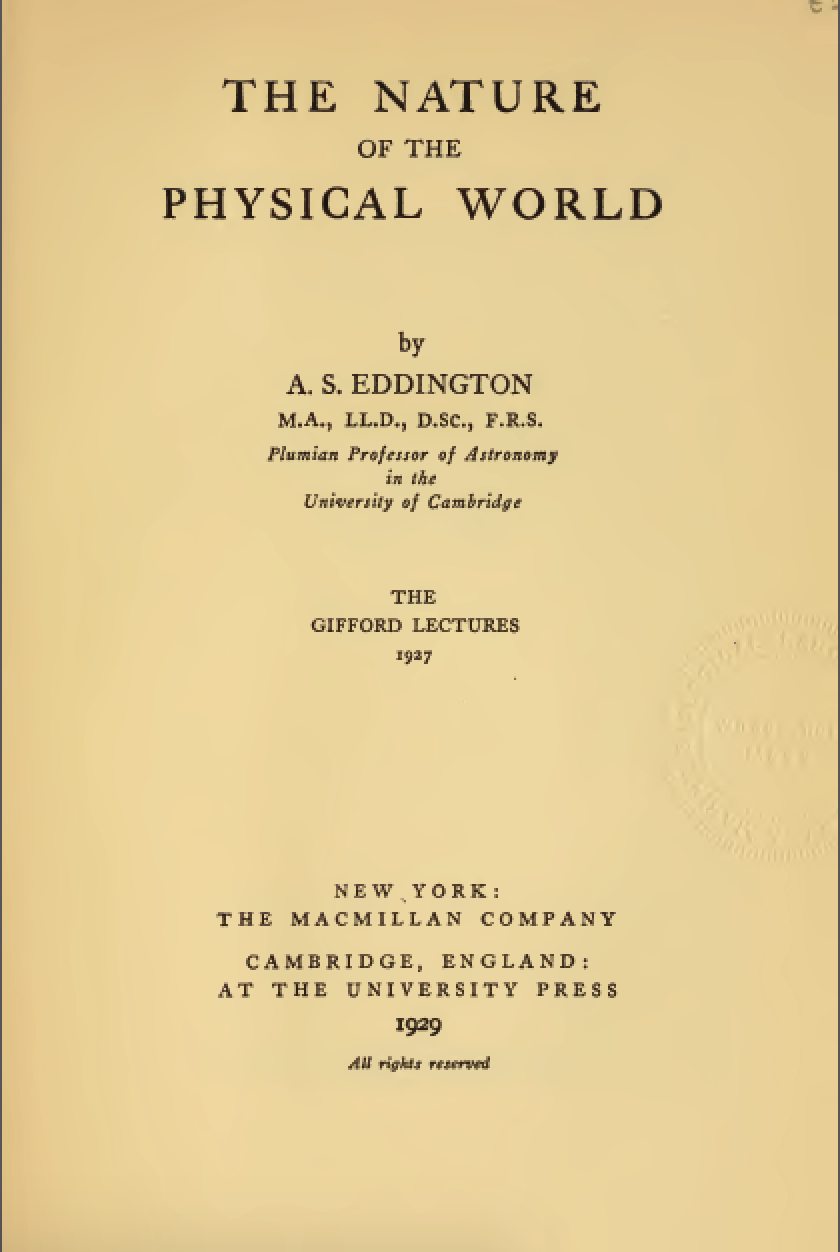

Information Theory and Thermodynamics

- Information theory quantifies uncertainty and information

- Core concepts inspired by thermodynamic ideas

- Information entropy \(\leftrightarrow\) Thermodynamic entropy

- Free energy minimization common in both domains

Entropy

Entropy \[ S(X) = -\sum_X \rho(X) \log \rho(X) \]

In thermodynamics preceded by Boltzmann’s constant, \(k_B\)
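The entropy definition above can be evaluated directly for a discrete distribution. The following is a minimal sketch, not code from the talk; the function name `entropy` and the example distributions are illustrative assumptions.

```python
import math


def entropy(probs, base=2.0):
    """Shannon entropy -sum_x rho(x) log rho(x) of a discrete distribution."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Terms with rho(x) = 0 contribute nothing (0 log 0 is taken as 0).
    return -sum(p * math.log(p, base) for p in probs if p > 0)


# Hypothetical examples: four equally likely states vs. one certain state.
uniform = entropy([0.25, 0.25, 0.25, 0.25])
certain = entropy([1.0, 0.0, 0.0, 0.0])
print(uniform, certain)
```

With `base=2` the result is in bits; using natural logarithms and multiplying by \(k_B\) would give the thermodynamic form.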

Exponential Family

- Exponential family: \[ \rho(Z) = h(Z) \exp\left(\boldsymbol{\theta}^\top T(Z) - A(\boldsymbol{\theta})\right) \]

- Entropy is, \[ S(Z) = A(\boldsymbol{\theta}) - E_\rho\left[\boldsymbol{\theta}^\top T(Z) + \log h(Z)\right] \]

Where \[ E_\rho\left[T(Z)\right] = \nabla_\boldsymbol{\theta}A(\boldsymbol{\theta}) \] because \(A(\boldsymbol{\theta})\) is the log-partition function, which operates as a cumulant generating function for \(\rho(Z)\).
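As a sanity check, these identities can be verified numerically for the simplest exponential family member. The sketch below assumes a Bernoulli variable with \(T(z) = z\), \(h(z) = 1\) and \(A(\theta) = \log(1 + e^\theta)\); the function names are illustrative, not from the talk.

```python
import math


def log_partition(theta):
    """A(theta) = log(1 + exp(theta)) for a Bernoulli variable."""
    return math.log1p(math.exp(theta))


def mean_T(theta):
    """E[T(Z)] = P(Z = 1), the logistic function of theta."""
    return 1.0 / (1.0 + math.exp(-theta))


def entropy_from_family(theta):
    """S = A(theta) - theta * E[T(Z)], since log h(Z) = 0 here."""
    return log_partition(theta) - theta * mean_T(theta)


def entropy_direct(p):
    """Two-state entropy in nats, computed from the probabilities."""
    return -p * math.log(p) - (1 - p) * math.log(1 - p)


theta = 0.7  # arbitrary natural parameter chosen for the check
eps = 1e-6
# Central finite difference approximation to dA/dtheta.
numeric_grad = (log_partition(theta + eps) - log_partition(theta - eps)) / (2 * eps)
p = mean_T(theta)
print(numeric_grad, p)
print(entropy_from_family(theta), entropy_direct(p))
```

Both printed pairs should agree to numerical precision: the gradient of the log-partition function gives the expected sufficient statistic, and the exponential family expression for the entropy matches the direct sum.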

Available Energy

- Available energy: \[ A(\boldsymbol{\theta}) \]

- Internal energy: \[ U(\boldsymbol{\theta}) = A(\boldsymbol{\theta}) + T S(\boldsymbol{\theta}) \]

- Traditional relationship \[ A = U - TS \]

- Legendre transformation of entropy

Work through Measurement

- Split system \(Z\) into two parts:

  - Variables \(X\) - stochastically evolving

  - Memory \(M\) - low entropy partition

Joint Entropy Decomposition

Joint entropy can be decomposed \[ S(Z) = S(X,M) = S(X|M) + S(M) = S(X) - I(X;M) + S(M) \]

Mutual information \(I(X;M)\) connects information and energy
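The decomposition can be checked on a small joint table. This is a sketch under assumed numbers: the joint distribution `joint` over binary \(X\) and \(M\) is hypothetical and chosen only so that the two variables are correlated.

```python
import math


def H(probs):
    """Entropy in bits of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def marginal(joint, axis):
    """Sum the joint table over the other variable."""
    out = {}
    for key, p in joint.items():
        out[key[axis]] = out.get(key[axis], 0.0) + p
    return out


# Hypothetical joint distribution rho(x, m): memory agrees with X 80% of the time.
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}

rho_x = marginal(joint, 0)
rho_m = marginal(joint, 1)

S_joint = H(joint.values())
S_x = H(rho_x.values())
S_m = H(rho_m.values())
S_x_given_m = S_joint - S_m  # chain rule: S(X|M) = S(X,M) - S(M)

# Mutual information computed from its definition, independently of the chain rule.
I_xm = sum(
    p * math.log2(p / (rho_x[x] * rho_m[m]))
    for (x, m), p in joint.items()
    if p > 0
)

print(S_joint, S_x - I_xm + S_m)
```

The two printed values agree, confirming \(S(X,M) = S(X) - I(X;M) + S(M)\) for this table.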

Measurement and Available Energy

Measurement changes system entropy by \(-I(X;M)\)

Increases available energy

Difference in available energy (in units of \(k_BT\)): \[ \Delta A = A(X|M) - A(X) = I(X;M) \]

Can recover \(k_B T \cdot I(X;M)\) in work from the system

Information to Work Conversion

- Maxwell’s demon thought experiment in practice

- Information gain \(I(X;M)\) can be converted to work

- Maximum extractable work: \(W_{max} = k_B T \cdot I(X;M)\)

- Measurement creates a non-equilibrium state

- Information is a physical resource
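To give a sense of magnitude, the bound \(W_{max} = k_B T \cdot I(X;M)\) can be evaluated for a single bit of information. In the sketch below the function name and the 300 K temperature are illustrative assumptions. The formula takes \(I(X;M)\) in nats, so a value in bits is multiplied by \(\ln 2\).

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def max_work_joules(info_bits, temperature_kelvin):
    """Upper bound k_B * T * I on extractable work, with I converted from bits to nats."""
    info_nats = info_bits * math.log(2)
    return K_B * temperature_kelvin * info_nats


# Hypothetical case: one bit of mutual information at room temperature (300 K).
w_one_bit = max_work_joules(1.0, 300.0)
print(f"{w_one_bit:.3e} J")
```

At 300 K this comes to roughly \(2.9 \times 10^{-21}\) joules per bit, the same \(k_B T \ln 2\) scale that appears in the Szilard engine and in Landauer's bound.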

The Animal Game

- Intelligence as optimal uncertainty reduction

- 20 Questions game as intuitive example

- Binary search exemplifies optimal strategy

- Information gain measures question quality

- Wordle as a more complex example

The 20 Questions Paradigm

Entropy Reduction and Decisions

- Entropy before question: \(S(X)\)

- Entropy after answer: \(S(X|M)\)

- Information gain: \(I(X;M) = S(X) - S(X|M)\)

- Optimal decisions maximise \(I(X;M)\) per unit cost
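The information gain of a yes/no question can be computed directly from these definitions. The sketch below is illustrative only: the list of animals, the uniform prior and the two candidate questions are assumptions made for the example.

```python
import math


def entropy_bits(probs):
    """Entropy in bits of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def information_gain(prior, yes_set):
    """I(X;M) = S(X) - S(X|M) for a question answered 'yes' on yes_set."""
    s_before = entropy_bits(prior.values())
    s_after = 0.0
    for answer_is_yes in (True, False):
        members = [c for c in prior if (c in yes_set) == answer_is_yes]
        p_answer = sum(prior[c] for c in members)
        if p_answer > 0:
            posterior = [prior[c] / p_answer for c in members]
            # Weight each posterior entropy by the probability of that answer.
            s_after += p_answer * entropy_bits(posterior)
    return s_before - s_after


# Hypothetical game: eight equally likely animals (3 bits of initial uncertainty).
animals = ["cat", "dog", "eagle", "trout", "frog", "ant", "whale", "bat"]
prior = {a: 1.0 / len(animals) for a in animals}

halving = information_gain(prior, {"cat", "dog", "whale", "bat"})  # "Is it a mammal?"
narrow = information_gain(prior, {"trout"})                        # "Is it a trout?"
print(halving, narrow)
```

The question that splits the candidates in half gains one full bit, while the narrow guess gains well under a bit on average, which is why binary search is the optimal strategy when all questions cost the same.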

Thermodynamic Parallels

- Intelligence requires work to reduce uncertainty

- Thermodynamic work reduces physical entropy

- Both operate under resource constraints

- Both bound by fundamental efficiency limits

Information Engines: Intelligence as Energy Efficiency

- Information can be converted to available energy

- Simple systems that exploit this are “information engines”

- This provides our first model of intelligence

Measurement as a Thermodynamic Process: Information-Modified Second Law

- Measurement is a thermodynamic process

- Maximum extractable work: \(W_\text{ext} \leq -\Delta\mathcal{F} + k_BTI(X;M)\)

- Information acquisition creates work potential

Here the mutual information is \[ I(X;M) = \sum_{x,m} \rho(x,m) \log \frac{\rho(x,m)}{\rho(x)\rho(m)} \]

Efficacy of Feedback Control

Channel Coding Perspective on Memory

- Memory acts as an information channel

- Channel capacity limited by memory size: \(C \leq n\) bits for an \(n\)-bit memory

- Relates to Ashby’s Law of Requisite Variety and the information bottleneck
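The capacity bound follows from standard entropy inequalities. As a short worked step, assume that the memory \(M\) takes values in a set \(\mathcal{M}\) of \(2^n\) states (the symbol \(\mathcal{M}\) is introduced here for illustration) and that entropies are measured in bits: \[ I(X;M) = S(M) - S(M|X) \leq S(M) \leq \log_2 |\mathcal{M}| = n \]

The first inequality holds because the conditional entropy \(S(M|X)\) is non-negative for discrete variables, and the second because the uniform distribution maximizes entropy over a finite set. Whatever the measurement strategy, the memory cannot carry more than \(n\) bits about \(X\).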

Decomposition into Past and Future

Model Approximations and Thermodynamic Efficiency

- Perfect models require infinite resources

- Intelligence balances measurement against energy efficiency

- Bounded rationality as thermodynamic necessity

Markov Blanket

- Split system into past/present (\(X_0\)) and future (\(X_1\))

- Memory \(M\) creates Markov separation when \(I(X_0;X_1|M) = 0\)

- Efficient memory minimizes information loss

At What Scales Does this Apply?

- Equipartition theorem: \(k_BT/2\) of energy per degree of freedom

- Information storage is a small perturbation in large systems

- Most relevant at microscopic scales
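A rough comparison makes the scale argument concrete. The sketch below assumes a monatomic ideal gas with three translational degrees of freedom per particle and compares its thermal energy with the \(k_B T \ln 2\) associated with one bit. The function names and the choice of 300 K are illustrative.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant in J/K
N_A = 6.02214076e23  # Avogadro constant in 1/mol


def thermal_energy(n_dof, temperature_kelvin):
    """Equipartition energy: k_B * T / 2 per degree of freedom."""
    return 0.5 * n_dof * K_B * temperature_kelvin


def bit_energy(temperature_kelvin):
    """Energy scale k_B * T * ln 2 associated with one bit of information."""
    return K_B * temperature_kelvin * math.log(2)


T = 300.0
# Assumption: one mole of monatomic ideal gas, translational degrees of freedom only.
ratio_mole = bit_energy(T) / thermal_energy(3 * N_A, T)
# A single particle with the same three degrees of freedom.
ratio_single = bit_energy(T) / thermal_energy(3, T)
print(f"{ratio_mole:.2e}", f"{ratio_single:.2f}")
```

For a mole of gas, one bit is a perturbation of order \(10^{-24}\) of the thermal energy. For a single particle it is a sizeable fraction, which is why the effect matters mainly at microscopic scales.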

Small-Scale Biochemical Systems and Information Processing

- Microscopic biological systems operate where information matters

- Molecular machines exploit thermal fluctuations

- Information processing enables work extraction

Molecular Machines as Information Engines

- ATP synthase, kinesin, photosynthetic apparatus

- Convert environmental information to useful work

- Example: ATP synthase uses ~3-4 protons per ATP

ATP Synthase: Nature’s Rotary Engine

Jaynes’ World

- Zero-player game implementing entropy game

- Distribution \(\rho(Z)\) over state space \(Z\)

- State space partitioned into observables \(X\) and memory \(M\)

- Entropy bounded: \(0 \leq S(Z) \leq N\)

Jaynes’ World

Unlike animal game (which reduces entropy), Jaynes’ World maximizes entropy

System evolves by ascending the entropy gradient \(S(Z)\)

Animal game: max uncertainty → min uncertainty

Jaynes’ World: min uncertainty → max uncertainty

Thought experiment: looking backward from any point

Game appears to come from minimal entropy configuration (“origin”)

Game appears to move toward maximal entropy configuration (“end”)

\[ \rho(Z) = h(Z) \exp(\boldsymbol{\theta}^\top T(Z) - A(\boldsymbol{\theta})), \] where \(h(Z)\) is the base measure, \(T(Z)\) are the sufficient statistics, \(A(\boldsymbol{\theta})\) is the log-partition function, and \(\boldsymbol{\theta}\) are the natural parameters of the distribution.

Exponential Family

- Jaynes showed that entropy optimization leads to exponential family distributions. \[\rho(Z) = h(Z) \exp(\boldsymbol{\theta}^\top T(Z) - A(\boldsymbol{\theta}))\]

- \(h(Z)\): base measure

- \(T(Z)\): sufficient statistics

- \(A(\boldsymbol{\theta})\): log-partition function

- \(\boldsymbol{\theta}\): natural parameters

Information Geometry

- System evolves within information geometry framework

- Entropy gradient (for a constant base measure \(h(Z)\)) \[ \nabla_{\boldsymbol{\theta}}S(Z) = \mathbf{g} = -\nabla^2_\boldsymbol{\theta} A(\boldsymbol{\theta}(M))\,\boldsymbol{\theta}(M) \]

Fisher Information Matrix

- Fisher information matrix \[ G(\boldsymbol{\theta}) = \nabla^2_{\boldsymbol{\theta}} A(\boldsymbol{\theta}) = \text{Cov}[T(Z)] \]

- Important: We use gradient ascent, not natural gradient

Gradient Ascent

- Gradient step \[ \Delta \boldsymbol{\theta} \propto \mathbf{g} \]

- Natural gradient step \[ \Delta \boldsymbol{\theta} \propto G(\boldsymbol{\theta})^{-1} \mathbf{g} \]

Markovian Decomposition

\(X\) divided into past/present \(X_0\) and future \(X_1\)

Conditional mutual information: \[ I(X_0; X_1 | M) = \sum_{x_0,x_1,m} \rho(x_0,x_1,m) \log \frac{\rho(x_0,x_1|m)}{\rho(x_0|m)\rho(x_1|m)} \]

Measures dependency between past and future given memory state

Perfect Markovianity: \(I(X_0; X_1 | M) = 0\)

Memory variables capture all dependencies between past and future
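The conditional mutual information can be computed from a joint table to see both extremes. The two joint distributions in this sketch are hypothetical. In the first, past and future are noisy copies of the memory and hence conditionally independent. In the second, the memory is unrelated to \(X\) and the future simply copies the past.

```python
import math
from itertools import product


def conditional_mi(joint):
    """I(X0; X1 | M) in bits for a joint table {(x0, x1, m): probability}."""
    p_m, p_x0m, p_x1m = {}, {}, {}
    for (x0, x1, m), p in joint.items():
        p_m[m] = p_m.get(m, 0.0) + p
        p_x0m[(x0, m)] = p_x0m.get((x0, m), 0.0) + p
        p_x1m[(x1, m)] = p_x1m.get((x1, m), 0.0) + p
    total = 0.0
    for (x0, x1, m), p in joint.items():
        if p > 0:
            # rho(x0,x1|m) / (rho(x0|m) rho(x1|m)) = rho(x0,x1,m) rho(m) / (rho(x0,m) rho(x1,m))
            total += p * math.log2(p * p_m[m] / (p_x0m[(x0, m)] * p_x1m[(x1, m)]))
    return total


# Case 1 (assumed): X0 and X1 each equal M with probability 0.9, independently given M.
agree = {0: 0.9, 1: 0.1}  # probability indexed by x XOR m
markov = {
    (x0, x1, m): 0.5 * agree[x0 ^ m] * agree[x1 ^ m]
    for x0, x1, m in product((0, 1), repeat=3)
}

# Case 2 (assumed): M is independent of X, and X1 is an exact copy of X0.
uninformative = {(x, x, m): 0.25 for x in (0, 1) for m in (0, 1)}

i_markov = conditional_mi(markov)
i_uninformative = conditional_mi(uninformative)
print(i_markov, i_uninformative)
```

In the first case the memory screens off the past from the future and the conditional mutual information vanishes. In the second a full bit of dependency remains that the memory fails to capture.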

Tension between Markovianity and minimal entropy creates uncertainty principle

System Evolution

Start State

- Low entropy, near lower bound

- Highly structured information in \(M\)

- Strong temporal dependencies (high non-Markovian component)

- Precise values for \(\boldsymbol{\theta}\), with uncertainty in other parameter characteristics

- Uncertainty principle balances precision vs. capacity

End State

- Maximum entropy, approaching upper bound \(N\)

- Zeno’s paradox: \(\nabla_{\boldsymbol{\theta}}S \approx 0\)

- Primarily Markovian dynamics

- Steady state with no further entropy increase possible

Key Point

- Both minimal and maximal entropy distributions belong to exponential family

- This is a direct consequence of Jaynes’ entropy optimization principle

- System evolves by gradient ascent in natural parameters

- Uncertainty principle governs the balance between precision and capacity

Histogram Game

Four Bin Histogram Entropy Game

Two-Bin Histogram Example

- Simplest example: Two-bin system

- States represented by probability \(p\) (with \(1-p\) in second bin)

Entropy

- Entropy \[ S(p) = -p\log p - (1-p)\log(1-p) \]

- Maximum entropy at \(p = 0.5\)

- Minimal entropy at \(p = 0\) or \(p = 1\)
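The entropy gradient in the natural parameter follows from the chain rule. As a worked step, assume the standard parameterization of the two-bin system as an exponential family with natural parameter \(\theta = \log\frac{p}{1-p}\) and log-partition function \(A(\theta) = \log(1 + e^\theta)\): \[ \frac{\text{d}p}{\text{d}\theta} = p(1-p), \qquad \frac{\text{d}S}{\text{d}p} = \log(1-p) - \log p = -\theta \] \[ \frac{\text{d}S}{\text{d}\theta} = \frac{\text{d}S}{\text{d}p}\frac{\text{d}p}{\text{d}\theta} = p(1-p)\left(\log(1-p) - \log p\right) = -p(1-p)\,\theta \]

The Fisher information is \(G(\theta) = \frac{\text{d}^2 A}{\text{d}\theta^2} = p(1-p)\), so dividing the gradient by \(G(\theta)\) leaves \(-\theta\). The gradient vanishes at \(\theta = 0\), i.e. \(p = 0.5\), and is also suppressed near \(p = 0\) or \(p = 1\) where \(p(1-p)\) is small.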

Natural Gradients vs Steepest Ascent

- Steepest ascent step \[ \Delta \theta_{\text{steepest}} = \eta \frac{\text{d}S}{\text{d}\theta} = \eta p(1-p)(\log(1-p) - \log p) \]

- Fisher information \[ G(\theta) = p(1-p) \]

- Natural gradient step \[ \Delta \theta_{\text{natural}} = \eta(\log(1-p) - \log p) \]
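The two update rules can be compared with a short simulation. This is a sketch under assumed settings, not the code behind the talk's figures: the starting point \(\theta_0 = 6\), the step size \(\eta = 0.1\) and the 50 iterations are arbitrary choices, and \(\theta = \log\frac{p}{1-p}\) is assumed as the natural parameter.

```python
import math


def sigmoid(theta):
    """Map the natural parameter theta = log(p / (1 - p)) back to p."""
    return 1.0 / (1.0 + math.exp(-theta))


def entropy(p):
    """Two-bin entropy in nats."""
    return -p * math.log(p) - (1 - p) * math.log(1 - p)


def steepest_step(theta, eta):
    """theta + eta * dS/dtheta, with dS/dtheta = p(1-p)(log(1-p) - log p)."""
    p = sigmoid(theta)
    return theta + eta * p * (1 - p) * (math.log(1 - p) - math.log(p))


def natural_step(theta, eta):
    """theta + eta * G^{-1} dS/dtheta, where G = p(1-p) cancels the prefactor."""
    p = sigmoid(theta)
    return theta + eta * (math.log(1 - p) - math.log(p))


def run(step, theta0, eta, n_steps):
    """Return the entropy after each update, including the starting value."""
    theta = theta0
    path = [entropy(sigmoid(theta))]
    for _ in range(n_steps):
        theta = step(theta, eta)
        path.append(entropy(sigmoid(theta)))
    return path


theta0 = 6.0  # assumed low-entropy start, p close to 1
steepest = run(steepest_step, theta0, eta=0.1, n_steps=50)
natural = run(natural_step, theta0, eta=0.1, n_steps=50)
print(steepest[-1], natural[-1])
```

Both paths increase entropy monotonically, but steepest ascent barely moves from the low-entropy start because the factor \(p(1-p)\) is tiny there, while the natural gradient removes that factor and approaches the maximum \(\log 2\) quickly.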

Four-Bin Saddle Point Example

- Four-bin system creates 3D parameter space

- Saddle points appear where:

  - Gradient is zero

  - Some directions increase entropy

  - Other directions decrease entropy

- Information reservoirs form in critically slowed directions

Saddle Point Example

Saddle Points

Saddle Point Seeking Behaviour

Gradient Flow and Least Action Principles

- Steepest ascent of entropy ≈ Path of least action

- System follows geodesics in information geometry

Information-Theoretic Action

- Action integral \[ \mathcal{A} = \int L(\theta, \dot{\theta}) \text{d}t \]

- Information-theoretic Lagrangian \[ L = \frac{1}{2}\dot{\theta}^\top G(\theta)\dot{\theta} - S(\theta) \]

- cf. Frieden (1998)

Gradient Flow and Least Action Path

Uncertainty Principle

- Information reservoir variables (\(M\)) map to natural parameters \(\boldsymbol{\theta}(M)\)

- Challenge: Need both precision in parameters and capacity for information

Capacity \(\leftrightarrow\) Precision Paradox

- Fundamental trade-off emerges:

  - \(\Delta\boldsymbol{\theta}(M) \cdot \Delta c(M) \geq k\)

- Cannot simultaneously have perfect precision and maximum capacity

Quantum vs Classical Information Reservoirs

- Near origin: “Quantum-like” information processing

  - Wave-like encoding, non-local correlations

  - Uncertainty principle nearly saturated

- Higher entropy: Transition to “classical” behavior

  - From wave-like to particle-like information storage

  - Local rather than distributed encoding

Visualising the Parameter-Capacity Uncertainty Principle

- Uncertainty principle: \(\Delta\theta \cdot \Delta c \geq k\)

- Minimal uncertainty states form ellipses in phase space

- Quantum-like properties emerge from information constraints

- Different uncertainty states visualized as probability distributions

Visualisation of the Uncertainty Principle

Conceptual Framework

Conclusion

Unifying Perspectives on Intelligence

- Intelligence through multiple lenses:

  - Entropy game: Intelligence as optimal questioning

  - Information engines: Intelligence as energy-efficient computation

  - Least action: Intelligence as path optimization

  - Schrödinger’s bridge: Intelligence as probability transport

  - Jaynes’ World: An initial attempt to bridge these different views

A Unified View of Intelligence Through Information

Converging perspectives on intelligence:

- Efficient entropy reduction (Entropy Game)

- Energy-efficient information processing (Information Engines)

- Path optimization in information space (Least Action)

- Optimal probability transport (Schrödinger’s Bridge)

Unified core: Intelligence as optimal information processing

Implications:

- Fundamental limits on intelligence

- New metrics for AI systems

- Principled approach to cognitive modeling

- Information-theoretic approaches to learning

Research Directions

- Open questions:

  - Information-theoretic intelligence metrics

  - Physical limits of intelligent systems

  - Connections to quantum information theory

  - Practical algorithms based on these principles

  - Biological implementations of information engines

- Applications:

  - Active learning systems

  - Energy-efficient AI

  - Robust decision-making under uncertainty

  - Cognitive architectures

Thanks!

- book: The Atomic Human

- twitter: @lawrennd

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com