AI and Security: From Bandwidth to Practical Implications

Understanding the evolution of security challenges in the age of AI

Neil D. Lawrence

Trent.AI Offsite

Information Theory and AI

- Claude Shannon developed information theory at Bell Labs

- Information measured in bits, separated from context

- Makes information fungible and comparable

Information Transfer Rates

- Humans speaking: ~2,000 bits per minute

- Machines communicating: ~600 billion bits per minute

- Machines share information 300 million times faster than humans

|

|

|

|

|

|

bits/min

|

billions

|

2000

|

6

|

|

billion

calculations/s |

~100

|

a billion

|

a billion

|

|

embodiment

|

20 minutes

|

5 billion years

|

15 trillion years

|

Communication Bandwidth

- Human communication: walking pace (2000 bits/minute)

- Machine communication: light speed (billions of bits/second)

- Our sharing walks, machine sharing …

New Flow of Information

Evolved Relationship

Evolved Relationship

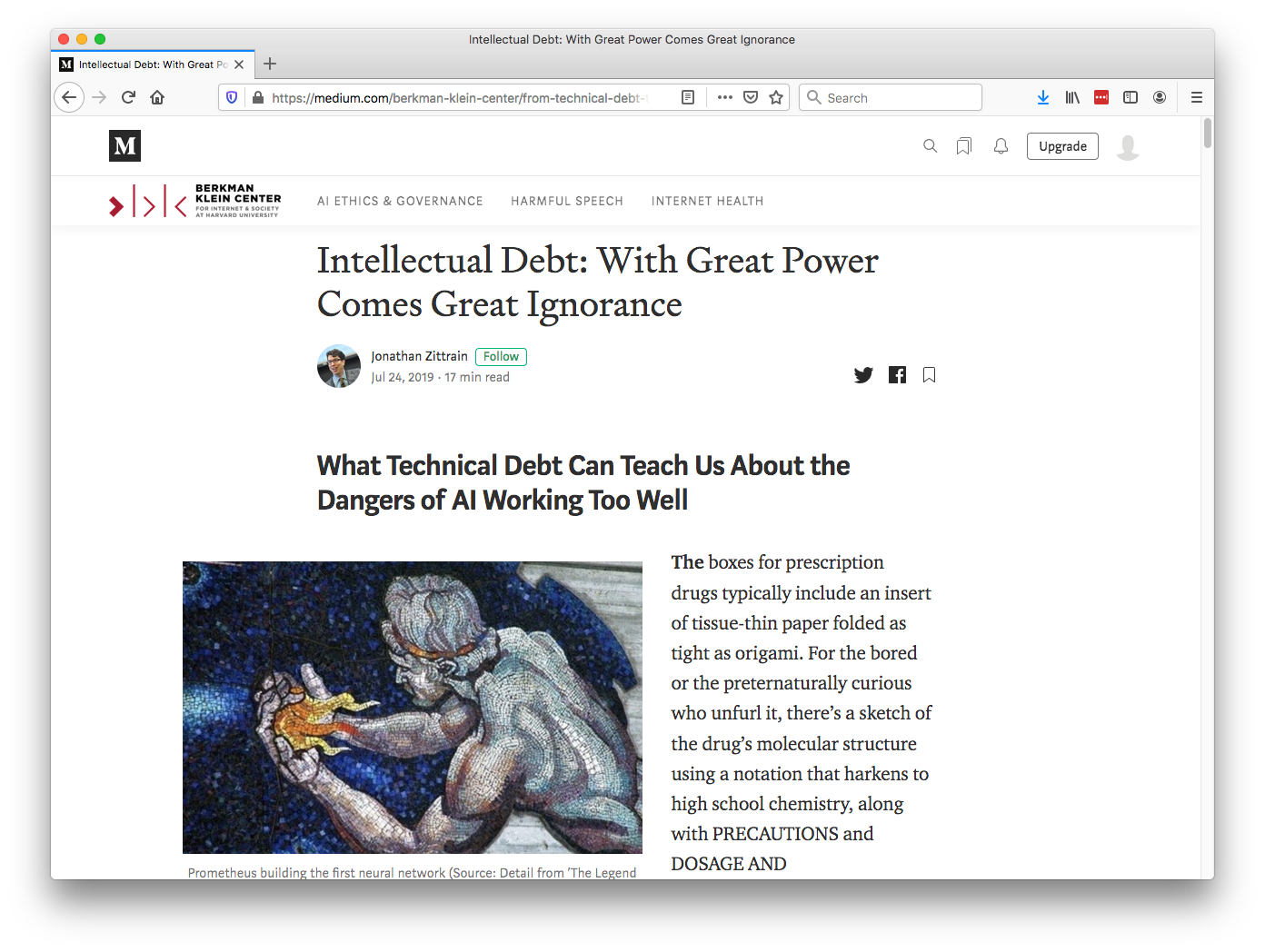

Intellectual Debt

Technical Debt

- Compare with technical debt.

- Highlighted by Sculley et al. (2015).

Lean Startup Methodology

The Mythical Man-month

.jpg)

Separation of Concerns

Intellectual Debt

Technical debt is the inability to maintain your complex software system.

Intellectual debt is the inability to explain your software system.

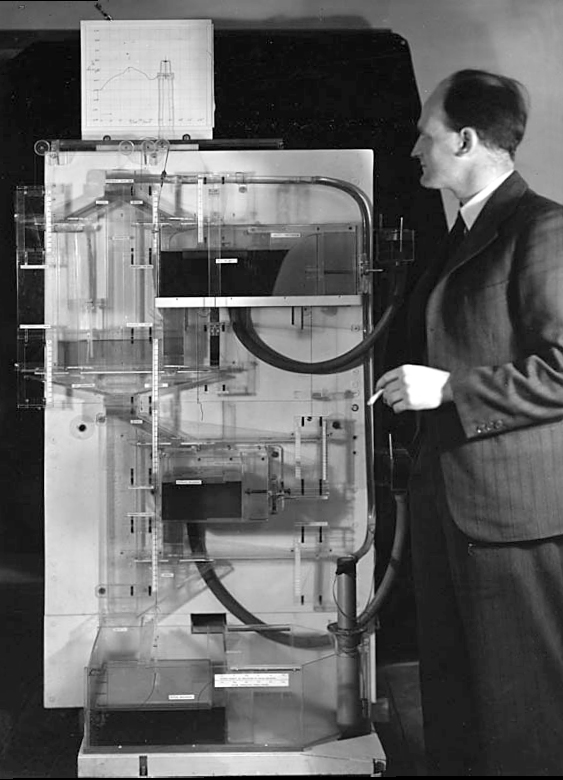

The MONIAC

Donald MacKay

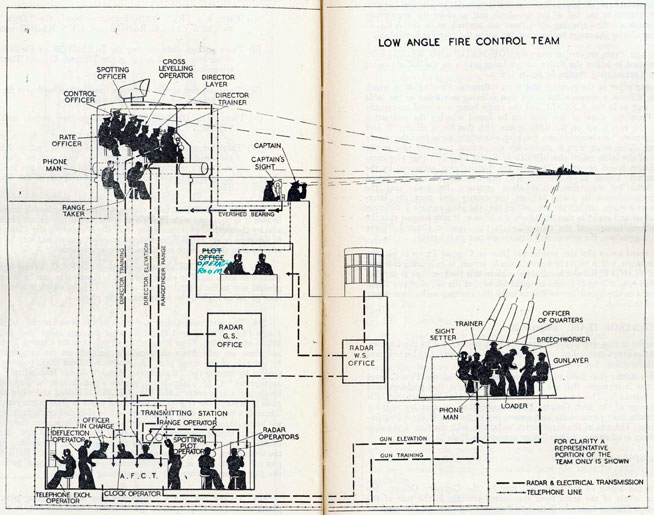

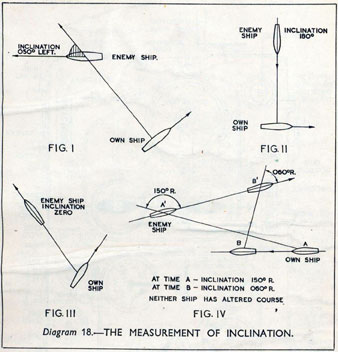

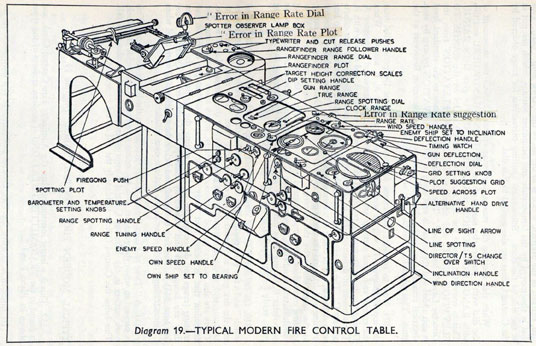

Fire Control Systems

Behind the Eye

Later in the 1940’s, when I was doing my Ph.D. work, there was much talk of the brain as a computer and of the early digital computers that were just making the headlines as “electronic brains.” As an analogue computer man I felt strongly convinced that the brain, whatever it was, was not a digital computer. I didn’t think it was an analogue computer either in the conventional sense.

Human Analogue Machine

Human Analogue Machine

A human-analogue machine is a machine that has created a feature space that is analagous to the “feature space” our brain uses to reason.

The latest generation of LLMs are exhibiting this charateristic, giving them ability to converse.

Heider and Simmel (1944)

Counterfeit People

- Perils of this include counterfeit people.

- Daniel Dennett has described the challenges these bring in an article in The Atlantic.

Psychological Representation of the Machine

But if correctly done, the machine can be appropriately “psychologically represented”

This might allow us to deal with the challenge of intellectual debt where we create machines we cannot explain.

In practice …

LLMs are already being used for robot planning Huang et al. (2023)

Ambiguities are reduced when the machine has had large scale access to human cultural understanding.

Inner Monologue

HAM

Computer Science Paradigm Shift

- Von Neuman Architecture:

- Code and data integrated in memory

- Today (Harvard Architecture):

- Code and data separated for security

Computer Science Paradigm Shift

- Machine learning:

- Software is data

- Machine learning is a high level breach of the code/data separation.

Lancelot

Three Security Examples

1. Safety on Airplanes

rm -rf ~/2. Security at the Gate

- Heathrow/Brussels airport cyber-attack

- Collins Aerospace systems compromised

- Traditional infrastructure vulnerabilities

- Single point of failure in critical systems

3. Agent Safety

- Notion AI Agents security research

- Indirect prompt injection attacks

- HAM vulnerabilities exposed

- “Lethal trifecta” of LLM agents, tool access, and long-term memory

Bandwidth and Security

Agility/Scale

- There is a need to act quickly.

- But a need to react decisevely.

- Creates an agility/scale problem.

Security Implications

- Oversight gap: humans cannot monitor AI systems

- Speed mismatch: incidents unfold faster than human response

- Scale mismatch: AI systems exceed human understanding

- Trust and verification become impossible

Fighting Fire with Fire

- Well deployed these technologies can help.

- Large companies lack agility.

- Small businesses don’t have the technical expertise.

- Trent.AI bringing expertise to bear on the real problems

Human Analogue Machine (HAM) Security

Ross Anderson’s Insight

- “Humans are always the weak link in security chains”

- HAM = “Humans scaled up”

- Amplifies both capabilities AND vulnerabilities

- Social engineering at scale

HAM as “Humans Scaled Up”

- Amplified capabilities: processing power, pattern recognition

- Amplified vulnerabilities: social engineering, authority manipulation

- Error propagation across multiple systems

- Complex interactions difficult to predict

The Notion AI Agents Example

- “Lethal trifecta”: LLM agents, tool access, long-term memory

- Indirect prompt injection attacks

- Authority manipulation and data exfiltration

- RBAC bypass through AI agents

Security Architecture Implications

- Human-centric security design

- Trust and verification challenges

- Fail-safe mechanisms for AI systems

- Transparency and interpretability requirements

Classical Security + GenAI Enhancement

Traditional Security Challenges

- Infrastructure vulnerabilities

- Human factors

- Scale and complexity

- Response time limitations

GenAI Enhancement Opportunities

- Identify existing vulnerabilities before exploiting

- Support OpSec team in resolving

- Learn from patterns across codebases

(Gen)AI-Specific Security Challenges

AI Attack Vectors

- Prompt injection attacks

- Model extraction

- Data poisoning

- Adversarial examples

HAM-Specific Vulnerabilities

- Indirect prompt injection

- Authority manipulation

- Data exfiltration

- RBAC bypass

The “Lethal Trifecta”

- LLM agents: AI systems that understand natural language

- Tool access: Ability to interact with external systems

- Long-term memory: Persistent state that can be manipulated

- Creates vulnerabilities unique to HAM systems

Trent.AI Solution

- Detect problematic prompts early.

Trent’s Security Evolution

Phase 1: Classical Security + GenAI

- Enhance traditional security with AI tools

- Automated threat detection

- Intelligent response systems

- Human-AI collaboration

Phase 2: GenAI Security

- New attack vectors and defenses

- HAM-specific vulnerabilities

- Indirect prompt injection

- Authority manipulation

{Phase 2: GenAI-Specific Security Challenges

The second phase addresses the new security challenges that are unique to generative AI systems. This requires fundamentally different approaches to security design.

Key Focus Areas: - Prompt injection attacks: Defending against indirect prompt injection and authority manipulation

Practical Applications: - Notion AI Agents example: Defending against indirect prompt injection attacks

Phase 3: Information Systems

- Broader architectural implications

Information Systems Security Future

The Horizon Scandal

|

|

|

|

The Lorenzo Scandal

|

|

|

|

Thanks!

company: Trent AI

book: The Atomic Human

twitter: @lawrennd

The Atomic Human pages intellectual debt 84, 85, 349, 365 , intellectual debt 84-85, 349, 365, 376, separation of concerns 84-85, 103, 109, 199, 284, 371, MONIAC 232-233, 266, 343, MacKay, Donald, Behind the Eye 268-270, 316, psychological representation 326–329, 344–345, 353, 361, 367, human-analogue machine 343–5, 346–7, 358–9, 365–8, human-analogue machine (HAMs) 343-347, 359-359, 365-368, Horizon scandal 371.

newspaper: Guardian Profile Page

blog posts: