Where Next for AI?

Abstract

Our current generation of artificial intelligence techniques is driven by data. But we also expect to be able to deploy artificial intelligence techniques on data. What does that mean? Is it a contradiction? How will this affect the wider technology landscape? Is it simply a matter of refining deep neural nets? Or are more disruptive technologies needed? What will be the challenges of deploying AI systems?

Introduction

The Gartner Hype Cycle

Figure: The Gartner Hype Cycle places technologies on a graph that relates the expectations we have of a technology to its actual influence. Early hope for a new technology is often displaced by disillusionment due to the time it takes for a technology to be usefully deployed.

The Gartner Hype Cycle tries to assess where an idea is in terms of maturity and adoption. It splits the evolution of technology into a technological trigger, a peak of expectations followed by a trough of disillusionment and a final ascension into a useful technology. It looks rather like a classical control response to a final set point.
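The control analogy can be made concrete with a small sketch. The code below evaluates the textbook unit step response of an underdamped second-order system, which overshoots its set point, dips below it, and then settles. The damping ratio `zeta` and natural frequency `omega` are arbitrary illustrative values, not quantities fitted to any hype cycle.

```python
import math


def step_response(t, zeta=0.3, omega=1.0):
    """Unit step response of an underdamped second-order system (0 < zeta < 1)."""
    omega_d = omega * math.sqrt(1.0 - zeta**2)  # damped oscillation frequency
    decay = math.exp(-zeta * omega * t)         # envelope that shrinks the oscillation
    return 1.0 - decay * (
        math.cos(omega_d * t)
        + zeta / math.sqrt(1.0 - zeta**2) * math.sin(omega_d * t)
    )


# Sample the response from t = 0 to t = 30 (arbitrary time units).
times = [0.1 * k for k in range(301)]
response = [step_response(t) for t in times]

peak = max(response)                    # analogue of the peak of expectations
peak_index = response.index(peak)
trough = min(response[peak_index:])     # analogue of the trough of disillusionment
final = response[-1]                    # analogue of the eventual plateau

print(f"peak {peak:.2f} at t = {times[peak_index]:.1f}")
print(f"trough after peak {trough:.2f}, final value {final:.3f}")
```

In this reading the set point plays the role of the technology's eventual usefulness: the overshoot is the inflated expectation and the undershoot that follows is the disillusionment.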

Cycle for ML Terms

Google Trends

Figure: A Google Trends search for ‘artificial intelligence’, ‘big data’, ‘data mining’, ‘deep learning’, ‘machine learning’ as different technological terms gives us insight into their popularity over time.

Google Trends gives us insight into the interest in different terms over time.

Examining a Google Trends search for ‘artificial intelligence’, ‘big data’, ‘data mining’, ‘deep learning’ and ‘machine learning’, we can see that ‘artificial intelligence’ may be entering a plateau of productivity, ‘big data’ is entering the trough of disillusionment, and ‘data mining’ seems to be deep within the trough. On the other hand, ‘deep learning’ and ‘machine learning’ appear to be ascending to the peak of inflated expectations, having experienced a technology trigger.

For deep learning that technology trigger was the ImageNet result of 2012 (Krizhevsky et al., n.d.). This step change in performance on object detection in images was achieved through convolutional neural networks, popularly known as ‘deep learning’.

Tracking Hype about Hype

Just checking where the Gartner hype cycle is on the Gartner hype cycle (seems to be at "technology trigger") https://t.co/XbqRJ1bNck

— Neil Lawrence (@lawrennd) October 3, 2017

Lies and Damned Lies

There are three types of lies: lies, damned lies and statistics

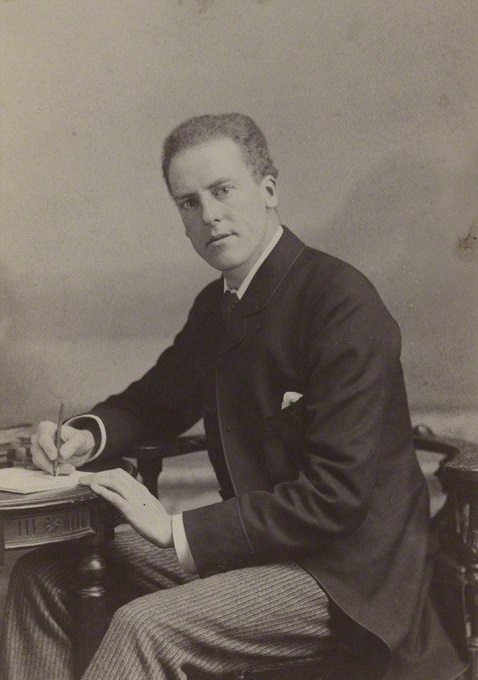

Arthur Balfour 1848-1930

Arthur Balfour was quoting the lawyer James Munro when he said in 1892 that there are three types of lies: lies, damned lies and statistics. This was 20 years before the first academic department of applied statistics was founded at UCL.

If Balfour were alive today, it is likely that he’d rephrase his quote:

There are three types of lies: lies, damned lies and big data.

Why? Because the challenges of understanding and interpreting big data today are similar to those that Balfour (who was a Conservative politician and statesman and would later become Prime Minister) faced in governing an empire through statistics in the latter part of the 19th century.

The quote ‘lies, damned lies and statistics’ was also credited to Benjamin Disraeli by Mark Twain in Twain’s autobiography. It characterizes the idea that statistics can be made to prove anything. But Disraeli died in 1881 and Mark Twain died in 1910. The important breakthrough in overcoming our tendency to over-interpret data came with the formalization of the field through the development of mathematical statistics.

Data has an elusive quality: it promises so much but can deliver little, and it can mislead and misrepresent. To harness it, it must be tamed. In Balfour and Disraeli’s time, during the second half of the 19th century, numbers and data were being accumulated and the social sciences were being developed. There was a large-scale collection of data for the purposes of government.

The modern ‘big data era’ is on the verge of delivering the same sense of frustration that Balfour experienced: the early promise of big data as a panacea is evolving into demands for delivery. For me, personally, peak-hype coincided with an email I received inviting collaboration on a project to deploy “Big Data and Internet of Things in an Industry 4.0 environment”. Further questioning revealed that the actual project was optimization of the efficiency of a manufacturing production line, a far more tangible and realizable goal.

The antidote to this verbiage is found in increasing awareness. When dealing with data, the first trap to avoid is the games of buzzword bingo that we are wont to play. The first goal is to quantify what challenges can be addressed and what techniques are required. Behind the hype, fundamentals are changing. The phenomenon is about the increasing access we have to data: the way customers’ information is recorded, and the way processes are codified and digitized with little overhead. The Internet of Things is about the increasing number of cheap sensors that can be easily interconnected through our modern network structures. But businesses are about making money, and these phenomena need to be recast in those terms before their value can be realized.

For more thoughts on the challenges that statistics brings see Chapter 8 of Lawrence (2024).

Mathematical Statistics

Karl Pearson (1857-1936), Ronald Fisher (1890-1962) and others considered the question of what conclusions can truly be drawn from data. Their mathematical studies act as a restraint on our tendency to over-interpret and see patterns where there are none. They introduced concepts such as randomized controlled trials that form a mainstay of our decision making today, from government to clinicians to the large-scale A/B testing that determines the nature of the web interfaces we interact with on social media and shopping.

Figure: Karl Pearson (1857-1936), one of the founders of Mathematical Statistics.
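As an illustration of how such a restraint works in an A/B testing setting, here is a minimal sketch of a permutation test on the difference in conversion rates between two variants. The visitor counts and conversion numbers are made up for the example, and the function name `permutation_p_value` is hypothetical; a permutation test is only one of several ways to ask whether an observed difference could plausibly be chance.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=2000, seed=0):
    """Two-sided permutation test on the difference in group means."""
    rng = random.Random(seed)
    n_a = len(group_a)
    n_b = len(group_b)
    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)
    pooled = list(group_a) + list(group_b)
    count = 0
    for _ in range(n_permutations):
        # Reassign outcomes to the two groups at random, as if labels were arbitrary.
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            count += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return (count + 1) / (n_permutations + 1)


# Made-up data: 1 = visitor converted, 0 = visitor did not convert.
variant_a = [1] * 50 + [0] * 450          # 50 of 500 converted
variant_b_similar = [1] * 52 + [0] * 448  # 52 of 500 converted
variant_b_better = [1] * 100 + [0] * 400  # 100 of 500 converted

p_similar = permutation_p_value(variant_a, variant_b_similar)
p_better = permutation_p_value(variant_a, variant_b_better)

print(f"50 vs 52 conversions: p = {p_similar:.3f}")
print(f"50 vs 100 conversions: p = {p_better:.4f}")
```

A small p-value says the observed gap would rarely arise from random relabeling alone; a large one warns that the apparent pattern may be noise. Checks of this kind are in the spirit of the restraint that Pearson, Fisher and their contemporaries brought to the interpretation of data.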

Their movement did the most to put statistics to rights, to eradicate the ‘damned lies’. It was known as ‘mathematical statistics’. Today I believe we should look to the emerging field of data science to play the same role. Data science is an amalgam of statistics, data mining, computer systems, databases, computation, machine learning and artificial intelligence. Spread across these fields are the tools we need to realize data’s potential. For many businesses this might be thought of as the challenge of ‘converting bits into atoms’. Bits: the data stored on computers. Atoms: the physical manifestation of what we do, the transfer of goods, the delivery of services. From fungible to tangible. When solving a challenge through data there is a series of obstacles that need to be addressed.

Firstly, data awareness: what data you have and where it’s stored. Sometimes this includes changing your conception of what data is and how it can be obtained, from automated production lines to apps on employees’ smartphones. Often data is locked away: manual logbooks, confidential data, personal data. For increasing awareness an internal audit can help. The website data.gov.uk hosts data made available by the UK government. To create this website the government’s departments went through an audit of what data they each hold and what data they could make available. Similarly, within private businesses this type of audit could be useful for understanding their internal digital landscape: after all, the key to any successful campaign is a good map.

Secondly, availability. How well are the data sources interconnected? How well curated are they? The curse of Disraeli was associated with unreliable data and unreliable statistics. The misrepresentations this leads to are worse than the absence of data as they give a false sense of confidence to decision making. Understanding how to avoid these pitfalls involves an improved sense of data and its value, one that needs to permeate the organization.

The final challenge is analysis: the accumulation of the necessary expertise to digest what the data tells us. Data requires interpretation, and interpretation requires experience. Analysis has become a bottleneck due to a skills shortage, one made more acute by the fact that, ideally, analysis should be carried out by individuals not only skilled in data science but also equipped with the domain knowledge to understand the implications in a given application, and to see opportunities for improvements in efficiency.

‘Mathematical Data Science’

As a term, ‘big data’ promises much and delivers little. To get true value from data, it needs to be curated and evaluated. The three stages of awareness, availability and analysis provide a broad framework through which organizations should be assessing the potential in the data they hold. Hand waving about big data solutions will not do; it will only lead to self-deception. The castles we build on our data landscapes must be based on firm foundations, process and scientific analysis. If we do things right, those are the foundations that will be provided by the new field of data science.

Today the statement “There are three types of lies: lies, damned lies and ‘big data’” may be more apt. We are revisiting many of the mistakes made in interpreting data in the 19th century. Big data is laid down by happenstance, rather than actively collected with a particular question in mind. That means it needs to be treated with care when conclusions are being drawn. For data science to succeed it needs the same form of rigor that Pearson and Fisher brought to statistics: a “mathematical data science” is needed.

You can also check my blog post on Lies, Damned Lies and Big Data.

Electricity

There are parallels between the deployment of machine learning solutions and the uptake of electricity as a means of powering industry. Tim Harford explores the reasons why it took time to exploit the power of electricity in the manufacturing industry. The true benefit of electricity came when machinery had electric motors incorporated. Substituting a centralized steam engine in a manufacturing plant with a centralized electric motor didn’t reduce costs or improve the reconfigurability of a factory. The real advantages came when the belt drives that were necessary to redistribute power were replaced with electric cables and energy was transformed into motion at the machine rather than centrally. This gives a manufacturing plant reconfigurability.

We can expect to see the same thing with our machine learning capabilities. In the analogy, our existing software systems are the steam power, and data driven systems are equivalent to electricity. Currently software engineers create information processing entities (programs) in a centralized manner, whereas data driven systems are reactive and responsive to their environment. Just as with electricity, this brings new flexibility to our systems, but new dangers as well.

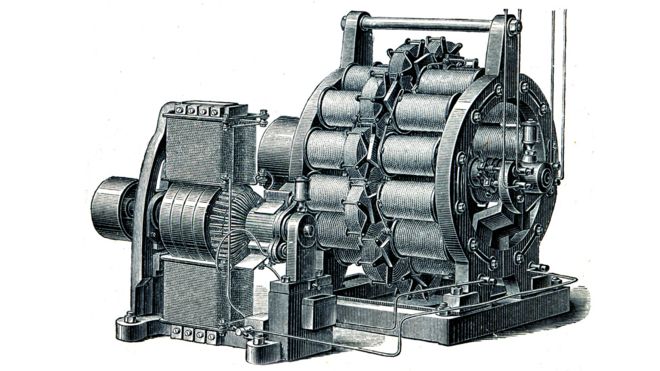

Boulton and Watt’s Steam Engine

Figure: Watt’s Steam Engine which made Steam Power Efficient and Practical.

James Watt’s steam engine contained an early machine learning device. In the same way that modern systems are component based, his engine was composed of components. One of these is a speed regulator, sometimes known as Watt’s governor. The two balls in the center of the image, when spun fast, rise and, through a linkage mechanism, close the valve that admits steam, which slows the engine.
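A speed regulator of this kind can be sketched as a proportional feedback loop. The toy simulation below is not a model of Watt’s engine: the dynamics, the constants (drive strength, friction, feedback gain) and the function name `simulate_engine` are all hypothetical, chosen only to show how feeding speed back into the valve opening keeps the speed near a target when the load changes.

```python
def simulate_engine(governed, steps=4000, dt=0.01):
    """Toy engine: speed responds to valve opening, load and friction (Euler steps)."""
    target = 1.0   # desired speed, arbitrary units (assumption)
    drive = 2.0    # acceleration per unit of valve opening (assumption)
    friction = 0.8 # speed-proportional drag (assumption)
    gain = 5.0     # how strongly excess speed closes the valve (assumption)
    speed = 0.0
    for step in range(steps):
        # The load on the engine increases halfway through the run.
        load = 0.2 if step < steps // 2 else 0.5
        if governed:
            # Faster spin -> balls rise -> valve closes; slower spin -> valve opens.
            valve = 0.5 - gain * (speed - target)
            valve = min(1.0, max(0.0, valve))  # a valve cannot open beyond its limits
        else:
            valve = 0.5  # fixed opening, tuned for the initial load only
        acceleration = drive * valve - load - friction * speed
        speed += acceleration * dt
    return speed


final_governed = simulate_engine(governed=True)
final_ungoverned = simulate_engine(governed=False)

print(f"final speed with governor:    {final_governed:.3f}")
print(f"final speed without governor: {final_ungoverned:.3f}")
```

With the fixed valve the speed drifts well away from the target once the load rises, whereas the feedback loop holds it close. Pure proportional feedback leaves a small residual error, which is why the governed speed settles slightly below the target rather than exactly on it.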

Thanks!

For more information on these subjects and more you might want to check the following resources.

- company: Trent AI

- book: The Atomic Human

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com