The Atomic Human

Abstract

Our fascination with AI stems from the perceived uniqueness of human intelligence. We believe it’s what differentiates us. Fears of AI not only concern how it invades our digital lives, but also the implied threat of an intelligence that displaces us from our position at the centre of the world.

Neil D. Lawrence’s visionary book shows why these fears may be misplaced.

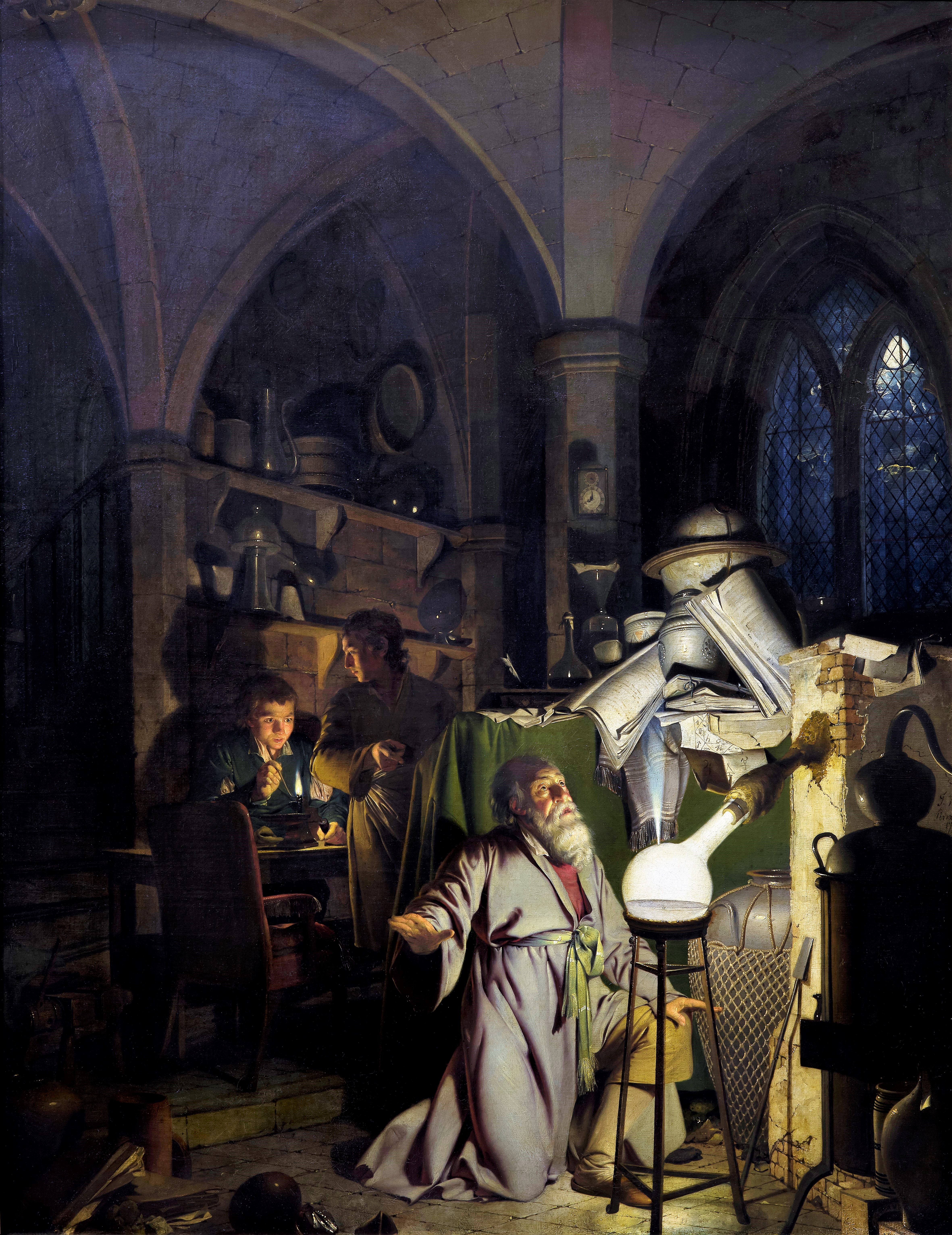

Philosopher’s Stone

Figure: The Alchemist by Joseph Wright of Derby (1771). The picture depicts Hennig Brand discovering the element phosphorus when searching for the Philosopher’s Stone.

The philosopher’s stone is a mythical substance that can convert base metals to gold.

In our modern economy, automation has the same effect. During the industrial revolution, steel and steam replaced human manual labour. Today, silicon and electrons are being combined to replace human mental labour.

The Attention Economy

Herbert Simon on Information

What information consumes is rather obvious: it consumes the attention of its recipients. Hence a wealth of information creates a poverty of attention …

Simon (1971)

The attention economy was a phenomenon described in 1971 by the American computer scientist Herbert Simon. He saw the coming information revolution and wrote that a wealth of information would create a poverty of attention. Too much information means that human attention becomes the scarce resource, the bottleneck. It becomes the gold in the attention economy.

The power associated with control of information dates back to the invention of writing. By pressing reeds into clay tablets Sumerian scribes stored information and controlled the flow of information.

Human Capital Index

The World Bank’s human capital index is one area where the UK is a leading international economy, or at least an area where we currently outperform both the USA and China. The index is a measure of education and health of a population.

Productivity Flywheel

Figure: The productivity flywheel suggests technical innovation is reinvested.

The productivity flywheel should return the gains released by productivity through funding. This relies on the economic value mapping the underlying value.

Inflation of Human Capital

This transformation creates efficiency. But it also devalues the skills that form the backbone of human capital and create a happy, healthy society. Had the alchemists ever discovered the philosopher’s stone, using it would have triggered mass inflation and devalued any reserves of gold. Similarly, our reserve of precious human capital is vulnerable to automation and devaluation in the artificial intelligence revolution. The skills we have learned, whether manual or mental, risk becoming redundant in the face of the machine.

Inflation Proof Human Capital

Will AI totally displace the human? Or is there any form, a core, an irreducible element of human attention that the machine cannot replace? If so, this would be a robust foundation on which to build our digital futures.

Uncertainty Principle

Unfortunately, when we seek it out, we are faced with a form of uncertainty principle. Machines rely on measurable outputs, meaning any aspect of human ability that can be quantified is at risk of automation. But the most essential aspects of humanity are the hardest to measure.

So, the closer we get to the atomic human the more difficult it is to measure the value of the associated human attention.

The Atomic Human

Figure: The Atomic Eye. By slicing away aspects of the human that we used to believe to be unique to us, but are now the preserve of the machine, we learn something about what it means to be human.

The development of what some are calling intelligence in machines raises questions about what machine intelligence means for our own intelligence. The idea of the atomic human is derived from Democritus’s atomism.

In the fifth century bce the Greek philosopher Democritus posed a similar question about our physical universe. He imagined cutting physical matter into pieces in a repeated process: cutting a piece, then taking one of the cut pieces and cutting it again so that each time it becomes smaller and smaller. Democritus believed this process had to stop somewhere, that we would be left with an indivisible piece. The Greek word for indivisible is atom, and so this theory was called atomism. This book considers this question, but in a different domain, asking: As the machine slices away portions of human capabilities, are we left with a kernel of humanity, an indivisible piece that can no longer be divided into parts? Or does the human disappear altogether? If we are left with something, then that uncuttable piece, a form of atomic human, would tell us something about our human spirit.

See Lawrence (2024) atomic human, the p. 13.

Homo Atomicus

We won’t find the atomic human in the percentage of A grades that our children are achieving at schools or the length of waiting lists we have in our hospitals. It sits behind all this. We see the atomic human in the way a nurse spends an extra few minutes ensuring a patient is comfortable or a bus driver pauses to allow a pensioner to cross the road or a teacher praises a struggling student to build their confidence.

We need to move away from homo economicus towards homo atomicus.

Embodiment Factors

| | computer | human |
|---|---|---|
| bits/min | billions | 2,000 |
| billion calculations/s | ~100 | a billion |
| embodiment | 20 minutes | 5 billion years |

Figure: Embodiment factors are the ratio between our ability to compute and our ability to communicate. Relative to the machine we are also locked in. In the table we represent embodiment as the length of time it would take to communicate one second’s worth of computation. For computers it is a matter of minutes, but for a human, it is a matter of thousands of millions of years. See also “Living Together: Mind and Machine Intelligence” Lawrence (2017)

There is a fundamental limit placed on our intelligence based on our ability to communicate. Claude Shannon founded the field of information theory. The clever part of this theory is it allows us to separate our measurement of information from what the information pertains to.

Shannon measured information in bits. One bit of information is the amount of information I pass to you when I give you the result of a coin toss. Shannon was also interested in the amount of information in the English language. He estimated that on average a word in the English language contains 12 bits of information.
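The coin-toss definition of a bit can be checked with a minimal sketch of Shannon's entropy formula, $H = -\sum_i p_i \log_2 p_i$. The function name `entropy_bits` and the biased-coin probabilities are illustrative assumptions, not something taken from the book.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy in bits of a discrete distribution.

    Outcomes with zero probability contribute nothing to the sum.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# A fair coin toss: two equally likely outcomes carry exactly one bit.
fair_coin = entropy_bits([0.5, 0.5])

# A biased coin (hypothetical 90/10 split) is more predictable,
# so each toss carries less than one bit.
biased_coin = entropy_bits([0.9, 0.1])

print(f"fair coin:   {fair_coin} bits")
print(f"biased coin: {biased_coin:.3f} bits")
```

The more predictable the outcome, the less information its result conveys, which is why the measure is independent of what the outcome actually refers to.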

Given typical speaking rates, that gives us an estimate of our ability to communicate of around 100 bits per second (Reed and Durlach, 1998). Computers on the other hand can communicate much more rapidly. Current wired network speeds are around a billion bits per second, ten million times faster.

When it comes to compute though, our best estimates indicate our computers are slower. A typical modern computer can perform around 100 billion floating-point operations per second, and each floating-point operation involves a 64-bit number. So the computer is processing around 6,400 billion bits per second.

It’s difficult to get similar estimates for humans, but by some estimates the amount of compute we would require to simulate a human brain is equivalent to that in the UK’s fastest computer (Ananthanarayanan et al., 2009), the Met Office machine in Exeter, which in 2018 ranked as the 11th fastest computer in the world. That machine simulates the world’s weather each morning, and then simulates the world’s climate in the afternoon. It is a 16-petaflop machine which, at 64 bits per operation, processes around a million trillion bits per second.
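The embodiment factor can be worked through as a rough sketch using the figures quoted in the prose above. The function name `embodiment_seconds` is an assumption for illustration, and treating every floating-point operation as 64 bits for both the computer and the brain-equivalent machine is the text's own simplification. The results are order-of-magnitude estimates only and need not reproduce the rounded entries in the table.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
BITS_PER_FLOP = 64  # each floating-point operation involves a 64-bit number


def embodiment_seconds(compute_bits_per_s, communicate_bits_per_s):
    """Seconds needed to communicate one second's worth of computation."""
    return compute_bits_per_s / communicate_bits_per_s


# Computer: ~100 billion flop/s of compute, ~1 billion bits/s of network.
computer_compute = 100e9 * BITS_PER_FLOP
computer_communicate = 1e9

# Human: compute taken as equivalent to a 16-petaflop machine,
# communication at ~100 bits/s of speech.
human_compute = 16e15 * BITS_PER_FLOP
human_communicate = 100.0

computer_factor = embodiment_seconds(computer_compute, computer_communicate)
human_factor = embodiment_seconds(human_compute, human_communicate)

print(f"computer: {computer_factor / 60:.0f} minutes")
print(f"human:    {human_factor / SECONDS_PER_YEAR:.2e} years")
```

Whatever the exact inputs, the gap is what matters: the computer can share a second of its computation in minutes or hours, while the human would need hundreds of millions of years or more.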

See Lawrence (2024) embodiment factor p. 13, 29, 35, 79, 87, 105, 197, 216-217, 249, 269, 353, 369.

Bandwidth Constrained Conversations

Figure: Conversation relies on internal models of other individuals.

Figure: Misunderstanding of context and who we are talking to leads to arguments.

Embodiment factors imply that, in our communication between humans, what is not said is, perhaps, more important than what is said. To communicate with each other we need to have a model of who each of us are.

To aid this, in society, we are required to perform roles, whether as a parent, a teacher, an employee or a boss. Each of these roles requires that we conform to certain standards of behaviour to facilitate communication between ourselves.

Control of self is vitally important to these communications.

The abundance of data available to humans undermines human-to-human communication channels by providing new routes to undermine our control of self.

The consequences of this mismatch between power and delivery are to be seen all around us. Because, just as driving an F1 car with bicycle wheels would be a fine art, so is the process of communication between humans.

If I have a thought and I wish to communicate it, I first need to have a model of what you think. I should think before I speak. When I speak, you may react. You have a model of who I am and what I was trying to say, and why I chose to say what I said. Now we begin this dance, where we are each trying to better understand each other and what we are saying. When it works, it is beautiful, but when mis-deployed, just like a badly driven F1 car, there is a horrible crash, an argument.

Computer Conversations

Figure: Conversation relies on internal models of other individuals.

Figure: Misunderstanding of context and who we are talking to leads to arguments.

Similarly, we find it difficult to comprehend how computers are making decisions, because they do so with more data than we can possibly imagine.

In many respects, this is not a problem; it’s a good thing. Computers and humans are good at different things. But when we interact with a computer and it acts in a different way to us, we need to remember why.

Just as the first step to getting along with other humans is understanding other humans, so it needs to be with getting along with our computers.

Embodiment factors explain why, at the same time, computers are so impressive in simulating our weather, but so poor at predicting our moods. Our complexity is greater than that of our weather, and each of us is tuned to read and respond to one another.

Their intelligence is different. It is based on very large quantities of data that we cannot absorb. Our computers don’t have a complex internal model of who we are. They don’t understand the human condition. They are not tuned to respond to us as we are to each other.

Embodiment factors encapsulate a profound thing about the nature of humans. Our locked-in intelligence means that we are striving to communicate, so we put a lot of thought into what we’re communicating with. And if we’re communicating with something complex, we naturally anthropomorphize it.

We give our dogs, our cats, and our cars human motivations. We do the same with our computers. We anthropomorphize them. We assume that they have the same objectives as us and the same constraints. They don’t.

This means that when we worry about artificial intelligence, we worry about the wrong things. We fear computers that behave like more powerful versions of ourselves and that will strive to outcompete us.

In reality, the challenge is that our computers cannot be human enough. They cannot understand us with the depth we understand one another. They drop below our cognitive radar and operate outside our mental models.

The real danger is that computers don’t anthropomorphize. They’ll make decisions in isolation from us without our supervision because they can’t communicate truly and deeply with us.

New Flow of Information

Classically the field of statistics focused on mediating the relationship between the machine and the human. Our limited bandwidth of communication means we tend to over-interpret the limited information that we are given; in the extreme, we assign motives and desires to inanimate objects (a process known as anthropomorphizing). Much of mathematical statistics was developed to help temper this tendency and understand when we are valid in drawing conclusions from data.

Figure: The trinity of human, data, and computer highlights the modern phenomenon. The communication channel between computer and data now has an extremely high bandwidth. The channels between human and computer and between data and human are narrow. There is a new direction of information flow: information is reaching us mediated by the computer. The focus on classical statistics reflected the importance of the direct communication between human and data. The modern challenges of data science emerge when that relationship is being mediated by the machine.

Data science brings new challenges. In particular, there is a very large bandwidth connection between the machine and data. This means that our relationship with data is now commonly being mediated by the machine, whether in the acquisition of new data, which now happens by happenstance rather than with purpose, or in the interpretation of that data, where we increasingly rely on machines to summarize what the data contains. This is leading to the emerging field of data science, which must not only deal with the same challenges that mathematical statistics faced in tempering our tendency to over-interpret data but must also deal with the possibility that the machine has either inadvertently or maliciously misrepresented the underlying data.

New Productivity Paradox

Thus we face a new productivity paradox. The classical tools of economic intervention cannot map hard-to-measure supply and demand of quality human attention. So how do we build a new economy that utilises our lead in human capital and delivers the digital future we aspire to?

One answer is to look at the human capital index. This measures the quality and quantity of the attention economy via the health and education of our population.

We need to value this and find a way to reinvest human capital, returning the value of the human back into the system when considering productivity gains from technology like AI.

This means a tighter mapping between what the public want and what the innovation economy delivers. It means more agile policy that responds to public dialogue with tangible solutions co-created with the people who are doing the actual work. It means, for example, freeing up a nurse’s time with technology tools and allowing them to spend that time with patients.

To deliver this, our academic institutions need to step up. Too often in the past we have been distant from the difficulties that society faces. We have been too remote from the real challenges of everyday lives — challenges that don’t make the covers of prestige science magazines. People are rightly angry that innovations like AI have yet to address the problems they face, including in health, social care and education.

Of course, universities cannot fix this on their own, but academics can operate as honest brokers that bridge gaps between public and private considerations, convene different groups and understand, celebrate and empower the contributions of individuals.

This requires people who are prepared to dedicate their time to improving each other’s lives, developing new best practices and sharing them with colleagues and coworkers.

To preserve our human capital and harness our potential, we need the AI alchemists to provide us with solutions that can serve both science and society.

Innovation Economy Challenges

Innovating to serve science and society requires a pipeline of interventions. As well as advances in the technical capabilities of AI technologies, engineering knowhow is required to safely deploy and monitor those solutions in practice. Regulatory frameworks need to adapt to ensure trustworthy use of these technologies. Aligning technology development with public interests demands effective stakeholder engagement to bring diverse voices and expertise into technology design.

Building this pipeline will take coordination across research, engineering, policy and practice. It also requires action to address the digital divides that influence who benefits from AI advances. These include digital divides within the socioeconomic strata that need to be overcome – AI must not exacerbate existing inequalities or create new ones. In addressing these challenges, we can be hindered by divides that exist between traditional academic disciplines. We need to develop common understanding of the problems and a shared knowledge of possible solutions.

Digital Failure Examples

The Horizon Scandal

In the UK we saw these effects play out in the Horizon scandal: the accounting system of the national postal service was computerized by Fujitsu and first installed in 1999, but neither the Post Office nor Fujitsu was able to control the system they had deployed. When it went wrong, individual subpostmasters were blamed for the system’s errors. Over the next two decades they were prosecuted and jailed, leaving lives ruined in the wake of the machine’s mistakes.

See Lawrence (2024) Horizon scandal p. 371.

The Lorenzo Scandal

The Lorenzo scandal arose from the National Programme for IT, which was intended to allow the NHS to move towards electronic health records.

The oral transcript can be found at https://publications.parliament.uk/pa/cm201012/cmselect/cmpubacc/1070/11052302.htm.

One quote from 16:54:33 in the committee discussion captures the top-down nature of the project.

Q117 Austin Mitchell: You said, Sir David, the problems came from the middle range, but surely they were implicit from the start, because this project was rushed into. The Prime Minister [Tony Blair] was very keen, the delivery unit was very keen, it was very fashionable to computerise things like this. An appendix indicating the cost would be £5 billion was missed out of the original report as published, so you have a very high estimate there in the first place. Then, Richard Granger, the Director of IT, rushed through, without consulting the professions. This was a kind of computer enthusiast’s bit, was it not? The professionals who were going to have to work it were not consulted, because consultation would have made it clear that they were going to ask more from it and expect more from it, and then contracts for £1 billion were let pretty well straightaway, in May 2003. That was very quick. Now, why were the contracts let before the professionals were consulted?

An analysis of the problems was published by Justinia (2017). Based on the paper, the key challenges faced in the UK’s National Programme for IT (NPfIT) included:

Lack of adequate end user engagement, particularly with frontline healthcare staff and patients. The program was imposed from the top-down without securing buy-in from stakeholders.

Absence of a phased change management approach. The implementation was rushed without proper planning for organizational and cultural changes.

Underestimating the scale and complexity of the project. The centralized, large-scale approach was overambitious and difficult to manage.

Poor project management, including unrealistic timetables, lack of clear leadership, and no exit strategy.

Insufficient attention to privacy and security concerns regarding patient data.

Lack of local ownership. The centralized approach meant local healthcare providers felt no ownership over the systems.

Communication issues, including poor communication with frontline staff about the program’s benefits.

Technical problems, delays in delivery, and unreliable software.

Failure to recognize that the socio-cultural challenges were as significant as the technical ones.

Lack of flexibility to adapt to changing requirements over the long timescale.

Insufficient resources and inadequate methodologies for implementation.

Low morale among NHS staff responsible for implementation due to uncertainties and unrealistic timetables.

Conflicts between political objectives and practical implementation needs.

The paper emphasizes that while technical competence is necessary, the organizational, human, and change management factors were more critical to the program’s failure than purely technological issues. The top-down, centralized approach and lack of stakeholder engagement were particularly problematic.

Reports at the Time

Report https://publications.parliament.uk/pa/cm201012/cmselect/cmpubacc/1070/1070.pdf

Commonalities

Both the Horizon and Lorenzo scandals highlight fundamental disconnects between macro-level policy decisions and micro-level operational realities. The projects failed to properly account for how systems would actually be used in practice, with devastating consequences.

The key failures can be summarized in four main points:

The failures stemmed from insufficient consideration of local needs, capabilities, and existing systems.

There was a lack of effective feedback mechanisms from the micro to macro level.

The implementations suffered from overly rigid, top-down approaches that didn’t allow for local adaptation.

In both cases, there was insufficient engagement with end-users and local stakeholders.

These systemic failures demonstrate how large-scale digital transformations can go catastrophically wrong when there is a disconnect between high-level strategy and ground-level implementation. Future digital initiatives must bridge this macro-micro gap through meaningful stakeholder engagement and adaptable implementation approaches.

These examples provide valuable lessons for bridging the macro-micro gap in innovation. Success requires comprehensive stakeholder engagement at all levels, with system designs that can flex and adapt to local needs. Effective feedback mechanisms between implementation and policy levels are crucial, supported by phased rollouts that allow for learning and adjustment. Technical competence must be ensured across both policy-making and implementation teams, with realistic timelines based on operational realities. Independent, technically competent oversight can provide valuable guidance and accountability.

Two Types of Stochastic Parrot

Figure: This is the drawing Dan was inspired to create for Chapter 5. An AI parrot repeats information about AI doom panicking humans.

Bender et al. (2021) warned about large language models and suggested that they are operating as stochastic parrots. But we are also seeing an increase in hyperbole and uninformed, yet confident comment, from another form of stochastic parrot. Those who, like ChatGPT, confidently assert opinion based on hearsay rather than experience. These stochastic parrots are a major challenge for organisations trying to assimilate these technologies.

See this reflection on Two Types of Stochastic Parrots.

What do People Want?

Public Dialogue on AI in Public Services

Figure: In September 2024, ai@cam convened a series of public dialogues to understand perspectives on the role of AI in delivering policy agendas.

In September 2024, ai@cam convened a series of public dialogues to understand perspectives on the role of AI in delivering priority policy agendas. Through workshops in Cambridge and Liverpool, 40 members of the public shared their views on how AI could support delivery of four key government missions around health, crime and policing, education, and energy and net zero.

The dialogue revealed a pragmatic public view that sees clear benefits from AI in reducing administrative burdens and improving service efficiency, while emphasizing the importance of maintaining human-centered services and establishing robust governance frameworks.

Key participant quotes illustrate this balanced perspective:

“It must be so difficult for GPs to keep track of how medication reacts with other medication on an individual basis. If there’s some database that shows all that, then it can only help, can’t it?”

Public Participant, Liverpool pg 10 ai@cam and Hopkins Van Mil (2024)

“I think a lot of the ideas need to be about AI being like a co-pilot to someone. I think it has to be that. So not taking the human away.”

Public Participant, Liverpool pg 15 ai@cam and Hopkins Van Mil (2024)

AI in Healthcare: Public Perspectives

In healthcare discussions, participants saw clear opportunities for AI to support NHS administration and improve service delivery, while expressing caution about AI involvement in direct patient care and diagnosis.

Participants identified several key aspirations for AI in healthcare. A major focus was on reducing the administrative workload that currently burdens healthcare professionals, allowing them to spend more time on direct patient care. There was strong support for AI’s potential in early diagnosis and preventive care, where it could help identify health issues before they become severe. The public also saw significant value in AI accelerating medical research and drug development processes, potentially leading to new treatments more quickly. Finally, participants recognized AI’s capability to help manage complex medical conditions by analyzing large amounts of patient data and identifying optimal treatment strategies. These aspirations reflect a pragmatic view of AI as a tool to enhance healthcare delivery while maintaining the central role of human medical professionals.

Illustrative quotes show the nuanced views.

“My wife [an NHS nurse] says that the paperwork side takes longer than the actual care.”

Public Participant, Liverpool pg 9 ai@cam and Hopkins Van Mil (2024)

“I wouldn’t just want to rely on the technology for something big like that, because obviously it’s a lifechanging situation.”

Public Participant, Cambridge pg 10 ai@cam and Hopkins Van Mil (2024)

Concerns focused particularly on maintaining human involvement in healthcare decisions and protecting patient privacy.

AI in Education: Public Perspectives

In education discussions, participants strongly supported AI’s potential to reduce teacher workload but expressed significant concerns about screen time and the importance of human interaction in learning.

A clear distinction emerged between support for AI in administrative tasks versus direct teaching roles. Participants emphasized that core aspects of education require human qualities that AI cannot replicate.

Key quotes illustrate these views:

“Education isn’t just about learning, it’s about preparing children for life, and you don’t do all of that in front of a screen.”

Public Participant, Cambridge ai@cam and Hopkins Van Mil (2024) pg 18

“Kids with ADHD or autism might prefer to interact with an iPad than they would a person, it could lighten the load for them.”

Public Participant, Liverpool ai@cam and Hopkins Van Mil (2024) pg 17

The dialogue revealed particular concern about the risk of AI increasing screen time and reducing social interaction, while acknowledging potential benefits for personalized learning support.

Dialogue Summary

The public dialogue revealed several important cross-cutting themes about how AI should be deployed in public services. First and foremost was the principle that AI should enhance rather than replace human capabilities - participants consistently emphasized that AI should be a tool to support and augment human work rather than substitute for it. There was also strong consensus that robust governance frameworks need to be established before AI systems are deployed in public services, to ensure proper oversight and accountability.

Transparency and public engagement emerged as essential requirements, with participants emphasizing the need for clear communication about how AI is being used and meaningful opportunities for public input. The fair distribution of benefits was another key concern - participants wanted assurance that AI-enabled improvements would benefit all segments of society rather than exacerbating existing inequalities. Finally, there was strong emphasis on maintaining human-centered service delivery, ensuring that the introduction of AI doesn’t diminish the crucial human elements of public services.

A powerful theme throughout the dialogue was the desire to maintain human connection and expertise while leveraging AI’s capabilities to improve service efficiency and effectiveness. As one participant noted:

“We need to look at the causes, we need to do some more thinking and not just start using AI to plaster over them [societal issues].”

Public Participant, Cambridge pg 13 ai@cam and Hopkins Van Mil (2024)

Royal Society Report

Figure: The Royal Society report on Machine Learning was released on 25th April 2017

A useful reference for state of the art in machine learning is the UK Royal Society Report, Machine Learning: Power and Promise of Computers that Learn by Example.

See Lawrence (2024) Royal Society; machine learning review and p. 25, 321, 395.

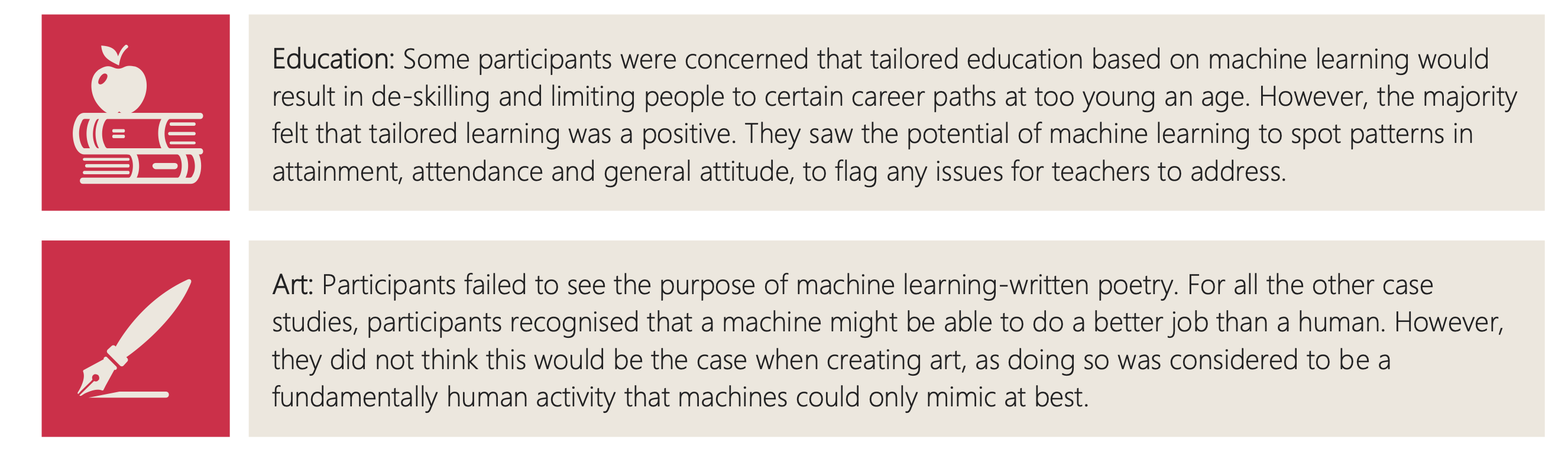

Public Research

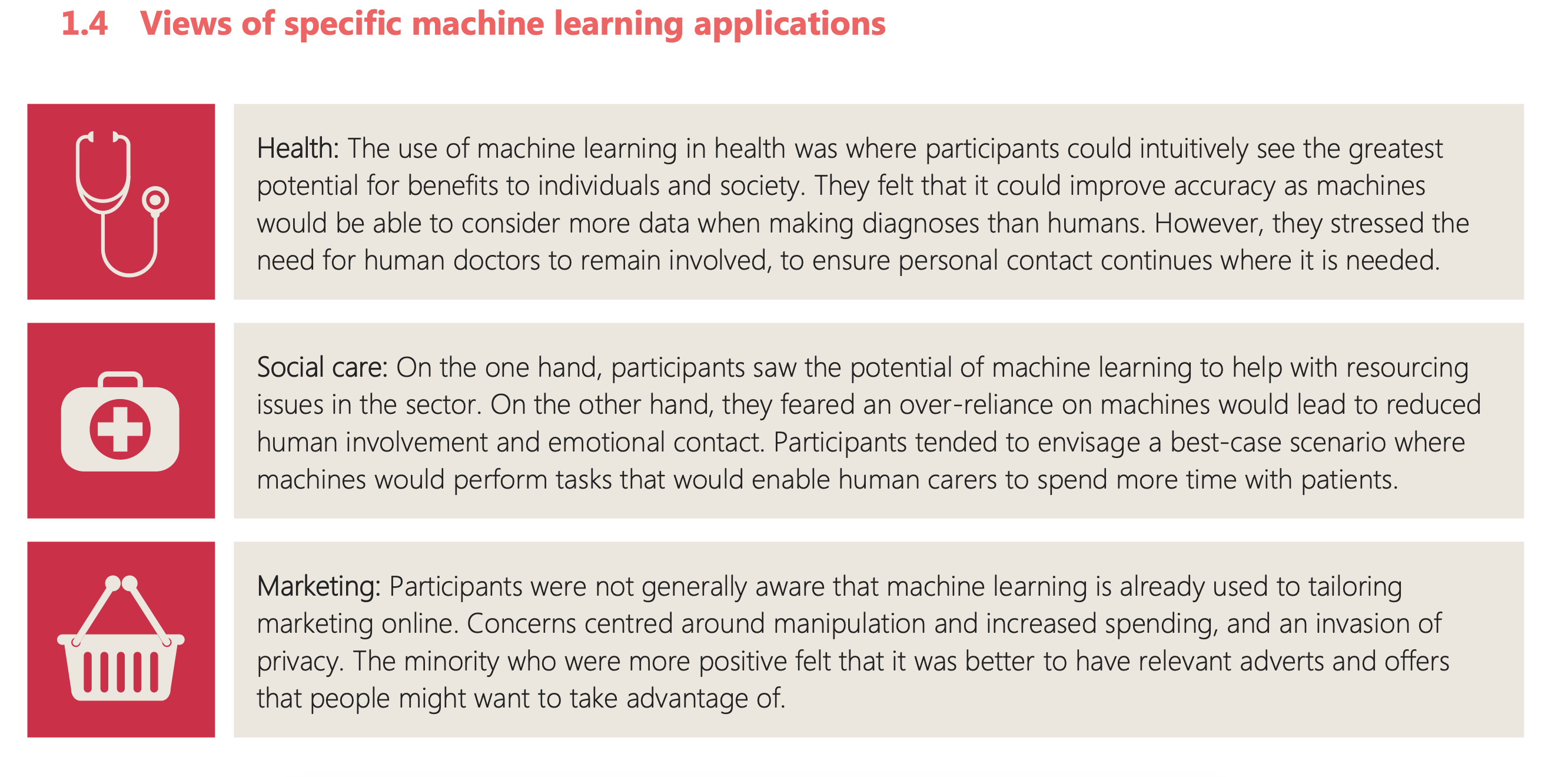

Figure: The Royal Society commissioned public research from Mori as part of the machine learning review.

As is often the case when members of the public are well convened, they offered sensible opinions about the utility and challenges of machine learning methods.

Figure: One of the questions focussed on machine learning applications.

A range of different scenarios were captured by this qualitative work.

Figure: The public were broadly supportive of a range of application areas.

Figure: But they failed to see the point in AIs that could produce poetry.

The one scenario where the public were unable to perceive any advantage in machine learning was an example from art, the creation of poetry. This was felt to be a fundamentally human activity that machines could only emulate at best.

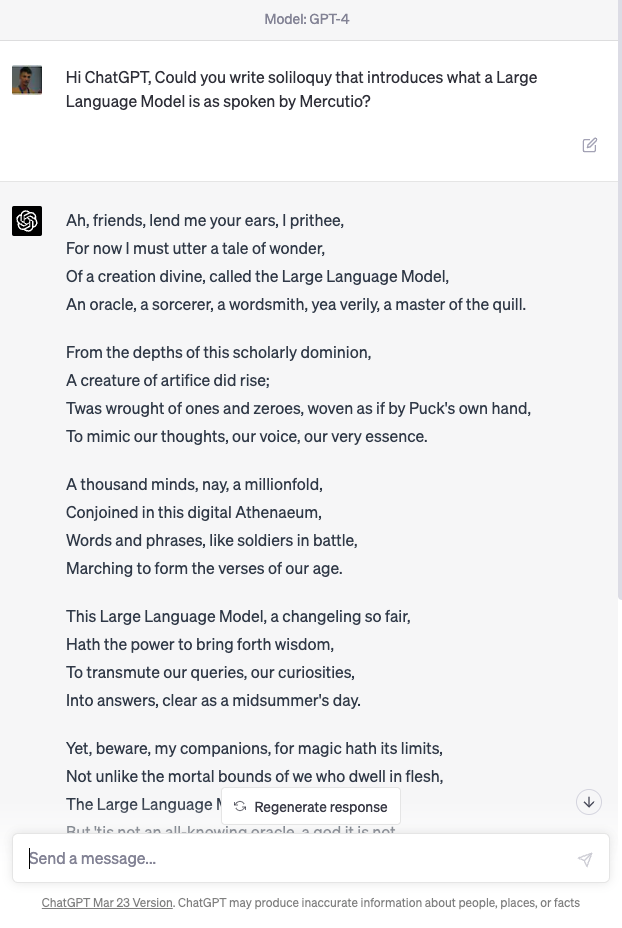

Mercutio

It’s ironic that despite the public focus on transport, health, cities, crime and their confusion as to why we might want AI to do art, one area where significant progress has been made is on AIs that can create literature.

Figure: Despite the public’s desire to see challenges in health, finance, transport, crime solved by machine learning and their skepticism around poetry, one of the problems we have solved seems to be poetry.

User: Hi ChatGPT, Could you write soliloquy that introduces what a Large Language Model is as spoken by Mercutio?

Machine: Ah, friends, lend me your ears, I prithee, For now I must utter a tale of wonder, Of a creation divine, called the Large Language Model, An oracle, a sorcerer, a wordsmith, yea verily, a master of the quill.

From the depths of this scholarly dominion, A creature of artifice did rise; Twas wrought of ones and zeroes, woven as if by Puck’s own hand, To mimic our thoughts, our voice, our very essence.

A thousand minds, nay, a millionfold, Conjoined in this digital Athenaeum, Words and phrases, like soldiers in battle, Marching to form the verses of our age.

This Large Language Model, a changeling so fair, Hath the power to bring forth wisdom, To transmute our queries, our curiosities, Into answers, clear as a midsummer’s day.

Yet, beware, my companions, for magic hath its limits, Not unlike the mortal bounds of we who dwell in flesh, The Large Language Model hath its strengths, But ’tis not an all-knowing oracle, a god it is not.

As Mercutio, your humble servant, doth stand before thee, I marvel at the art and science that hath brought forth this wondrous creation, This Large Language Model, a testament to man’s yearning, To comprehend the world and make meaning of the great tapestry of existence.

In faith, dear friends, let us embrace the magic and beauty of this digital bard, And mayhaps, by its grace, we shall glean some wisdom, As we wander together, hand in hand, Through this boundless realm of knowledge and wonder.

ChatGPT transcript, 14th April 2023.

What’s the solution?

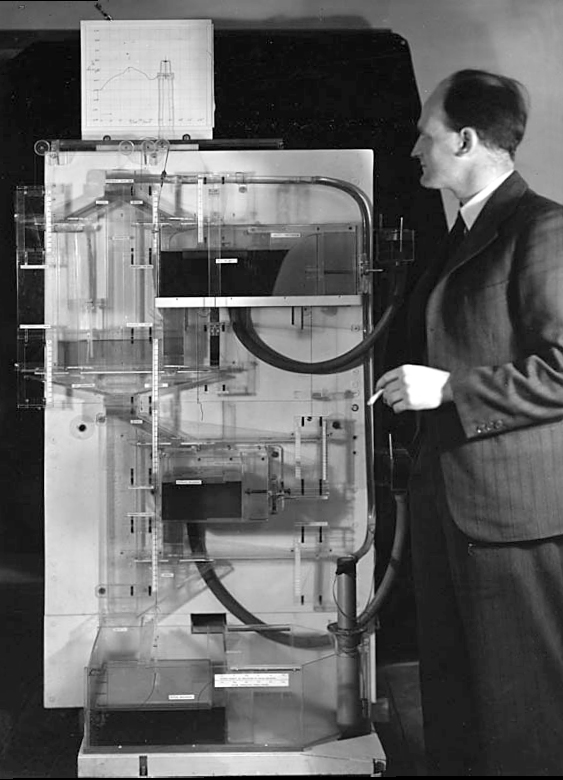

The MONIAC

The MONIAC was an analogue computer designed to simulate the UK economy. Analogue computers work through analogy; the analogy in the MONIAC is that both money and water flow. The MONIAC exploits this through a system of tanks, pipes, valves and floats that represent the flow of money through the UK economy. Water flowed from the treasury tank at the top of the model to other tanks representing government spending, such as health and education. The machine was initially designed for teaching support but was also found to be a useful economic simulator. Several were built. Today you can see the original at Leeds Business School; there is also one in the London Science Museum and one in the University of Cambridge’s economics faculty.

Figure: Bill Phillips and his MONIAC (completed in 1949). The machine is an analogue computer designed to simulate the workings of the UK economy.

See Lawrence (2024) MONIAC p. 232-233, 266, 343.
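The water-for-money analogy can be illustrated with a very small stock-and-flow sketch. This is not a model of the real machine: the tank names, starting level and valve settings below are hypothetical, and the floats and feedback loops of the actual MONIAC are left out. It only shows the basic idea that a fixed volume of water is moved between tanks through valves.

```python
from dataclasses import dataclass


@dataclass
class Tank:
    """A tank holding a volume of water, standing in for a stock of money."""
    name: str
    level: float = 0.0


@dataclass
class Pipe:
    """A pipe with a valve: each step it moves a fraction of the source level."""
    source: Tank
    target: Tank
    valve: float  # fraction of the source level drained per step (hypothetical)


def step(pipes):
    """Advance the model one time step, conserving the total volume of water."""
    # Compute every flow from the levels at the start of the step,
    # then apply them, so the order of the pipes does not matter.
    flows = [(p, p.valve * p.source.level) for p in pipes]
    for pipe, flow in flows:
        pipe.source.level -= flow
        pipe.target.level += flow


# Hypothetical layout: a treasury tank feeding two spending tanks.
treasury = Tank("treasury", level=100.0)
health = Tank("health")
education = Tank("education")

pipes = [
    Pipe(treasury, health, valve=0.10),
    Pipe(treasury, education, valve=0.05),
]

for _ in range(3):
    step(pipes)

total = treasury.level + health.level + education.level
print(f"treasury={treasury.level:.2f} health={health.level:.2f} "
      f"education={education.level:.2f} total={total:.2f}")
```

Opening a valve further sends more water to that tank at the expense of the others, which is the kind of question the physical machine let students explore by hand.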

Human Analogue Machine

Recent breakthroughs in generative models, particularly large language models, have enabled machines that, for the first time, can converse plausibly with other humans.

The Apollo guidance computer provided Armstrong with an analogy when he landed the lunar module on the Moon. He controlled the craft through a stick, and the stick gave him that analogy. The analogy is based on the experience that Amelia Earhart had when she flew her plane. Armstrong’s control exploited his experience as a test pilot flying planes that had control columns which were directly connected to their control surfaces.

Figure: The human analogue machine is the new interface that large language models have enabled the human to present. It has the capabilities of the computer in terms of communication, but it appears to present a “human face” to the user in terms of its ability to communicate on our terms. (Image quite obviously not drawn by generative AI!)

The generative systems we have produced do not provide us with the “AI” of science fiction, because their intelligence is based on emulating human knowledge. Through being forced to reproduce our literature and our art they have developed aspects which are analogous to the cultural proxy truths we use to describe our world.

These machines are to humans what the MONIAC was to the British economy. Not a replacement, but an analogue computer that captures some aspects of humanity while providing the advantages of the machine’s high bandwidth.

HAM

The Human-Analogue Machine or HAM therefore provides a route through which we could better understand our world through improving the way we interact with machines.

Figure: The trinity of human, data, and computer highlights the modern phenomenon. The communication channel between computer and data now has an extremely high bandwidth. The channel between human and computer and the channel between data and human are narrow. There is a new direction of information flow: information is reaching us mediated by the computer. The focus on classical statistics reflected the importance of the direct communication between human and data. The modern challenges of data science emerge when that relationship is being mediated by the machine.

The HAM can provide an interface between the digital computer and the human, allowing humans to work closely with computers regardless of their understanding of the more technical parts of software engineering.

Figure: The HAM now sits between us and the traditional digital computer.

Of course this route provides new routes for manipulation, new ways in which the machine can undermine our autonomy or exploit our cognitive foibles. The major challenge we face is steering between these worlds where we gain the advantage of the computer’s bandwidth without undermining our culture and individual autonomy.

See Lawrence (2024) human-analogue machine (HAMs) p. 343-347, 359-359, 365-368.

Supply Chain of Ideas

The model is a “supply chain of ideas” framework, particularly in the context of information technology and AI solutions like machine learning and large language models. The suggestion is that this flow of ideas, from creation to application, is similar to how physical goods move through economic supply chains.

In the realm of IT solutions, there’s been an overemphasis on macro-economic “supply-side” stimulation - focusing on creating new technologies and ideas - without enough attention to the micro-economic “demand-side” - understanding and addressing real-world needs and challenges.

Imagining the supply chain rather than just the notion of the Innovation Economy allows the conceptualisation of the gaps between macro and micro economic issues, enabling a different way of thinking about process innovation.

Phrasing things in terms of a supply chain of ideas suggests that innovation requires both characterisation of the demand and the supply of ideas. This leads to four key elements:

- Multiple sources of ideas (diversity)

- Efficient delivery mechanisms

- Quick deployment capabilities

- Customer-driven prioritization

The next priority is mapping the demand for ideas to the supply of ideas. This is where much of our innovation system is failing. In supply chain optimisation a large effort is spent on understanding current stock and managing resources so that supply matches demand. This includes shaping the supply as well as managing it.

The objective is to create a system that can generate, evaluate, and deploy ideas efficiently and effectively, while ensuring that people’s needs and preferences are met. The customer here depends on the context - it could be the public, it could be a business, it could be a government department but very often it’s individual citizens. The loss of their voice in the innovation economy is a trigger for the gap between the innovation supply (at a macro level) and the innovation demand (at a micro level).

AI cannot replace atomic human

Figure: Opinion piece in the FT that describes the idea of a social flywheel to drive the targeted growth we need in AI innovation.

New Attention Flywheel

Figure: The attention flywheel focusses on reinvesting human capital.

Example: Data Science Africa

Data Science Africa is a grass roots initiative that focuses on capacity building to develop ways of solving on-the-ground problems in health, education, transport and conservation in a way that is grounded in local needs and capabilities.

Data Science Africa

Figure: Data Science Africa https://datascienceafrica.org is a ground up initiative for capacity building around data science, machine learning and artificial intelligence on the African continent.

Figure: Data Science Africa meetings held up to October 2021.

Data Science Africa is a bottom up initiative for capacity building in data science, machine learning and artificial intelligence on the African continent.

As of May 2023 there have been eleven workshops and schools, located in seven different countries: Nyeri, Kenya (twice); Kampala, Uganda; Arusha, Tanzania; Abuja, Nigeria; Addis Ababa, Ethiopia; Accra, Ghana; Kampala, Uganda; Kimberley, South Africa (virtual); and Kigali, Rwanda.

The main notion is end-to-end data science. For example, going from data collection in the farmer’s field to decision making in the Ministry of Agriculture. Or going from malaria disease counts in health centers to medicine distribution.

The philosophy is laid out in (Lawrence, 2015). The key idea is that the modern information infrastructure presents new solutions to old problems. Modes of development change because less capital investment is required to take advantage of this infrastructure. The philosophy is that local capacity building is the right way to leverage these challenges in addressing data science problems in the African context.

Data Science Africa is now a non-governmental organization registered in Kenya. The organising board of the meeting is entirely made up of scientists and academics based on the African continent.

Figure: The lack of existing physical infrastructure on the African continent makes it a particularly interesting environment for deploying solutions based on the information infrastructure. The idea is explored more in this Guardian op-ed on how Africa can benefit from the data revolution.

Guardian article on Data Science Africa

Example: Cambridge Approach

ai@cam is the flagship University mission that seeks to address these challenges. It recognises that development of safe and effective AI-enabled innovations requires this mix of expertise from across research domains, businesses, policy-makers, civil society, and from affected communities. ai@cam is setting out a vision for AI-enabled innovation that benefits science, citizens and society.

ai@cam

The ai@cam vision is being achieved in a manner that is modelled on other grass roots initiatives like Data Science Africa. Through leveraging the University’s vibrant interdisciplinary research community, ai@cam has formed partnerships between researchers, practitioners, and affected communities that embed equity and inclusion. It is developing new platforms for innovation and knowledge transfer. It is delivering innovative interdisciplinary teaching and learning for students, researchers, and professionals. It is building strong connections between the University and national AI priorities.

We are working across the University to empower the diversity of expertise and capability we have to focus on these broad societal problems. In April 2022 we shared an ai@cam vision document that outlines these challenges for the University.

The University operates as both an engine of AI-enabled innovation and steward of those innovations.

AI is not a universal remedy. It is a set of tools, techniques and practices that correctly deployed can be leveraged to deliver societal benefit and mitigate social harm.

The initiative was funded in November 2022 with a £5M investment from the University.

The progress made so far has been across the University community. We have successfully engaged with members spanning more than 30 departments and institutes, bringing together academics, researchers, start-ups, and large businesses to collaborate on AI initiatives. The program has already supported 6 new funding bids and launched five interdisciplinary AI-deas projects that bring together diverse expertise to tackle complex challenges. The establishment of the Policy Lab has created a crucial bridge between research and policy-making. Additionally, through the Pioneer program, we have initiated 46 computing projects that are helping to build our technical infrastructure and capabilities.

How ai@cam is Addressing Innovation Challenges

1. Bridging Macro and Micro Levels

Challenge: There is often a disconnect between high-level AI research and real-world needs that must be addressed.

The AI-deas initiative represents an effort to bridge this gap by funding interdisciplinary projects that span 19 departments across 6 schools. This ensures diverse perspectives are brought to bear on pressing challenges. Projects focusing on climate change, mental health, and language equity demonstrate how macro-level AI capabilities can be effectively applied to micro-level societal needs.

Challenge: Academic insights often fail to translate into actionable policy changes.

The Policy Lab initiative addresses this by creating direct connections between researchers, policymakers, and the public, ensuring academic insights can influence policy decisions. The Lab produces accessible policy briefs and facilitates public dialogues. A key example is the collaboration with the Bennett Institute and Minderoo Centre, which resulted in comprehensive policy recommendations for AI governance.

2. Addressing Data, Compute, and Capability Gaps

Challenge: Organizations struggle to balance data accessibility with security and privacy concerns.

The data intermediaries initiative establishes trusted entities that represent the interests of data originators, helping to establish secure and ethical frameworks for data sharing and use. Alongside approaches for protecting data we need to improve our approach to processing data. Careful assessment of data quality and organizational data maturity ensures that data can be shared and used effectively. Together these approaches help to ensure that data can be used to serve science, citizens and society.

Challenge: Many researchers lack access to necessary computational resources for modern research.

The HPC Pioneer Project addresses this by providing access to the Dawn supercomputer, enabling 46 diverse projects across 20 departments to conduct advanced computational research. This democratization of computing resources ensures that researchers from various disciplines can leverage high-performance computing for their work. The ai@cam project also supports the ICAIN initiative, further strengthening the computational infrastructure available to researchers with a particular focus on emerging economies.

Challenge: There is a significant skills gap in applying AI across different academic disciplines.

The Accelerate Programme for Scientific Discovery addresses this through a comprehensive approach to building AI capabilities. Through a tiered training system that ranges from basic to advanced levels, the programme ensures that domain experts can develop the AI skills relevant to their field. The initiative particularly emphasizes peer-to-peer learning, creating sustainable communities of practice where researchers can share knowledge and experiences through “AI Clubs”.

The Accelerate Programme

Figure: The Accelerate Programme for Scientific Discovery covers research, education and training, engagement. Our aim is to bring about a step change in scientific discovery through AI. http://science.ai.cam.ac.uk

We’re now in a new phase of the development of computing, with rapid advances in machine learning. But we see some of the same issues – researchers across disciplines hope to make use of machine learning, but need access to skills and tools to do so, while the field of machine learning itself will need to develop new methods to tackle some complex, ‘real world’ problems.

It is with these challenges in mind that the Computer Lab has started the Accelerate Programme for Scientific Discovery. This new Programme is seeking to support researchers across the University to develop the skills they need to be able to use machine learning and AI in their research.

To do this, the Programme is developing three areas of activity:

- Research: we’re developing a research agenda that develops and applies cutting edge machine learning methods to scientific challenges, with three Accelerate Research fellows working directly on issues relating to computational biology, psychiatry, and string theory. While we’re concentrating on STEM subjects for now, in the longer term our ambition is to build links with the social sciences and humanities.

Progress so far includes:

- Recruited a core research team working on the application of AI in mental health, bioinformatics, healthcare, string theory, and complex systems.

- Created a research agenda and roadmap for the development of AI in science.

- Funded interdisciplinary projects, e.g. in the first round:

  - Antimicrobial resistance in farming

  - Quantifying Design Trade-offs in Electricity-generation-focused Tokamaks using AI

  - Automated preclinical drug discovery in vivo using pose estimation

  - Causal Methods for Environmental Science Workshop

  - Automatic tree mapping in Cambridge

  - Acoustic monitoring for biodiversity conservation

  - AI, mathematics and string theory

  - Theoretical, Scientific, and Philosophical Perspectives on Biological Understanding in the age of Artificial Intelligence

  - AI in pathology: optimising a classifier for digital images of duodenal biopsies

- Teaching and learning: building on the teaching activities already delivered through University courses, we’re creating a pipeline of learning opportunities to help PhD students and postdocs better understand how to use data science and machine learning in their work.

Progress so far includes:

- Brought over 250 participants from over 30 departments through tailored data science and machine learning for science training (Data Science Residency and Machine Learning Academy);

- Convened workshops with over 80 researchers across the University on the development of data pipelines for science;

- Delivered University courses to over 100 students in Advanced Data Science and Machine Learning and the Physical World;

- Provided an online training course in Python and Pandas, accessed by over 380 researchers.

- Engagement: we hope that Accelerate will help build a community of researchers working across the University at the interface of machine learning and the sciences, helping to share best practice and new methods, and support each other in advancing their research. Over the coming years, we’ll be running a variety of events and activities in support of this.

Progress so far includes:

- Launched a Machine Learning Engineering Clinic that has supported over 40 projects across the University with MLE troubleshooting and advice;

- Hosted and participated in events reaching over 300 people in Cambridge;

- Led international workshops at Dagstuhl and Oberwolfach, convening over 60 leading researchers;

- Engaged over 70 researchers through outreach sessions and workshops with the School of Clinical Medicine, the Faculty of Education, Cambridge Digital Humanities and the School of Biological Sciences.

3. Stakeholder Engagement and Feedback Mechanisms

Challenge: AI development often proceeds without adequate incorporation of public perspectives and concerns.

Our public dialogue work, conducted in collaboration with the Kavli Centre for Ethics, Science, and the Public, creates structured spaces for public dialogue about AI’s potential benefits and risks. The approach ensures that diverse voices and perspectives are heard and considered in AI development.

Challenge: AI initiatives often fail to align with diverse academic needs across institutions.

Cross-University Workshops serve as vital platforms for alignment, bringing together faculty and staff from different departments to discuss AI teaching and learning strategies. By engaging professional services staff, the initiative ensures that capability building extends beyond academic departments to support staff who play key roles in implementing and maintaining AI systems.

4. Flexible and Adaptable Approaches

Challenge: Traditional rigid, top-down research agendas often fail to address real needs effectively.

The AI-deas Challenge Development program empowers researchers to identify and propose challenge areas based on their expertise and understanding of field needs. Through collaborative workshops, these initial ideas are refined and developed, ensuring that research directions emerge organically from the academic community while maintaining alignment with broader strategic goals.

5. Phased Implementation and Realistic Planning

Challenge: Ambitious AI initiatives often fail due to unrealistic implementation timelines and expectations.

The overall strategy emphasizes careful, phased deployment to ensure sustainable success. Beginning with pilot programs like AI-deas and the Policy Lab, the approach allows for testing and refinement of methods before broader implementation. This measured approach enables the incorporation of lessons learned from early phases into subsequent expansions.

6. Independent Oversight and Diverse Perspectives

Challenge: AI initiatives often lack balanced guidance and oversight from diverse perspectives.

The Steering Group provides crucial oversight through representatives from various academic disciplines and professional services. Working with a cross-institutional team, it ensures balanced decision-making that considers multiple perspectives. The group maintains close connections with external initiatives like ELLIS, ICAIN, and Data Science Africa, enabling the university to benefit from and contribute to broader AI developments.

7. Addressing the Innovation Supply Chain

Challenge: Academic innovations often struggle to connect with and address industry needs effectively.

The Industry Engagement initiative develops meaningful industrial partnerships through collaboration with the Strategic Partnerships Office, helping translate research into real-world solutions. The planned sciencepreneurship initiative aims to create a structured pathway from academic research to entrepreneurial ventures, helping ensure that innovations can effectively reach and benefit society.

Innovation Economy Conclusion

ai@cam’s approach aims to address the macro-micro disconnects in AI innovation through several key strategies. We are building bridges between macro and micro levels, fostering interdisciplinary collaboration, engaging diverse stakeholders and voices, and providing crucial resources and training. Through these efforts, ai@cam is working to create a more integrated and effective AI innovation ecosystem.

Our implementation approach emphasizes several critical elements learned from past IT implementation failures. We focus on flexibility to adapt to changing needs, phased rollout of initiatives to manage risk, establishing continuous feedback loops for improvement, and maintaining a learning mindset throughout the process.

Looking to the future, we recognize that AI technologies and their applications will continue to evolve rapidly. This evolution requires strategic agility and a continued focus on effective implementation. We will need to remain adaptable, continuously assessing and adjusting our strategies while working to bridge capability gaps between high-level AI capabilities and on-the-ground implementation challenges.

The Atomic Human

Thanks!

For more information on these subjects and more you might want to check the following resources.

- book: The Atomic Human

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com