NIPS Reviewer Recruitment and 'Experience'

Triggered by a question from Christoph Lampert as a comment on a previous blog post on reviewer allocation, I thought I’d post about how we did reviewer recruitment, and what the profile of reviewer ‘experience’ is, as defined by their NIPS track record.

I wrote this blog post, but it ended up being quite detailed, so Corinna suggested I put the summary of reviewer recruitment first, which makes a lot of sense. If you are interested in the details of our reviewer recruitment, please read on to the section below ‘Experience of the Reviewing Body’.

Questions

As a summary, I’ve imagined two questions and given answers below:

- I’m an Area Chair for NIPS, how did I come to be invited? You were personally known to one of the Program Chairs as an expert in your domain who had good judgement about the type and quality of papers we are looking to publish at NIPS. You have a strong publication track record in your domain. You were known to be reliable and responsive. You may have a track record of workshop organization in your domain and/or experience in area chairing previously at NIPS or other conferences. Through these activities you have shown community leadership.

- I’m a reviewer for NIPS, how did I come to be invited?

You could have been invited for one of several reasons:

- you were a reviewer for NIPS in 2013

- you were a reviewer for AISTATS in 2012

- you were personally recommended by an Area Chair or a Program Chair

- you have been on a Program Committee (i.e. you were an Area Chair) at a leading international conference in recent years (specifically NIPS since 2000, ICML since 2008, AISTATS since 2011).

- you have published 2 or more papers at NIPS since 2007

- you published at NIPS in either 2012 or 2013 and your publication track record was personally reviewed and approved by one of the Program Chairs.

Experience of The Reviewing Body

That was the background to Reviewer and Area Chair recruitment, and it is also covered below, in much more detail than perhaps anyone could wish for! Now, for those of you that have gotten this far, we can try and look at the result in terms of one way of measuring reviewer experience. Our aim was to increase the number of reviewers and try and maintain or increase the quality of the reviewing body. Of course quality is subjective, but we can look at things such as reviewer experience in terms of how many NIPS publications they have had. Note that we have purposefully selected many reviewers and area chairs who have never previously published at NIPS, so this is clearly not the only criterion for experience, but it is one that is easily available to us and given Christoph’s question, the statistics may be of wider interest.

Reviewer NIPS Publication Record

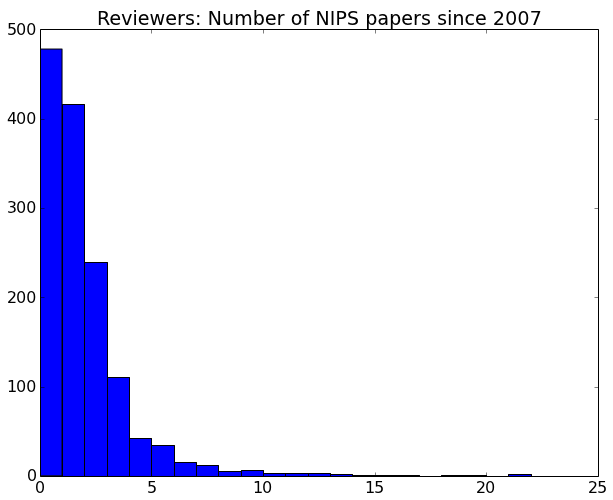

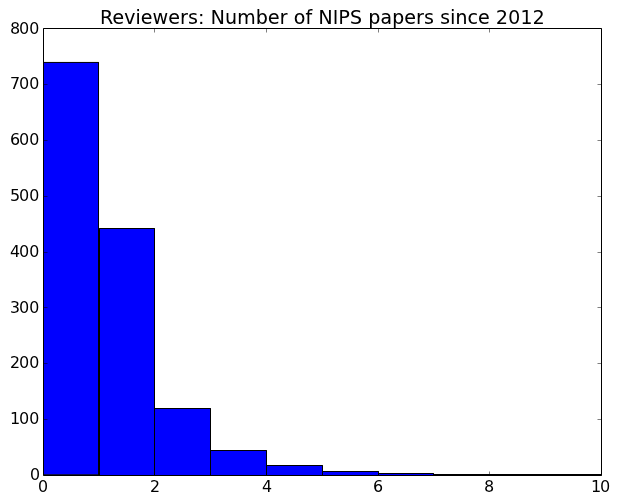

Firstly we give the histograms for cumulative reviewer publications. We plot two histograms, publications since 2007 (to give an idea of long term trends) and publications since 2012 (to give an idea of recent trends).

Histogram of NIPS 2014 reviewers’ publication records since 2007.

Our most prolific reviewer has published 22 times at NIPS since 2007! That’s an average of over 3 per year (for comparison, I’ve published 7 times at NIPS since 2007).

Looking more recently we can get an idea of the number of NIPS publications reviewers have had since 2012.

Histogram of NIPS 2014 reviewers’ publication records since 2012.

Impressively the most prolific reviewer has published 10 papers at NIPS over the last two years, and intriguingly it is not the same reviewer that has published 22 times since 2007. The mode of 0 publications is unsurprising, and comparing the histograms it looks like about 200 of our reviewing body haven’t published in the last two years, but have published at NIPS since 2007.
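As a rough illustration of how the two histograms can be compared, here is a minimal sketch on made-up data. The reviewer names, the `papers_by_reviewer` structure and the `publication_histogram` helper are all hypothetical, and this is not the code used to produce the plots. The idea is that anyone who appears in the zero bin since 2012 but not in the zero bin since 2007 must have published between 2007 and 2011 only.

```python
from collections import Counter

# Hypothetical toy data: reviewer -> years in which they had a NIPS paper.
papers_by_reviewer = {
    "reviewer_a": [2008, 2009, 2012, 2013],
    "reviewer_b": [2009, 2010],
    "reviewer_c": [],
    "reviewer_d": [2013],
    "reviewer_e": [2007],
}


def publication_histogram(papers, since):
    """Map 'number of papers since `since`' to 'number of reviewers with that count'."""
    counts = (
        sum(1 for year in years if year >= since) for years in papers.values()
    )
    return Counter(counts)


hist_2007 = publication_histogram(papers_by_reviewer, since=2007)
hist_2012 = publication_histogram(papers_by_reviewer, since=2012)

# Reviewers with papers since 2007 but none since 2012:
# the amount by which the zero bin grows between the two histograms.
lapsed = hist_2012[0] - hist_2007[0]
```

On the toy data, `reviewer_b` and `reviewer_e` are the two 'lapsed' reviewers. The figure of about 200 quoted above is the same difference read off the real histograms.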

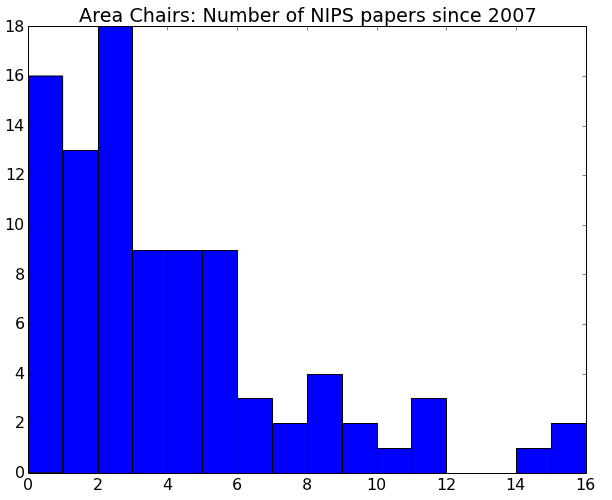

Area Chair Publication Record

We have got similar plots for the Area Chairs. Here is the histogram since 2007.

Histogram of NIPS 2014 Area Chairs’ publication records since 2007.

Note that we’ve selected 16 Area Chairs who haven’t published at NIPS before. People who aren’t regulars at NIPS may be surprised at this, but I think it reflects the openness of the community to other ideas and new directions for research. NIPS has always been a crossroads between traditional fields, and that is one of its great charms. As a result, NIPS publication record is a poor proxy for ‘experience’ where many of our area chairs are concerned.

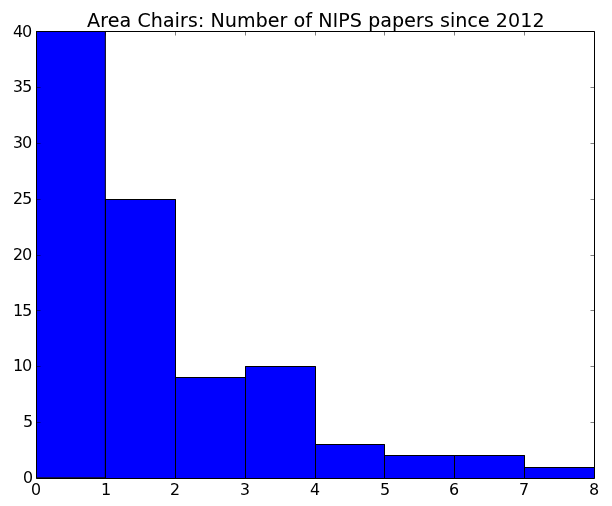

Looking at the more recent publication track record for Area Chairs we have the following histogram.

Histogram of NIPS 2014 Area Chairs’ publication records since 2012.

Here we see that a considerable portion of our Area Chairs haven’t published at NIPS in the last two years. I also find this unsurprising. I’ve only published one paper at NIPS since then (that was NIPS 2012; the group’s NIPS 2013 submissions were both rejected, although I think my overall ‘hit rate’ for NIPS success is still around 50%).

Details of the Recruitment Process

Below are all the gritty details in terms of how things actually panned out in practice for reviewer recruitment. This might be useful for other people chairing conferences in the future.

Area Chair Recruitment

The first stage is invitation of area chairs. To ensure we got the correct distribution of expertise in area chairs, we invited in waves. Max and Zoubin gave us information about the subject distribution of the previous year’s NIPS submissions. This then gave us a rough number of area chairs required for each area. We had compiled a list of 99 candidate area chairs by mid January 2014, with coverage matching the subject coverage from the previous year’s conference.

The Area Chairs are experts in their field. The majority of the Area Chairs are people that either Corinna or I have worked with directly or indirectly; others have a long track record of organising workshops and demonstrating thought leadership in their subject area. It’s their judgement on which we’ll be relying for paper decisions. As capable and active researchers they are in high demand for a range of activities (journal editing, program chairing other conferences, organizing workshops etc). This, combined with the demands of everyday life (including family illnesses, newly born children etc), means that not everyone can accept the demands on time that being an area chair makes. As well as being involved in reviewer recruitment, assignment and paper discussion, area chairs need to be available for video conference meetings to discuss their allocation and make final recommendations on their papers. All this falls across periods of the summer when many are on vacation.

Of our original list of 99 invites, 56 were available to help out. This then allowed us to refocus on areas where we’d missed out on Area Chairs. By early March we had a list of 57 further candidate area chairs. Of these, 36 were available to help out. Finally we recruited a further 3 Area Chairs in early April, targeted at areas where we felt we were still short of expertise.

Reviewer Recruitment

Reviewer recruitment consists of identifying suitable people and inviting them to join the reviewing body. This process is completed in collaboration with the Area Chairs, who nominate reviewers in their domains. For NIPS 2014 we were targeting 1400 reviewers to account for our duplication of papers and the anticipated increase in submissions. There is no unified database of machine learning expertise, and the history of who reviewed in what years for NIPS is currently not recorded. This means that, year to year, we are typically only provided with those people that agreed to review in the previous year as our starting point for compiling this list. From February onwards Corinna and I focussed on increasing this starting number. NIPS 2013 had 1120 reviewers and 80 area chairs, and these names formed the core starting point for invitations. Further, since I program chaired AISTATS in 2012, we also had the list of reviewers who’d agreed to review for that conference (400 reviewers, 28 area chairs). These names were also added to our initial list of candidate reviewers (although, of course, some of these names had already agreed to be area chairs for NIPS 2014 and there were many duplicates in the lists).

Sustaining Expertise in the Reviewing Body

A major concern for Corinna and me was to ensure that we had as much expertise in our reviewing body as possible. Because of the way that reviewer names are propagated from year to year, and the fact that more senior people tend to be busier and therefore more likely to decline, many well known researcher names weren’t in this initial list. To rectify this we took from the web the lists of Area Chairs for all previous NIPS conferences going back to 2000, all ICML conferences going back to 2008 and all AISTATS conferences going back to 2011. We could have extended this search to COLT, COSYNE and UAI also.

Back in 2000 there were only 13 Area Chairs at NIPS; by the time that I first did the job in 2005 there were 19 Area Chairs. Corinna and I worked together on the last Program Committee to have a physical meeting, in 2006, when John Platt was Program Chair. I remember having an above-average allocation of about 50-60 papers as Area Chair that year. I had papers on Gaussian processes (about 20) and many more in dimensionality reduction, mainly on spectral approaches. Corinna also had a lot of papers that year because she was dealing with kernel methods. I think a more typical load was 30-40, though, and reviewer load was probably around 6-8. The physical meeting consisted of two days in a conference room discussing every paper in turn as a full program committee. That was also the last year of a single program chair. The early NIPS program committees mainly read as a “who’s who of machine learning”, and it sticks in my mind how carefully each chair went through each of the papers that were around the borderline of acceptance. Many papers were re-read at that meeting.

Overall 160 new names were added to the list of candidate reviewers from incorporating the Area Chairs from these meetings, giving us around 1600 candidate reviewers in total.

Note that the sort of reviewing expertise we are after is not only the technical expertise necessary to judge the correctness of the paper. We are looking for reviewers that can judge whether the work is going to be of interest to the wider NIPS community and whether the ideas in the work are likely to have significant impact. The latter two areas are perhaps more subjective, and may require more experience than the first. However, the quality of papers submitted to NIPS is very high, and the number that are technically correct is a very large portion of those submitted. The objective of NIPS is not then to select those papers that are the ‘most technical’, but to select those papers that are likely to have an influence on the field. This is where understanding of likely impact is so important. To this end, Max and Zoubin introduced an ‘impact’ score, with the precise intent of reminding reviewers to think about this aspect. However, if the focus is too much on the technical side, then maybe a paper that is highly complex from a technical stand-point, but less likely to have an influence on the direction of the field, is more likely to be accepted than a paper that contains a potentially very influential idea that doesn’t present a strong technical challenge. Ideally then, a paper should have a distribution of reviewers who aren’t purely experts in the particular technical domain from where the paper arises, but also informed experts in the wider context of where the paper sits. The role of the Area Chair is also important here.

The next step in reviewer recruitment was to involve the Area Chairs in adding to the list in areas where we had missed people. This is also an important route for new and upcoming NIPS researchers to become involved in reviewing. We provided Area Chairs with access to the list of candidate reviewers and asked them to add names of experts whom they would like to recruit, but who weren’t currently in the list. This led to a further 220 names.

At this point we had also begun to invite reviewers. Reviewer invitation was done in waves. We started with the first wave of around 1600-1700 invites in mid-April. At that point, the broad form of the Program Committee was already resolved. Acceptance rates for reviewer invites indicated that we weren’t going to hit our target of 1400 reviewers with our candidate list. By the end of April we had around 1000 reviewers accepted, but we were targeting another 400 reviewers to ensure we could keep reviewer load low.

A final source of candidates was from Chris Hiestand. Chris maintains the NIPS database of authors and presenters on behalf of the NIPS foundation. This gave us another potential source of reviewers. We considered all authors that had 2 or more NIPS papers since 2007. We’d initially intended to restrict this number to 3, but that gained us only 91 more new candidate reviewers (because most of the names were in our candidate list already). Relaxing this constraint to 2 led to 325 new candidate reviewers. These additional reviewers were invited at the end of April. However, even with this group, we were likely to fall short of our target.
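The filtering step described here can be sketched as a count-and-threshold over authorship records. This is only an illustration under assumed inputs: the `(author, year)` record format, the toy names and the `new_candidates` helper are hypothetical, and the real NIPS author database will look different.

```python
from collections import Counter


def new_candidates(authorships, existing, min_papers, since=2007):
    """Authors with at least `min_papers` papers since `since` who are not yet candidates.

    authorships: iterable of (author, year) pairs, one pair per authored paper.
    existing: set of author names already on the candidate list.
    """
    counts = Counter(author for author, year in authorships if year >= since)
    return {
        author
        for author, n in counts.items()
        if n >= min_papers and author not in existing
    }


# Hypothetical toy records.
authorships = [
    ("Ada", 2008), ("Ada", 2010), ("Ada", 2013),
    ("Ben", 2009), ("Ben", 2012),
    ("Cy", 2011),
    ("Dee", 2007), ("Dee", 2008), ("Dee", 2009),
    ("Eve", 2005), ("Eve", 2012),
]
existing = {"Dee"}  # already on the candidate list

at_least_3 = new_candidates(authorships, existing, min_papers=3)
at_least_2 = new_candidates(authorships, existing, min_papers=2)
```

Lowering `min_papers` from 3 to 2 can only grow the result, which is the effect we saw when the threshold was relaxed. Note that matching on raw author names, as this sketch does, ignores the name-variant problem discussed later in the post.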

Our final group of reviewers came from authors who published either at NIPS 2013 or NIPS 2012. However, authors that have published only one paper are not necessarily qualified to review at NIPS. For example, the author may be a collaborator from another field. There were 697 authors who had one NIPS paper in 2012 or 2013 and were not in our current candidate list. For these 697 authors, we felt it was necessary to go through each author individually, checking their track record through web searches (DBLP and Google Scholar as well as web pages) and ensuring they had the necessary track record to review for NIPS. This process resulted in an additional 174 candidate reviewer names. The remainder we either were unable to identify on the web (169 people) or they had a track record where we couldn’t be confident about their ability to review for NIPS without a personal recommendation (369 people). This final wave of invites went out at the beginning of May and also included new reviewer suggestions from Area Chairs and invites to candidate Area Chairs who had not been able to commit to Area Chairing, but may have been able to commit to reviewing. Again, we wanted to ensure the expertise of the reviewing body was as highly developed as possible.

This meant that by the submission deadline we had 1390 reviewers in the system. As of 15th July this number has increased slightly. This is because during paper allocation, Area Chairs have recruited additional specific reviewers to handle particular papers where they felt that the available reviewers didn’t have the correct expertise. This means that currently, we have 1400 reviewers exactly. This total number of reviewers comes from around 2255 invitations to review.

Overall, reviewer recruitment took up a very large amount of time, distributed over many weeks. Keeping track of who had been invited already was difficult, because we didn’t have a unique ID for our candidate reviewers. We have a local SQLite database that indexes on email, and we try to check for matches based on names as well. Most of these checks are done in Python code which is now available on the GitHub repository here, along with IPython notebooks that did the processing (with identifying information removed). Despite care taken to ensure we didn’t add potential reviewers twice to our database, several people received two invites to review. Very often, they also didn’t notice that they were separate invites, so they agreed to review twice for NIPS. Most of these duplications were picked up at some point before paper allocation, and they tended to arise for people whose names could be rendered in multiple ways (e.g. because of accents) or who have multiple email addresses (e.g. due to change of affiliation).
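To give a flavour of the kind of check involved, here is a minimal sketch of duplicate detection against a SQLite table keyed on email, with an accent-insensitive name key as a second line of defence. It is not the code from our repository: the table layout, the `name_key` and `add_candidate` helpers and the example people are all hypothetical.

```python
import sqlite3
import unicodedata


def name_key(name):
    """Lower-case, strip accents and collapse whitespace so name variants compare equal."""
    decomposed = unicodedata.normalize("NFKD", name)
    without_accents = "".join(
        ch for ch in decomposed if not unicodedata.combining(ch)
    )
    return " ".join(without_accents.lower().split())


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE reviewers (email TEXT PRIMARY KEY, name TEXT, name_key TEXT)"
)


def add_candidate(conn, name, email):
    """Insert a candidate unless they look like someone already present.

    Returns the emails of existing rows that match on email or on name key;
    an empty list means the candidate was new and has been inserted.
    """
    email = email.strip().lower()
    key = name_key(name)
    rows = conn.execute(
        "SELECT email FROM reviewers WHERE email = ? OR name_key = ?",
        (email, key),
    ).fetchall()
    matches = [row[0] for row in rows]
    if not matches:
        conn.execute(
            "INSERT INTO reviewers VALUES (?, ?, ?)", (email, name, key)
        )
    return matches


# Same (made-up) person, different spelling and different affiliation email.
first = add_candidate(conn, "José Pérez", "jose@uni-a.example")
second = add_candidate(conn, "Jose Perez", "jperez@uni-b.example")
```

Here the second call is flagged as a likely duplicate of the first even though the emails differ. A key like this still misses plenty of cases: letters that do not decompose into a base letter plus accent, swapped name order, initials, and transliterations. It can also wrongly merge two different people who share a name, so a flagged match is a prompt for a manual check rather than a decision.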

Firstly, NIPS uses the CMT system for conference management. In an ideal world, choice of management system shouldn’t dictate how you do things, but in practice particularities of the system can affect our choices. CMT doesn’t store a unique profile for conference reviewers (unlike, for example, EasyChair, which stores every conference you’ve submitted to or reviewed/chaired for). This means that from year to year information about the previous year’s reviewers isn’t necessarily passed in a consistent way between program chairs. Corinna and I requested that the CMT set up for our year copied across the reviewers from NIPS 2013 along with their subject areas and conflicts to try and alleviate this. The NIPS program committee in 2013 consisted of 1120 reviewers and 80 area chairs. Corinna and I set a target of 1400 reviewers and 100 area chairs. This was to account for (a) increase in submissions of perhaps 10% and (b) duplication of papers for independent reviewing at a level of around 10%.
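One way to see where targets of this size come from is to scale the 2013 committee by both factors. This is a back-of-envelope sketch, and it assumes that workload grows in proportion to the number of paper copies to be reviewed and that per-person load stays the same as in 2013. The variable names are only for illustration.

```python
reviewers_2013 = 1120
area_chairs_2013 = 80

submission_growth = 0.10  # (a) anticipated increase in submissions
duplication = 0.10        # (b) share of papers reviewed twice, independently

# Assumption: committee size should scale with the number of paper copies.
scale = (1 + submission_growth) * (1 + duplication)

reviewers_needed = reviewers_2013 * scale      # roughly 1355
area_chairs_needed = area_chairs_2013 * scale  # roughly 97
```

Under these assumptions the scaled figures come out a little below 1400 reviewers and 100 area chairs, so the round-number targets leave a small margin.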