Get Your NIPS Reviews in!

This time last year Corinna and I were starting to pull NIPS reviews together, making sure that each paper had three reviews before the start of the rebuttal stage. It’s a lot of work.

As many will know, we ran an experiment on the consistency of the review process. We are still working on the full write-up, and the delay is down to me: I’ve had the draft on my desk for too long.

However, at this point it’s worth highlighting one of the conclusions and publishing some of the analysis.

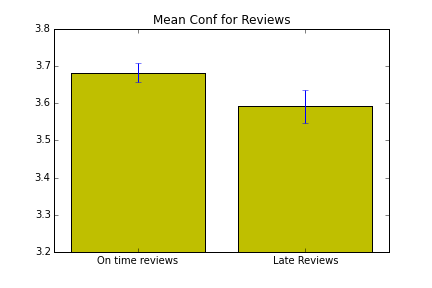

Late reviews generally mess up the process. Statistically, we found that they tend to be shorter, to be lower in confidence, and to rate papers as higher quality.

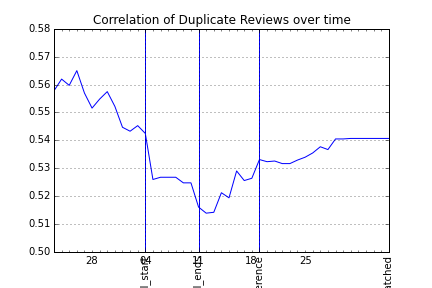

In each case the magnitude of the effect was small but statistically significant. The whiskers in the plots show two standard errors. Further, we could see from our duplication experiment (see also here for other analysis) that the correlation between independent re-reviews of papers dropped during the period when late reviews came in.
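
For anyone who wants to draw whiskers like these for their own data, here is a minimal sketch. It assumes the whiskers are the group mean plus or minus two standard errors, which is one reading of the description above and not necessarily what the notebook does. The function name `mean_with_whiskers` and the review lengths are made up for illustration and are not NIPS data.

```python
import math
import statistics


def mean_with_whiskers(values, n_se=2.0):
    """Return (mean, lower, upper), with whiskers n_se standard errors wide.

    Hypothetical helper: the standard error is estimated as the sample
    standard deviation divided by the square root of the sample size.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to estimate a standard error")
    mean = statistics.fmean(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)
    return mean, mean - n_se * standard_error, mean + n_se * standard_error


# Made-up review lengths in characters, for illustration only.
on_time = [1800, 2100, 1950, 2400, 2250, 2000]
late = [1500, 1700, 1600, 1900, 1450, 1650]

for label, lengths in (("on time", on_time), ("late", late)):
    mean, lower, upper = mean_with_whiskers(lengths)
    print(f"{label}: mean={mean:.0f}, whiskers=[{lower:.0f}, {upper:.0f}]")
```

If the whiskers of two groups do not overlap, that is a quick visual hint that the difference is unlikely to be noise, though it is not a substitute for a proper test.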

So please help out Daniel and Masashi by spending time on your reviews, not rushing them, but submitting them on time!

For more details of the analysis you can check this Jupyter notebook, which revisits the original analysis that Corinna did for the Program Chairs’ presentation at NIPS.