Leading with AI: Strategic Decision Making in the Age of Human-Analogue Machines

Abstract

As AI technologies reshape the banking landscape, senior executives face questions about balancing automation with human judgment, information flows, and organizational decision-making. This keynote builds on the ideas in The Atomic Human to explore the practical implications of AI for financial institutions through the lens of information topography, decision-making structures, and human-AI collaboration. Drawing from real-world examples and insights from the book, we’ll explore how banks can strategically implement AI while maintaining human agency, intelligent accountability, and organizational effectiveness in an industry where trust and judgment are paramount.

Introduction: The Age of Human-Analogue Machines

Henry Ford’s Faster Horse

Figure: A 1925 Ford Model T built at Henry Ford’s Highland Park Plant in Highland Park, Michigan. This example now resides in Australia, owned by the founder of FordModelT.net. From https://commons.wikimedia.org/wiki/File:1925_Ford_Model_T_touring.jpg

It’s said that Henry Ford’s customers wanted “a faster horse.” If Henry Ford were selling us artificial intelligence today, what would the customer call for, “a smarter human”? That’s certainly the picture of machine intelligence we find in science fiction narratives, but the reality of what we’ve developed is much more mundane.

Car engines produce prodigious power from petrol. Machine intelligences deliver decisions derived from data. In both cases the scale of consumption enables a speed of operation that is far beyond the capabilities of their natural counterparts. Unfettered energy consumption has consequences in the form of climate change. Does unbridled data consumption also have consequences for us?

If we devolve decision making to machines, we depend on those machines to accommodate our needs. If we don’t understand how those machines operate, we lose control over our destiny. Our mistake has been to see machine intelligence as a reflection of our intelligence. We cannot understand the smarter human without understanding the human. To understand the machine, we need to better understand ourselves.

As we enter an era where machines increasingly mimic tasks traditionally undertaken by humans, senior executives in banking face fundamental transformations in how their organizations function. The challenges aren’t merely operational - they require us to reimagine the very nature of work, human capital, and organizational culture in an industry where trust and judgment are the foundation of success.

The Atomic Human

Figure: The Atomic Eye. By slicing away aspects of the human that we used to believe to be unique to us, but are now the preserve of the machine, we learn something about what it means to be human.

The development of what some are calling intelligence in machines raises questions around what machine intelligence means for our intelligence. The idea of the atomic human is derived from Democritus’s atomism.

In the fifth century BCE the Greek philosopher Democritus posed a question about our physical universe. He imagined cutting physical matter into pieces in a repeated process: cutting a piece, then taking one of the cut pieces and cutting it again so that each time it becomes smaller and smaller. Democritus believed this process had to stop somewhere, that we would be left with an indivisible piece. The Greek word for indivisible is atom, and so this theory was called atomism.

The Atomic Human considers the same question, but in a different domain, asking: As the machine slices away portions of human capabilities, are we left with a kernel of humanity, an indivisible piece that can no longer be divided into parts? Or does the human disappear altogether? If we are left with something, then that uncuttable piece, a form of atomic human, would tell us something about our human spirit.

See Lawrence (2024) atomic human, the p. 13.

Our fascination with AI stems from the perceived uniqueness of human intelligence. We believe it’s what differentiates us. But to understand how AI will reshape banking, we first need to understand what makes human intelligence unique and how it differs from machine intelligence.

Understanding Human vs Machine Intelligence

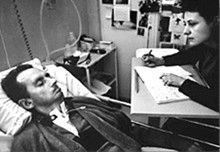

The Diving Bell and the Butterfly

Figure: The Diving Bell and the Butterfly is the autobiography of Jean Dominique Bauby.

The Diving Bell and the Butterfly is the autobiography of Jean Dominique Bauby. Jean Dominique, the editor of French Elle magazine, suffered a major stroke at the age of 43 in 1995. The stroke paralyzed him and rendered him speechless. He was only able to blink his left eyelid; he had become a sufferer of locked-in syndrome.

See Lawrence (2024) Le Scaphandre et le papillon (The Diving Bell and the Butterfly) p. 10–12.

E S A R I N T U L

O M D P C F B V

H G J Q Z Y X K W

Figure: The ordering of the letters that Bauby used for writing his autobiography.

How could he do that? Well, first, they set up a mechanism where he could scan across letters and blink at the letter he wanted to use. In this way, he was able to write each letter.

It took him 10 months of four hours a day to write the book. Each word took two minutes to write.

Imagine doing all that thinking, but so little speaking, having all those thoughts and so little ability to communicate.

One challenge for the atomic human is that we are all in that situation. While not as extreme as for Bauby, when we compare ourselves to the machine, we all have a locked-in intelligence.

Figure: Jean Dominique Bauby was the Editor in Chief of the French Elle Magazine. He suffered a stroke that destroyed his brainstem, leaving him only capable of moving one eye. Jean Dominique became a victim of locked-in syndrome.

Incredibly, Jean Dominique wrote his book after he became locked in.

The idea behind embodiment factors is that we are all in that situation. While not as extreme as for Bauby, we all have somewhat of a locked in intelligence.

See Lawrence (2024) Bauby, Jean Dominique p. 9–11, 18, 90, 99-101, 133, 186, 212–218, 234, 240, 251–257, 318, 368–369.

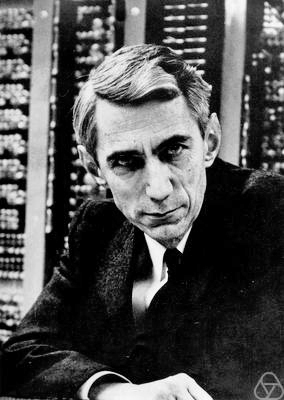

Bauby and Shannon


Figure: Claude Shannon developed information theory which allows us to quantify how much Bauby can communicate. This allows us to compare how locked in he is to us.

See Lawrence (2024) Shannon, Claude p. 10, 30, 61, 74, 98, 126, 134, 140, 143, 149, 260, 264, 269, 277, 315, 358, 363.

The story of Jean-Dominique Bauby illustrates a fundamental truth about human intelligence: it’s not just about processing information, but about the embodied experience of being human. This has profound implications for how we think about AI in banking.

Embodiment Factors

| | computer | human | Bauby |
| --- | --- | --- | --- |
| bits/min | billions | 2000 | 6 |
| billion calculations/s | ~100 | a billion | a billion |
| embodiment | 20 minutes | 5 billion years | 15 trillion years |

Figure: Embodiment factors are the ratio between our ability to compute and our ability to communicate. Jean Dominique Bauby suffered from locked-in syndrome. The embodiment factors show that relative to the machine we are also locked in. In the table we represent embodiment as the length of time it would take to communicate one second’s worth of computation. For computers it is a matter of minutes, but for a human, whether locked in or not, it is a matter of many millions of years.

Let me explain what I mean. Claude Shannon introduced a mathematical concept of information for the purposes of understanding telephone exchanges.

Information has many meanings, but mathematically, Shannon defined a bit of information to be the amount of information you get from tossing a coin.

If I toss a coin, and look at it, I know the answer. You don’t. But if I now tell you the answer I communicate to you 1 bit of information. Shannon defined this as the fundamental unit of information.

If I toss the coin twice, and tell you the result of both tosses, I give you two bits of information. Information is additive.

Shannon also estimated the average information associated with the English language. He estimated that the average information in any word is 12 bits, equivalent to twelve coin tosses.

So every two minutes Bauby was able to communicate 12 bits, or six bits per minute.

This is the information transfer rate he was limited to, the rate at which he could communicate.

Compare this to me, talking now. The average speaker for TEDx speaks around 160 words per minute. That’s 320 times faster than Bauby, or around 2000 bits per minute. 2000 coin tosses per minute.
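The arithmetic behind these rates can be checked with a minimal Python sketch. It assumes that every word carries Shannon's average of 12 bits, and it uses only the figures quoted in this talk; the constant and function names are illustrative.

```python
# Figures quoted in the talk; treat them as rough estimates.
BITS_PER_WORD = 12              # Shannon's estimate for an average English word
BAUBY_MINUTES_PER_WORD = 2      # Bauby: one word every two minutes
SPEAKER_WORDS_PER_MINUTE = 160  # typical TEDx speaking rate


def bits_per_minute(words_per_minute: float,
                    bits_per_word: float = BITS_PER_WORD) -> float:
    """Information rate, assuming each word carries the same average information."""
    return words_per_minute * bits_per_word


bauby_rate = bits_per_minute(1 / BAUBY_MINUTES_PER_WORD)
speaker_rate = bits_per_minute(SPEAKER_WORDS_PER_MINUTE)

print(f"Bauby:   {bauby_rate:.0f} bits/min")
print(f"Speaker: {speaker_rate:.0f} bits/min")
print(f"Ratio:   {speaker_rate / bauby_rate:.0f}x")
```

The speaker's rate comes out at 1920 bits per minute, which the talk rounds to 2000.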

But, just think how much thought Bauby was putting into every sentence. Imagine how carefully chosen each of his words was. Because he was communication constrained he could put more thought into each of his words. Into thinking about his audience.

So, his intelligence became locked in. He thinks as fast as any of us, but can communicate slower. Like the tree falling in the woods with no one there to hear it, his intelligence is embedded inside him.

Two thousand coin tosses per minute sounds pretty impressive, but this talk is not just about us, it’s about our computers, and the type of intelligence we are creating within them.

So how does two thousand compare to our digital companions? When computers talk to each other, they do so with billions of coin tosses per minute.

Let’s imagine for a moment, that instead of talking about communication of information, we are actually talking about money. Bauby would have 6 dollars. I would have 2000 dollars, and my computer has billions of dollars.

The internet has interconnected computers and equipped them with extremely high transfer rates.

However, by our very best estimates, computers actually think slower than us.

How can that be, you might ask? Computers calculate much faster than me. That’s true, but underlying your conscious thoughts there are a lot of calculations going on.

Each thought involves many thousands, millions or billions of calculations. How many exactly, we don’t know yet, because we don’t know how the brain turns calculations into thoughts.

Our best estimates suggest that to simulate your brain a computer would have to be as large as the UK Met Office machine here in Exeter. That’s a 250 million pound machine, the fastest in the UK. It can do 16 billion billion calculations per second.

It simulates the weather across the world every day. That’s how much power we think we need to simulate our brains.

So, in terms of our computational power we are extraordinary, but in terms of our ability to explain ourselves, just like Bauby, we are locked in.

For a typical computer, to communicate everything it computes in one second, it would only take it a couple of minutes. For us to do the same would take 15 billion years.

If intelligence is fundamentally about processing and sharing of information, this gives us a fundamental constraint on human intelligence that dictates its nature.

I call this ratio between the time it takes to compute something, and the time it takes to say it, the embodiment factor (Lawrence, 2017), because it reflects how embodied our cognition is.

If it takes you two minutes to say the thing you have thought in a second, then you are a computer. If it takes you 15 billion years, then you are a human.
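To make the embodiment factor concrete, here is a rough Python sketch. It rests on loud assumptions: one calculation is treated as one bit to be communicated, the brain is taken to run at the Met Office machine's 16 billion billion calculations per second, and the computer's "billions" of bits per minute is set to 5 billion purely for illustration. The names are hypothetical and the results are order-of-magnitude estimates only.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def embodiment_seconds(calculations_per_second: float,
                       bits_per_minute: float,
                       bits_per_calculation: float = 1.0) -> float:
    """Seconds needed to communicate one second's worth of computation.

    Assumes (for illustration only) that each calculation yields
    `bits_per_calculation` bits that would need to be communicated.
    """
    bits_computed = calculations_per_second * bits_per_calculation
    bits_per_second = bits_per_minute / 60
    return bits_computed / bits_per_second


# Brain estimate from the talk: the Met Office machine's 16 billion billion calculations/s.
BRAIN_CALCS_PER_SECOND = 16e18
# Computer: ~100 billion calculations/s; 5 billion bits/min is an assumed
# stand-in for the "billions" quoted in the talk.
COMPUTER_CALCS_PER_SECOND = 100e9
COMPUTER_BITS_PER_MINUTE = 5e9

human_years = embodiment_seconds(BRAIN_CALCS_PER_SECOND, 2000) / SECONDS_PER_YEAR
bauby_years = embodiment_seconds(BRAIN_CALCS_PER_SECOND, 6) / SECONDS_PER_YEAR
computer_minutes = embodiment_seconds(COMPUTER_CALCS_PER_SECOND,
                                      COMPUTER_BITS_PER_MINUTE) / 60

print(f"Computer: {computer_minutes:.0f} minutes")
print(f"Speaker:  {human_years:.2e} years")
print(f"Bauby:    {bauby_years:.2e} years")
```

Under these assumptions the speaker's figure lands at roughly 15 billion years and the computer's at about 20 minutes. The exact values shift with every assumed input, so the gap of many orders of magnitude is the point, not the individual numbers.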

These bandwidth differences explain why AI struggles with context and social understanding - the very domains where human bankers excel. The challenge for financial institutions is designing systems that leverage the strengths of both.

Bandwidth Constrained Conversations

Figure: Conversation relies on internal models of other individuals.

Figure: Misunderstanding of context and who we are talking to leads to arguments.

Embodiment factors imply that, in our communication between humans, what is not said is, perhaps, more important than what is said. To communicate with each other we need to have a model of who each of us is.

To aid this, in society, we are required to perform roles. Whether as a parent, a teacher, an employee or a boss. Each of these roles requires that we conform to certain standards of behaviour to facilitate communication between ourselves.

Control of self is vitally important to these communications.

The abundance of data available to humans undermines human-to-human communication channels by providing new routes to undermining our control of self.

The consequences of this mismatch between power and delivery are to be seen all around us. Because, just as driving an F1 car with bicycle wheels would be a fine art, so is the process of communication between humans.

If I have a thought and I wish to communicate it, I first need to have a model of what you think. I should think before I speak. When I speak, you may react. You have a model of who I am and what I was trying to say, and why I chose to say what I said. Now we begin this dance, where we are each trying to better understand each other and what we are saying. When it works, it is beautiful, but when mis-deployed, just like a badly driven F1 car, there is a horrible crash, an argument.

Figure: This is the drawing Dan was inspired to create for Chapter 1. It captures the fundamentally narcissistic nature of our (societal) obsession with our intelligence.

See blog post on Dan Andrews image of our reflective obsession with AI. See also (Vallor, 2024).

Computer Conversations

Figure: Conversation relies on internal models of other individuals.

Figure: Misunderstanding of context and who we are talking to leads to arguments.

Similarly, we find it difficult to comprehend how computers are making decisions. Because they do so with more data than we can possibly imagine.

In many respects, this is not a problem, it’s a good thing. Computers and us are good at different things. But when we interact with a computer, when it acts in a different way to us, we need to remember why.

Just as the first step to getting along with other humans is understanding other humans, so it needs to be with getting along with our computers.

Embodiment factors explain why, at the same time, computers are so impressive in simulating our weather, but so poor at predicting our moods. Our complexity is greater than that of our weather, and each of us is tuned to read and respond to one another.

Their intelligence is different. It is based on very large quantities of data that we cannot absorb. Our computers don’t have a complex internal model of who we are. They don’t understand the human condition. They are not tuned to respond to us as we are to each other.

Embodiment factors encapsulate a profound thing about the nature of humans. Our locked-in intelligence means that we are striving to communicate, so we put a lot of thought into what we’re communicating with. And if we’re communicating with something complex, we naturally anthropomorphize it.

We give our dogs, our cats, and our cars human motivations. We do the same with our computers. We anthropomorphize them. We assume that they have the same objectives as us and the same constraints. They don’t.

This means that when we worry about artificial intelligence, we worry about the wrong things. We fear computers that behave like more powerful versions of ourselves that will struggle to outcompete us.

In reality, the challenge is that our computers cannot be human enough. They cannot understand us with the depth we understand one another. They drop below our cognitive radar and operate outside our mental models.

The real danger is that computers don’t anthropomorphize. They’ll make decisions in isolation from us without our supervision because they can’t communicate truly and deeply with us.

The true potential of AI in banking isn’t in replacing humans but in creating complementary systems that enhance human capabilities. Moving beyond the ‘faster horse’ mindset requires understanding what makes human intelligence uniquely valuable in financial services.

The Information Revolution in Banking

New Flow of Information

Classically the field of statistics focused on mediating the relationship between the machine and the human. Our limited bandwidth of communication means we tend to over-interpret the limited information that we are given; in the extreme we assign motives and desires to inanimate objects (a process known as anthropomorphizing). Much of mathematical statistics was developed to help temper this tendency and understand when we are justified in drawing conclusions from data.

Figure: The trinity of human, data, and computer highlights a modern phenomenon. The communication channel between computer and data now has an extremely high bandwidth. The channels between human and computer and between data and human are narrow. There is a new direction of information flow: information is reaching us mediated by the computer. The focus of classical statistics reflected the importance of the direct communication between human and data. The modern challenges of data science emerge when that relationship is mediated by the machine.

Data science brings new challenges. In particular, there is a very large bandwidth connection between the machine and data. This means that our relationship with data is now commonly being mediated by the machine, whether in the acquisition of new data, which now happens by happenstance rather than with purpose, or in the interpretation of that data, where we are increasingly relying on machines to summarize what the data contains. This is leading to the emerging field of data science, which must not only deal with the same challenges that mathematical statistics faced in tempering our tendency to over-interpret data but must also deal with the possibility that the machine has either inadvertently or maliciously misrepresented the underlying data.

In an AI-augmented banking organization, human attention becomes the most precious resource. The strategic allocation of this attention will determine organizational success. This is particularly critical in banking where complex decisions require both algorithmic precision and human judgment.

Networked Interactions

Our modern society intertwines the machine with human interactions. The key question is who has control over these interfaces between humans and machines.

Figure: Humans and computers interacting should be a major focus of our research and engineering efforts.

So the real challenge that we face for society is understanding which systemic interventions will encourage the right interactions between the humans and the machine at all of these interfaces.

The API Mandate

The API Mandate was a memo issued by Jeff Bezos in 2002. Internet folklore has the memo making five statements:

- All teams will henceforth expose their data and functionality through service interfaces.

- Teams must communicate with each other through these interfaces.

- There will be no other form of inter-process communication allowed: no direct linking, no direct reads of another team’s data store, no shared-memory model, no back-doors whatsoever. The only communication allowed is via service interface calls over the network.

- It doesn’t matter what technology they use.

- All service interfaces, without exception, must be designed from the ground up to be externalizable. That is to say, the team must plan and design to be able to expose the interface to developers in the outside world. No exceptions.

The mandate marked a shift in the way Amazon viewed software, moving to a model that dominates the way software is built today, so-called “Software-as-a-Service.”
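The spirit of the mandate can be illustrated with a small Python sketch. Everything here is hypothetical: the `InventoryService` interface, the team names and the data are invented for illustration, and the call is made in-process, whereas the mandate requires calls over the network. The point is only that the consuming team's code depends on the published interface and never reads the owning team's data store directly.

```python
from dataclasses import dataclass, field
from typing import Protocol


class InventoryService(Protocol):
    """Hypothetical service interface: the only route to inventory data."""

    def stock_level(self, sku: str) -> int: ...


@dataclass
class InventoryTeamService:
    """Owned by one team; the data store is private to that team."""

    _store: dict[str, int] = field(default_factory=dict)

    def stock_level(self, sku: str) -> int:
        # Unknown items are reported as out of stock.
        return self._store.get(sku, 0)


def can_fulfil(inventory: InventoryService, sku: str, quantity: int) -> bool:
    """Code owned by another team: it uses the interface, never the store."""
    return inventory.stock_level(sku) >= quantity


service = InventoryTeamService({"widget": 3})
print(can_fulfil(service, "widget", 2))
```

Because `can_fulfil` only knows about the interface, the owning team is free to change how it stores its data, which is the property the mandate's fourth statement ("It doesn’t matter what technology they use") relies on.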

Any organization that designs a system (defined broadly) will produce a design whose structure is a copy of the organization’s communication structure.

Conway (n.d.)

This observation, known as Conway’s law, is cited in the classic software engineering text, The Mythical Man-Month (Brooks, n.d.).

As a result, and in awareness of Conway’s law, the implementation of this mandate also had a dramatic effect on Amazon’s organizational structure.

Because the design that occurs first is almost never the best possible, the prevailing system concept may need to change. Therefore, flexibility of organization is important to effective design.

Conway (n.d.)

Amazon is set up around the notion of the “two pizza team”: teams of 6-10 people that can theoretically be fed by two (American) pizzas. This structure is tightly interconnected with the software. Each of these teams owns one of these “services.” Amazon is strict about the team that develops the service owning the service in production. This approach is the secret to their scale as a company, and the approach has been adopted by many other large tech companies. The software-as-a-service approach changed the information infrastructure of the company, the routes through which information is shared. This had a knock-on effect on the corporate culture.

Amazon works through an approach I think of as “devolved autonomy.” The culture of the company is widely taught (e.g. Customer Obsession, Ownership, Frugality), a team’s inputs and outputs are strictly defined, but within those parameters, teams have a great deal of autonomy in how they operate. The information infrastructure was devolved, so the autonomy was devolved. The different parts of Amazon are then bound together through shared corporate culture.

The way decisions are made in organizations is fundamentally changing. In banking, this means rethinking how we balance centralized control with devolved authority, especially in areas like risk assessment and customer service.

Information Topography: How AI Reshapes Banking Decision Making

An Attention Economy

I don’t know what the future holds, but there are three things that (in the longer term) I think we can expect to be true.

- Human attention will always be a “scarce resource” (See Simon, 1971)

- Humans will never stop being interested in other humans.

- Organisations will keep trying to “capture” the attention economy.

Over the next few years our social structures will be significantly disrupted, and during periods of volatility it’s difficult to predict what will be financially successful. But in the longer term the scarce resource in the economy will be the “capital” of human attention. Even if all traditionally “productive jobs” such as manufacturing were automated, and sustainable energy problems were resolved, human attention would still be the bottleneck in the economy. See Simon (1971).

Beyond that, humans will not stop being interested in other humans. Sport is a nice example of this: we are as interested in the human stories of athletes as in their achievements (as a series of Netflix productions evidences: Quarterback, Receiver, Drive to Survive, The Last Dance, etc.). The “creator economy” on YouTube is another. While we might prefer a future where the labour in such an economy is distributed, such that we all individually can participate in the creation as well as the consumption, my final thought is that there are significant forces to centralise this so that the many consume from the few, and companies will be financially incentivised to capture this emerging attention economy. For more on the attention economy see Tim O’Reilly’s talk here: https://www.mctd.ac.uk/watch-ai-and-the-attention-economy-tim-oreilly/.

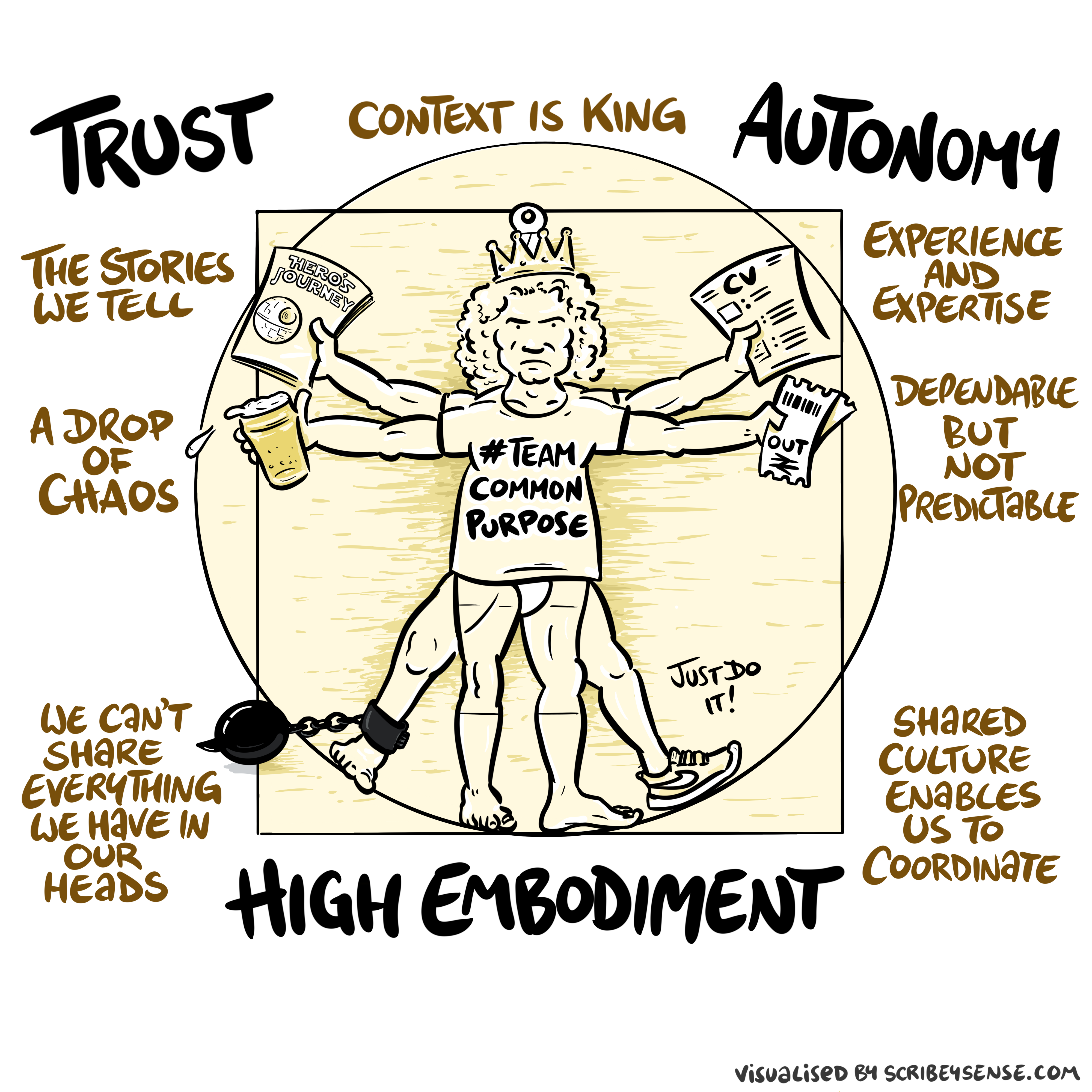

Figure: The relationships between trust, autonomy and embodiment are key to understanding how to properly deploy AI systems in a way that avoids digital autocracy. (Illustration by Dan Andrews inspired by Chapter 3 “Intent” of “The Atomic Human” Lawrence (2024))

This illustration was created by Dan Andrews after reading Chapter 3 “Intent” of “The Atomic Human” book. The chapter explores the concept of intent in AI systems and how trust, autonomy, and embodiment interact to shape our relationship with technology. Dan’s drawing captures these complex relationships and the balance needed for responsible AI deployment.

See blog post on Dan Andrews image from Chapter 3.


AI fundamentally changes the information topography of banking organizations. Understanding this new landscape is crucial for strategic decision-making.

Balancing Centralized Control with Devolved Authority

Question Mark Emails

Figure: Jeff Bezos sends employees at Amazon question mark emails. They require an explanation. The explanation required is different at different levels of the management hierarchy. See this article.

One challenge at Amazon was what I call the “L4 to Q4 problem.” The issue arises when a graduate engineer (Level 4 in Amazon terminology) makes a change to the code base that has a detrimental effect but we only discover it when the 4th Quarter results are released (Q4).

The challenge in explaining what went wrong is a challenge in intellectual debt.

Executive Sponsorship

Another lever that can be deployed is that of executive sponsorship. My feeling is that organisational change is most likely if the executive is seen to be behind it. This feeds the corporate culture. While it may be a necessary condition, or at least it is helpful, it is not a sufficient condition. It does not solve the challenge of the institutional antibodies that will obstruct long term change. Here by executive sponsorship I mean that of the CEO of the organisation. That might be equivalent to the Prime Minister or the Cabinet Secretary.

A key part of this executive sponsorship is to develop understanding in the executive of how data driven decision making can help, while also helping senior leadership understand what the pitfalls of this decision making are.

Pathfinder Projects

I do exec education courses for the Judge Business School. One of my main recommendations there is that a project is developed that directly involves the CEO, the CFO and the CIO (or CDO, CTO … whichever the appropriate role is) and operates on some aspect of critical importance for the business.

The inclusion of the CFO is critical for two purposes. Firstly, financial data is one of the few sources of data that tends to be of high quality and availability in any organisation. This is because it is one of the few forms of data that is regularly audited. This means that such a project will have a good chance of success. Secondly, if the CFO is bought in to these technologies, and capable of understanding their strengths and weaknesses, then that will facilitate the funding of future projects.

In the DELVE data report (The DELVE Initiative, 2020), we translated this recommendation into that of “pathfinder projects”: projects that cut across departments and involve Treasury. I appreciate that the nuances of the relationship between Treasury and No 10 do not map precisely onto that of CEO and CFO in a normal business. However, the importance of cross-cutting exemplar projects that have the close attention of the executive remains.

In banking, this balance is particularly critical. You need centralized oversight for compliance and risk management, but you also need devolved decision-making for customer service and innovation. AI can help achieve both, but only if properly designed.

Maintaining Human Judgment in Critical Banking Decisions

The Horizon Scandal

In the UK we saw these effects play out in the Horizon scandal: the accounting system of the national postal service was computerized by Fujitsu and first installed in 1999, but neither the Post Office nor Fujitsu was able to control the system they had deployed. When it went wrong, individual sub-postmasters were blamed for the system’s errors. Over the next two decades they were prosecuted and jailed, leaving lives ruined in the wake of the machine’s mistakes.

See Lawrence (2024) Horizon scandal p. 371.

The Horizon scandal dramatically demonstrates what happens when human judgment is subordinated to algorithmic outputs. In banking, where decisions can have profound consequences for customers and the economy, maintaining human judgment is not optional - it’s essential.

Banking is fundamentally built on trust - trust between customers and the bank, trust in the financial system, trust in the accuracy of information and decisions. AI systems fundamentally challenge how trust functions in banking organizations.

Complexity in Action

As an exercise in understanding complexity, watch the following video. You will see the basketball being bounced around, and the players moving. Your job is to count the passes of those dressed in white and ignore those of the individuals dressed in black.

Figure: Daniel Simons’s famous illusion “monkey business.” Focus on the movement of the ball distracts the viewer from seeing other aspects of the image.

In a classic study Simons and Chabris (1999) ask subjects to count the number of passes of the basketball between players on the team wearing white shirts. Fifty percent of the time, these subjects don’t notice the gorilla moving across the scene.

The phenomenon of inattentional blindness is well known, e.g. in their paper Simons and Chabris quote the Hungarian neurologist, Rezsö Bálint,

It is a well-known phenomenon that we do not notice anything happening in our surroundings while being absorbed in the inspection of something; focusing our attention on a certain object may happen to such an extent that we cannot perceive other objects placed in the peripheral parts of our visual field, although the light rays they emit arrive completely at the visual sphere of the cerebral cortex.

Rezsö Bálint 1907 (translated in Husain and Stein 1988, page 91)

When we combine the complexity of the world with our relatively low bandwidth for information, problems can arise. Our focus on what we perceive to be the most important problem can cause us to miss other (potentially vital) contextual information.

This phenomenon is known as selective attention or ‘inattentional blindness.’

Figure: For a longer talk on inattentional bias from Daniel Simons see this video.

Techno-Inattention Bias in Banking

The Danger for Banking

The selective attention phenomenon has a direct parallel in how banking organizations approach AI and digital transformation. Senior executives are increasingly asked to focus on complex technical details of AI systems and digital technology.

In this process of technological fascination, they miss the metaphorical gorilla walking through their business – the fundamental human and organizational dynamics that actually determine success in banking. The gorilla represents customer relationships, regulatory compliance, ethical considerations, and trust - elements that technical systems can never fully replace.

Human Attention as the Scarce Resource in Banking

The Attention Economy

Herbert Simon on Information

What information consumes is rather obvious: it consumes the attention of its recipients. Hence a wealth of information creates a poverty of attention …

Simon (1971)

The attention economy was a phenomenon described in 1971 by the American computer scientist Herbert Simon. He saw the coming information revolution and wrote that a wealth of information would create a poverty of attention. Too much information means that human attention becomes the scarce resource, the bottleneck. It becomes the gold in the attention economy.

The power associated with control of information dates back to the invention of writing. By pressing reeds into clay tablets Sumerian scribes stored information and controlled the flow of information.

In an AI-augmented banking organization, human attention becomes the most precious resource. The strategic allocation of this attention will determine organizational success. This is particularly critical in banking where complex decisions require both algorithmic precision and human judgment.

Emulsion: Combining Human and Machine Intelligence in Banking

Banking organizations are like an emulsion mixing oil and water. The oil could be replaced by the machine, but the water, the vital life-giving human component, cannot be easily separated. Successful banking organizations need to develop structures that combine human and machine intelligence in stable, productive ways.

This means reversing the power dynamics and ensuring the organization is in touch with its business differentiators, because in the long run those differentiators are unlikely to include AI - they’ll include the human elements that AI cannot replicate.

Attention Reinvestment Cycle

Figure: The attention flywheel focusses on reinvesting human capital.

While the traditional productivity flywheel focuses on reinvesting financial capital, the attention flywheel focuses on reinvesting human capital - our most precious resource in an AI-augmented world. This requires deliberately creating systems that capture the value of freed attention and channel it toward human-centered activities that machines cannot replicate.

Generative AI as Human-Analogue Machines (HAMs)

Human-Analogue Machines (HAMs) as Banking Tools

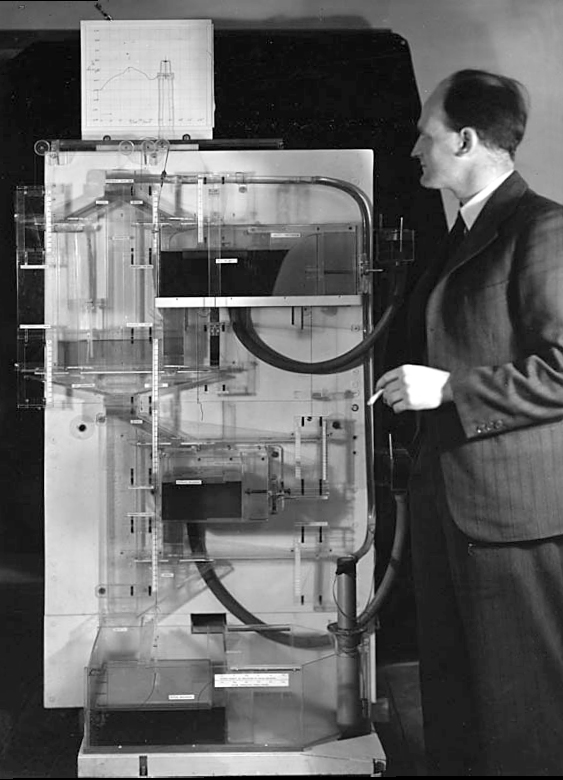

The MONIAC

The MONIAC was an analogue computer designed to simulate the UK economy. Analogue computers work through analogy, and the analogy in the MONIAC is that both money and water flow. The MONIAC exploits this through a system of tanks, pipes, valves and floats that represent the flow of money through the UK economy. Water flowed from the treasury tank at the top of the model to other tanks representing government spending, such as health and education. The machine was initially designed for teaching support but was also found to be a useful economic simulator. Several were built. Today you can see the original at Leeds Business School, and there is also one in the London Science Museum and one in the University of Cambridge’s economics faculty.

Figure: Bill Phillips and his MONIAC (completed in 1949). The machine is an analogue computer designed to simulate the workings of the UK economy.

See Lawrence (2024) MONIAC p. 232-233, 266, 343.

Human Analogue Machine

Recent breakthroughs in generative models, particularly large language models, have enabled machines that, for the first time, can converse plausibly with other humans.

The Apollo guidance computer provided Armstrong with an analogy when he landed the lunar module on the Moon. He controlled the craft through a stick, and that stick provided the analogy. The analogy is based on the experience that Amelia Earhart had when she flew her plane. Armstrong’s control exploited his experience as a test pilot flying planes that had control columns which were directly connected to their control surfaces.

Figure: The human analogue machine is the new interface that large language models have enabled the human to present. It has the capabilities of the computer in terms of communication, but it appears to present a “human face” to the user in terms of its ability to communicate on our terms. (Image quite obviously not drawn by generative AI!)

The generative systems we have produced do not provide us with the “AI” of science fiction, because their intelligence is based on emulating human knowledge. Through being forced to reproduce our literature and our art, they have developed aspects which are analogous to the cultural proxy truths we use to describe our world.

These machines are to humans what the MONIAC was to the British economy. Not a replacement, but an analogue computer that captures some aspects of humanity while providing the advantages of the machine’s high bandwidth.

HAM

The Human-Analogue Machine or HAM therefore provides a route through which we could better understand our world through improving the way we interact with machines.

Figure: The trinity of human, data, and computer highlights the modern phenomenon. The communication channel between computer and data now has an extremely high bandwidth. The channels between human and computer and between data and human are narrow. There is a new direction of information flow: information is reaching us mediated by the computer. The focus on classical statistics reflected the importance of the direct communication between human and data. The modern challenges of data science emerge when that relationship is being mediated by the machine.

The HAM can provide an interface between the digital computer and the human, allowing humans to work closely with computers regardless of their understanding of the more technical parts of software engineering.

Figure: The HAM now sits between us and the traditional digital computer.

Of course, this route also opens new avenues for manipulation, new ways in which the machine can undermine our autonomy or exploit our cognitive foibles. The major challenge we face is steering between these worlds so that we gain the advantage of the computer’s bandwidth without undermining our culture and individual autonomy.

See Lawrence (2024) human-analogue machine (HAMs) p. 343-347, 359-359, 365-368.

Generative AI provides banking organizations with what I call “Human-Analogue Machines” - systems that can process and generate information at scales far beyond human capacity, but that still require human oversight and judgment for critical decisions.

The challenge for banking executives is that we know our current approaches to AI implementation are likely wrong, but we don’t yet know exactly how they’re wrong. This requires a different approach than the traditional “Marconi approach” of building the technology first and figuring out the applications later.

The Uncertainty Principle of Human Capital Quantification

Inflation of Human Capital

This transformation creates efficiency. But it also devalues the skills that form the backbone of human capital and create a happy, healthy society. Had the alchemists ever discovered the philosopher’s stone, using it would have triggered mass inflation and devalued any reserves of gold. Similarly, our reserve of precious human capital is vulnerable to automation and devaluation in the artificial intelligence revolution. The skills we have learned, whether manual or mental, risk becoming redundant in the face of the machine.

The more we try to precisely quantify human contribution in banking, the more we risk changing the nature of that contribution. This creates fundamental challenges for performance management in AI-augmented banking organizations.

In an age where algorithms become commoditized, organizational culture becomes the primary competitive advantage. This is particularly true in banking where trust, relationships, and ethical behavior are central to success.

The Business Imperative: People First, Not AI First

AI cannot replace the atomic human

Figure: Opinion piece in the FT that describes the idea of a social flywheel to drive the targeted growth we need in AI innovation.

The organizations that will succeed in the AI age will not be those that most aggressively automate, but those that most thoughtfully integrate human and machine intelligence to create systems greater than the sum of their parts.

Superficial Automation

The rise of AI has enabled automation of many surface-level tasks - what we might call “superficial automation.” These are tasks that appear complex but primarily involve reformatting or restructuring existing information, such as converting bullet points into prose, summarizing documents, or generating routine emails.

While such automation can increase immediate productivity, it risks missing the deeper value of these seemingly mundane tasks. For example, the process of composing an email isn’t just about converting thoughts into text - it’s about:

- Reflection time to properly consider the message

- Building relationships through personal communication

- Developing and refining ideas through the act of writing

- Creating institutional memory through thoughtful documentation

- Projecting corporate culture

When we automate these superficial aspects, we can create what appears to be a more efficient process, but one that gradually loses meaning without human involvement. It’s like having a meeting transcription without anyone actually attending the meeting - the words are there, but the value isn’t.

Consider email composition: An AI can convert bullet points into a polished email instantly, but this bypasses the valuable thinking time that comes with composition. The human “pause” in communication isn’t inefficiency - it’s often where the real value lies.

This points to a broader challenge with AI automation: the need to distinguish between tasks that are merely complex (and can be automated) and those that are genuinely complicated (requiring human judgment and involvement). Effective deployment of AI requires understanding this distinction and preserving the human elements that give business processes their true value.

The risk is creating what appears to be a more efficient system but is actually a hollow process - one that moves faster but creates less real value. True digital transformation isn’t about removing humans from the loop, but about augmenting human capabilities while preserving the essential human elements that give work its meaning and effectiveness.

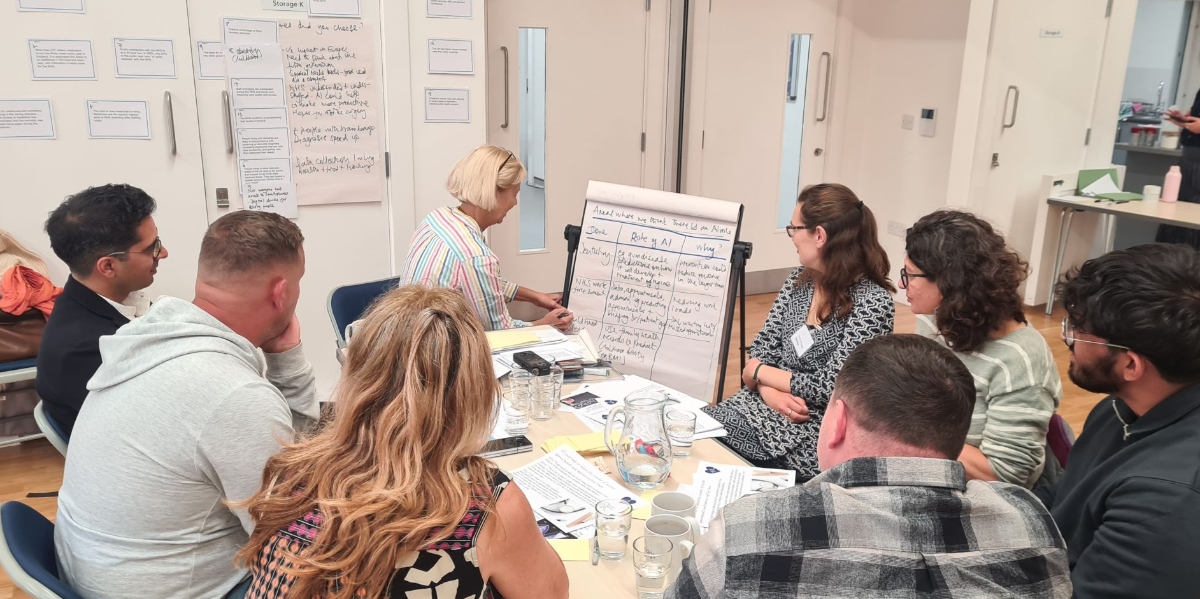

Figure: Public dialogue held in Liverpool alongside the 2024 Labour Party Conference. The process of discussion is as important as the material discussed. In line with previous dialogues attendees urged us to develop technology where AI operates as a tool for human augmentation, not replacement.

In our public dialogues we saw the same theme: good process can drive purpose. Discussion is as important as the conclusions reached. Attendees urged us to develop technology where AI operates as a tool for human augmentation, not replacement.

In banking, superficial automation that doesn’t address the underlying human needs can be particularly dangerous. We need systems that enhance human capabilities rather than simply replacing human tasks.

Developing Digital Literacy at Board Level

Banking executives must lead in developing digital literacy at the board level to ensure governance structures can effectively oversee AI implementation while maintaining appropriate human oversight.

The Future of Banking: Architecting Human-Machine Collaboration

Figure: This is the drawing Dan was inspired to create for Chapter 11. It captures the core idea of our tendency to devolve complex decisions to what we perceive as greater authorities, but which are in reality ill-equipped to deliver a human response.

See blog post on Playing in People’s Backyards.

In the past when decisions became too difficult, we invoked higher powers in the forms of gods, and “trial by ordeal.” Today we face a similar challenge with AI. When a decision becomes difficult there is a danger that we hand it to the machine, but it is precisely these difficult decisions that need to contain a human element.

The future of banking in the AI age is not about choosing between humans and machines. It’s about creating systems that leverage the best of both. The organizations that will thrive will be those that understand that human attention, judgment, and relationships remain the most valuable assets in banking, even as AI becomes ubiquitous.

The Atomic Human

Thanks!

For more information on these subjects and more you might want to check the following resources.

- book: The Atomic Human

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com