The AI Paradigm Shift: Machine Learning, Automated Decision Making and Modern Society

Abstract

The term Artificial Intelligence means different things to different people, but we can distil some commonality across different expectations of the term. It seems that the word intelligence drives us to believe that this new approach to automation will be the first to adapt to us as humans rather than requiring us to adapt to it. This promise presents challenges, because machine learning technologies that underpin the revolution in artificial intelligence are not capable of adapting to humans as we are to each other. In this talk we introduce the challenges and overview the research directions we are taking to uncover the solutions.

AI in Three Apocryphal Quotes

Henry Ford’s Faster Horse

Figure: A 1925 Ford Model T built at Henry Ford’s Highland Park Plant in Dearborn, Michigan. This example now resides in Australia, owned by the founder of FordModelT.net. From https://commons.wikimedia.org/wiki/File:1925_Ford_Model_T_touring.jpg

It’s said that Henry Ford’s customers wanted “a faster horse.” If Henry Ford were selling us artificial intelligence today, what would the customer call for, “a smarter human?” That’s certainly the picture of machine intelligence we find in science fiction narratives, but the reality of what we’ve developed is much more mundane.

Car engines produce prodigious power from petrol. Machine intelligences deliver decisions derived from data. In both cases the scale of consumption enables a speed of operation that is far beyond the capabilities of their natural counterparts. Unfettered energy consumption has consequences in the form of climate change. Does unbridled data consumption also have consequences for us?

If we devolve decision making to machines, we depend on those machines to accommodate our needs. If we don’t understand how those machines operate, we lose control over our destiny. Our mistake has been to see machine intelligence as a reflection of our intelligence. We cannot understand the smarter human without understanding the human. To understand the machine, we need to better understand ourselves.

The Diving Bell and the Butterfly

Figure: The Diving Bell and the Butterfly is the autobiography of Jean Dominique Bauby.

The Diving Bell and the Butterfly is the autobiography of Jean Dominique Bauby. Jean Dominique was the editor of the French Elle magazine. In 1995, at the age of 43, he suffered a major stroke. The stroke paralyzed him and rendered him speechless. He was only able to blink his left eyelid; he had become a sufferer of locked-in syndrome.

Human Communication

For human conversation to work, we require an internal model of who we are speaking to. We model each other, and combine our sense of who they are, who they think we are, and what has been said. This is our approach to dealing with the limited bandwidth connection we have: empathy and understanding of intent. Mental dispositional concepts are used to augment our limited communication bandwidth.

Fritz Heider argued that the important point about conversations is that they are happenings that are “psychologically represented in each of the participants” (his emphasis) (Heider, 1958).

Bandwidth Constrained Conversations

Figure: Conversation relies on internal models of other individuals.

Figure: Misunderstanding of context and who we are talking to leads to arguments.

Embodiment factors imply that, in our communication between humans, what is not said is, perhaps, more important than what is said. To communicate with each other we need to have a model of who each of us is.

To aid this, in society, we are required to perform roles. Whether as a parent, a teacher, an employee or a boss. Each of these roles requires that we conform to certain standards of behaviour to facilitate communication between ourselves.

Control of self is vitally important to these communications.

The large quantity of data available to humans undermines human-to-human communication channels by providing new routes to undermining our control of self.

A Six Word Novel

Figure: Consider the six word novel, apocryphally credited to Ernest Hemingway, “For sale: baby shoes, never worn.” To understand what that means to a human, you need a great deal of additional context. Context that is not directly accessible to a machine that has not got both the evolved and contextual understanding of our own condition to realize both the implication of the advert and what that implication means emotionally to the previous owner.

But this is a very different kind of intelligence than ours. A computer cannot understand the depth of Ernest Hemingway’s apocryphal six word novel, “For sale: baby shoes, never worn,” because it isn’t equipped with the ability to model the complexity of humanity that underlies that statement.

Computer Conversations

Figure: Conversation relies on internal models of other individuals.

Figure: Misunderstanding of context and who we are talking to leads to arguments.

Similarly, we find it difficult to comprehend how computers are making decisions, because they do so with more data than we can possibly imagine.

In many respects, this is not a problem; it’s a good thing. Computers and humans are good at different things. But when we interact with a computer, and it acts in a different way to us, we need to remember why.

Just as the first step to getting along with other humans is understanding other humans, so it needs to be with getting along with our computers.

Embodiment factors explain why, at the same time, computers are so impressive in simulating our weather, but so poor at predicting our moods. Our complexity is greater than that of our weather, and each of us is tuned to read and respond to one another.

Their intelligence is different. It is based on very large quantities of data that we cannot absorb. Our computers don’t have a complex internal model of who we are. They don’t understand the human condition. They are not tuned to respond to us as we are to each other.

Embodiment factors encapsulate a profound thing about the nature of humans. Our locked-in intelligence means that we are striving to communicate, so we put a lot of thought into what we’re communicating with. And if we’re communicating with something complex, we naturally anthropomorphize it.

We give our dogs, our cats and our cars human motivations. We do the same with our computers. We anthropomorphize them. We assume that they have the same objectives as us and the same constraints. They don’t.

This means that when we worry about artificial intelligence, we worry about the wrong things. We fear computers that behave like more powerful versions of ourselves that will struggle to outcompete us.

In reality, the challenge is that our computers cannot be human enough. They cannot understand us with the depth we understand one another. They drop below our cognitive radar and operate outside our mental models.

The real danger is that computers don’t anthropomorphize. They’ll make decisions in isolation from us without our supervision, because they can’t communicate truly and deeply with us.

Lies and Damned Lies

There are three types of lies: lies, damned lies and statistics

Benjamin Disraeli 1804-1881

Benjamin Disraeli said that there are three types of lies: lies, damned lies and statistics. Disraeli died in 1881, 30 years before the first academic department of applied statistics was founded at UCL. If Disraeli were alive today, it is likely that he’d rephrase his quote:

There are three types of lies: lies, damned lies and big data.

Why? Because the challenges of understanding and interpreting big data today are similar to those that Disraeli faced in governing an empire through statistics in the latter part of the 19th century.

The quote “lies, damned lies and statistics” was credited to Benjamin Disraeli by Mark Twain in his autobiography. It characterizes the idea that statistics can be made to prove anything. But Disraeli died in 1881 and Mark Twain died in 1910. The important breakthrough in overcoming our tendency to over-interpret data came with the formalization of the field through the development of mathematical statistics.

Data has an elusive quality: it promises so much but can deliver little, and it can mislead and misrepresent. To harness it, it must be tamed. In Disraeli’s time, during the second half of the 19th century, numbers and data were being accumulated and the social sciences were being developed. There was large-scale collection of data for the purposes of government.

The modern ‘big data era’ is on the verge of delivering the same sense of frustration that Disraeli experienced. The early promise of big data as a panacea is evolving into demands for delivery. For me, personally, peak-hype coincided with an email I received inviting collaboration on a project to deploy “Big Data and Internet of Things in an Industry 4.0 environment.” Further questioning revealed that the actual project was optimization of the efficiency of a manufacturing production line, a far more tangible and realizable goal.

The antidote to this verbiage is found in increasing awareness. When dealing with data the first trap to avoid is the games of buzzword bingo that we are wont to play. The first goal is to quantify what challenges can be addressed and what techniques are required. Behind the hype, fundamentals are changing. The phenomenon is about the increasing access we have to data: the manner in which customer information is recorded and processes are codified and digitized with little overhead. Internet of things is about the increasing number of cheap sensors that can be easily interconnected through our modern network structures. But businesses are about making money, and these phenomena need to be recast in those terms before their value can be realized.

Mathematical Statistics

Karl Pearson (1857-1936), Ronald Fisher (1890-1962) and others considered the question of what conclusions can truly be drawn from data. Their mathematical studies act as a restraint on our tendency to over-interpret and see patterns where there are none. They introduced concepts such as randomized controlled trials that form a mainstay of our decision making today, from government, to clinicians, to the large scale A/B testing that determines the nature of the web interfaces we interact with on social media and shopping.

Figure: Karl Pearson (1857-1936), one of the founders of Mathematical Statistics.
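One way to see how this restraint works in an A/B test is a permutation test: if the two variants really were equivalent, shuffling the group labels should produce differences as large as the observed one quite often. The following is a minimal sketch, not a description of any particular production system. The click data, the function name `permutation_p_value` and its parameters are all hypothetical and chosen only for illustration.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=2000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = abs(sum(group_a) / n_a - sum(group_b) / len(group_b))

    pooled = list(group_a) + list(group_b)
    n_b = len(pooled) - n_a
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Shuffling destroys any real link between label and outcome.
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            at_least_as_extreme += 1

    # Add-one correction so the estimate is never exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical A/B data: 1 = visitor clicked, 0 = visitor did not.
variant_a = [1] * 12 + [0] * 88  # 12 clicks from 100 visitors
variant_b = [1] * 15 + [0] * 85  # 15 clicks from 100 visitors
p_value = permutation_p_value(variant_a, variant_b)
```

A large `p_value` here says that a three percentage point gap is well within what chance alone produces at this sample size, which is exactly the kind of pattern we are tempted to over-interpret.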

Their movement did the most to put statistics to rights, to eradicate the ‘damned lies.’ It was known as ‘mathematical statistics’. Today I believe we should look to the emerging field of data science to provide the same role. Data science is an amalgam of statistics, data mining, computer systems, databases, computation, machine learning and artificial intelligence. Spread across these fields are the tools we need to realize data’s potential. For many businesses this might be thought of as the challenge of ‘converting bits into atoms.’ Bits: the data stored on computer, atoms: the physical manifestation of what we do; the transfer of goods, the delivery of service. From fungible to tangible. When solving a challenge through data there are a series of obstacles that need to be addressed.

Firstly, data awareness: what data you have and where it’s stored. Sometimes this includes changing your conception of what data is and how it can be obtained. From automated production lines to apps on employee smart phones. Often data is locked away: manual log books, confidential data, personal data. For increasing awareness an internal audit can help. The website data.gov.uk hosts data made available by the UK government. To create this website the government’s departments went through an audit of what data they each hold and what data they could make available. Similarly, within private businesses this type of audit could be useful for understanding their internal digital landscape: after all the key to any successful campaign is a good map.

Secondly, availability. How well are the data sources interconnected? How well curated are they? The curse of Disraeli was associated with unreliable data and unreliable statistics. The misrepresentations this leads to are worse than the absence of data as they give a false sense of confidence to decision making. Understanding how to avoid these pitfalls involves an improved sense of data and its value, one that needs to permeate the organization.

The final challenge is analysis, the accumulation of the necessary expertise to digest what the data tells us. Data requires interpretation, and interpretation requires experience. Analysis is a bottleneck due to a skill shortage, a shortage made more acute by the fact that, ideally, analysis should be carried out by individuals not only skilled in data science but also equipped with the domain knowledge to understand the implications in a given application, and to see opportunities for improvements in efficiency.

‘Mathematical Data Science’

As a term ‘big data’ promises much and delivers little. To get true value from data, it needs to be curated and evaluated. The three stages of awareness, availability and analysis provide a broad framework through which organizations should be assessing the potential in the data they hold. Hand waving about big data solutions will not do; it will only lead to self-deception. The castles we build on our data landscapes must be based on firm foundations, process and scientific analysis. If we do things right, those are the foundations that will be provided by the new field of data science.

Today the statement “There are three types of lies: lies, damned lies and ‘big data’” may be more apt. We are revisiting many of the mistakes made in interpreting data from the 19th century. Big data is laid down by happenstance, rather than actively collected with a particular question in mind. That means it needs to be treated with care when conclusions are being drawn. For data science to succeed it needs the same form of rigour that Pearson and Fisher brought to statistics: a “mathematical data science” is needed.
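The difference between data laid down by happenstance and data collected with a question in mind can be illustrated with a small simulation. This is only a sketch under stated assumptions: the population, the quantity being measured and the rule that more active people are more likely to be logged are all invented for the example, as is the function name `compare_collection_schemes`.

```python
import random


def compare_collection_schemes(seed=0, population_size=100_000, sample_size=2_000):
    """Estimate a population mean from a designed and a happenstance sample."""
    rng = random.Random(seed)

    # Hypothetical population: minutes of daily activity for each person.
    population = [rng.gauss(30.0, 10.0) for _ in range(population_size)]
    true_mean = sum(population) / len(population)

    # Designed collection: a uniform random sample drawn to answer the question.
    designed = rng.sample(population, sample_size)
    designed_mean = sum(designed) / len(designed)

    # Happenstance collection (assumed mechanism): a person is only logged
    # with probability proportional to how active they are.
    happenstance = [
        x for x in population
        if rng.random() < min(1.0, max(0.0, x / 100.0))
    ]
    happenstance_mean = sum(happenstance) / len(happenstance)

    return true_mean, designed_mean, happenstance_mean


true_mean, designed_mean, happenstance_mean = compare_collection_schemes()
```

In this sketch the happenstance data set is far larger than the designed sample, yet its mean overstates the true value because of how the data came to be recorded. More data does not remove that bias, which is why such data needs to be treated with care.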

You can also check my blog post on Lies, Damned Lies and Big Data.

Evolved Relationship with Information

The high bandwidth of computers has resulted in a close relationship between the computer and data. Large amounts of information can flow between the two. The degree to which the computer is mediating our relationship with data means that we should consider it an intermediary.

Originally our low bandwidth relationship with data was affected by two characteristics. Firstly, our tendency to over-interpret, driven by our need to extract as much knowledge from our low bandwidth information channel as possible. Secondly, by our improved understanding of the domain of mathematical statistics and how our cognitive biases can mislead us.

With this new set up there is a potential for assimilating far more information via the computer, but the computer can present this to us in various ways. If its motives are not aligned with ours then it can misrepresent the information. This needn’t be nefarious; it can simply be a result of the computer pursuing a different objective from us. For example, if the computer is aiming to maximize our interaction time, that may be a different objective from ours, which may be to summarize information in a representative manner in the shortest possible length of time.

For example, for me, it was a common experience to pick up my telephone with the intention of checking when my next appointment was, but to soon find myself distracted by another application on the phone, and end up reading something on the internet. By the time I’d finished reading, I would often have forgotten the reason I picked up my phone in the first place.
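The mismatch of objectives described above can be made concrete with a toy ranking. This is a sketch only: the items, the `relevance` and `expected_minutes` scores and the `rank` helper are hypothetical and do not describe how any real phone or application orders its content.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    relevance: float         # how well the item serves what the user came for (0 to 1)
    expected_minutes: float  # predicted time the user will spend if shown the item


# Hypothetical content competing for the top of the screen.
items = [
    Item("Next appointment: 14:00", relevance=0.95, expected_minutes=0.2),
    Item("Unread messages", relevance=0.40, expected_minutes=4.0),
    Item("Trending news story", relevance=0.10, expected_minutes=12.0),
]


def rank(candidates, objective):
    """Order candidates from best to worst under the given objective."""
    return sorted(candidates, key=objective, reverse=True)


# The user's objective: get the relevant information quickly.
by_user = rank(items, lambda item: item.relevance)

# The machine's objective in this sketch: maximize interaction time.
by_machine = rank(items, lambda item: item.expected_minutes)
```

Neither ordering requires any malice. The same data is simply presented differently because the objective being optimized is different.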

There are great benefits to be had from the huge amount of information we can unlock from this evolved relationship between us and data. In biology, large scale data sharing has been driven by a revolution in genomic, transcriptomic and epigenomic measurement. The improved inferences that can be drawn through summarizing data by computer have fundamentally changed the nature of biological science. Now this phenomenon is also influencing us in our daily lives, as data measured by happenstance is increasingly used to characterize us.

Better mediation of this flow actually requires a better understanding of human-computer interaction. This in turn involves understanding our own intelligence better, what its cognitive biases are and how these might mislead us.

For further thoughts see Guardian article on marketing in the internet era from 2015.

You can also check my blog post on System Zero, also from 2015.

New Flow of Information

Classically the field of statistics focussed on mediating the relationship between data and the human. Our limited bandwidth of communication means we tend to over-interpret the limited information that we are given. In the extreme we assign motives and desires to inanimate objects (a process known as anthropomorphizing). Much of mathematical statistics was developed to help temper this tendency and understand when we are valid in drawing conclusions from data.

Figure: The trinity of human, data and computer, highlighting the modern phenomenon. The communication channel between computer and data now has an extremely high bandwidth. The channel between human and computer and the channel between data and human are narrow. There is a new direction of information flow: information is reaching us mediated by the computer. The focus of classical statistics reflected the importance of the direct communication between human and data. The modern challenges of data science emerge when that relationship is being mediated by the machine.

Data science brings new challenges. In particular, there is a very large bandwidth connection between the machine and data. This means that our relationship with data is now commonly being mediated by the machine, whether in the acquisition of new data, which now happens by happenstance rather than with purpose, or in the interpretation of that data, where we are increasingly relying on machines to summarise what the data contains. This is leading to the emerging field of data science, which must not only deal with the same challenges that mathematical statistics faced in tempering our tendency to over-interpret data, but must also deal with the possibility that the machine has either inadvertently or maliciously misrepresented the underlying data.

Reflexive and Reflective Intelligence

Another distinction I find helpful when thinking about intelligence is the difference between reflexive actions and reflective actions. We are much more aware of our reflections, but most actions we take are reflexive. And this can lead to an underestimate of the importance of our reflexive actions.

\[\text{reflect} \Longleftrightarrow \text{reflex}\]

It is our reflective capabilities that distinguish us from so many lower forms of intelligence. And it is also in reflective thinking that we can contextualise and justify our actions.

Reflective actions require longer timescales to deploy. Often, when we are in the moment, it is the reflexive thinking that takes over. Naturally our biases about the world can enter in either our reflective or reflexive thinking, but biases associated with reflexive thinking are likely to be those we are unaware of.

This interaction between reflexive and reflective, where our reflective-self can place us within a wider cultural context, would seem key to better human decision making. If the reflexive-self can learn from the reflective-self to make better decisions, or if we have mechanisms of doubt that allow our reflective-self to intervene when our reflexive-decisions have consequences, then our reflexive thinking can be “lifted” to better reflect the results of our actions.

\[\text{reflect} \Longleftrightarrow \text{reflex}\]

The Great AI Fallacy

There is a lot of variation in the use of the term artificial intelligence. I’m sometimes asked to define it, but depending on whether you’re speaking to a member of the public, a fellow machine learning researcher, or someone from the business community, the sense of the term differs.

However, underlying its use I’ve detected one disturbing trend. A trend I’m beginning to think of as “The Great AI Fallacy.”

The fallacy is associated with an implicit promise that is embedded in many statements about Artificial Intelligence. Artificial Intelligence, as it currently exists, is merely a form of automated decision making. The implicit promise of Artificial Intelligence is that it will be the first wave of automation where the machine adapts to the human, rather than the human adapting to the machine.

How else can we explain the suspension of sensible business judgment that is accompanying the hype surrounding AI?

This fallacy is particularly pernicious because there are serious benefits to society in deploying this new wave of data-driven automated decision making. But the AI Fallacy is causing us to suspend the calibrated skepticism that is needed to deploy these systems safely and efficiently.

The problem is compounded because many of the techniques that we’re speaking of were originally developed in academic laboratories in isolation from real-world deployment.

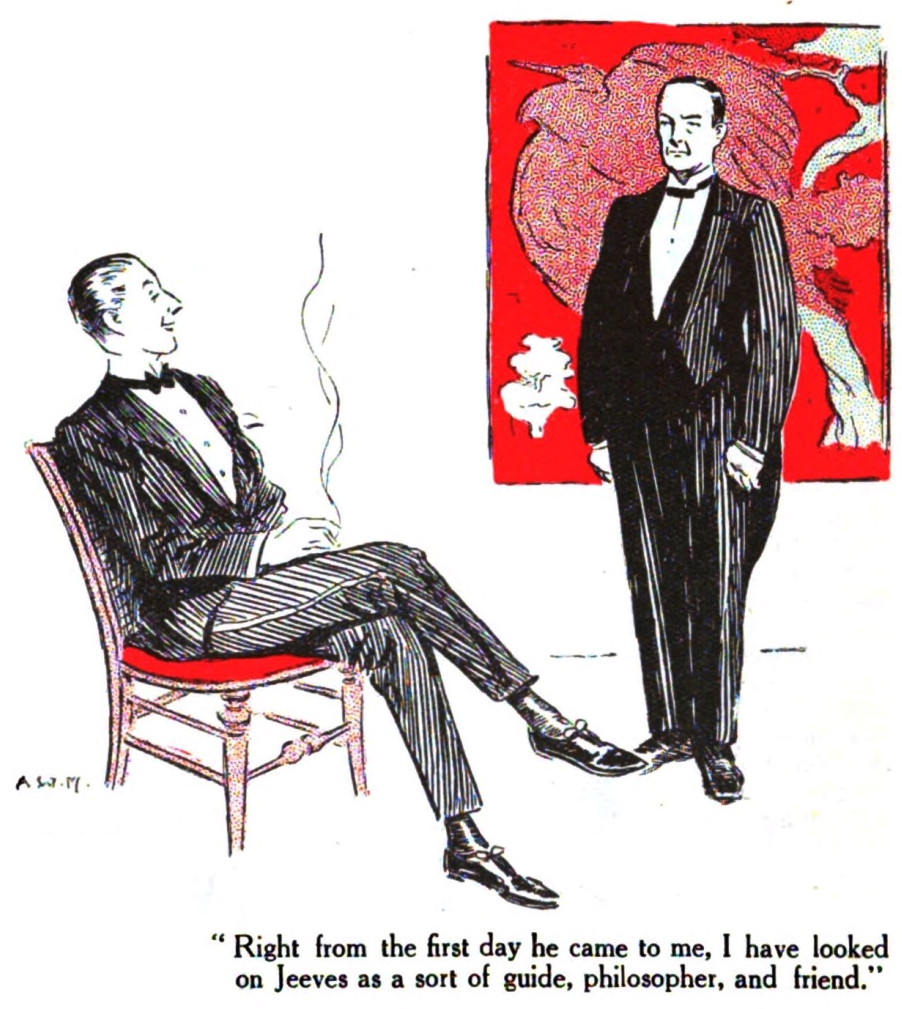

Figure: We seem to have fallen for a perspective on AI that suggests it will adapt to our schedule, rather in the manner of a 1930s manservant.

In large part, these challenges associated with AI are because AI has no understanding of the human condition. But there’s also a problem that we don’t have an intuitive understanding of AI and how it is working.

The marvellous resolution does not apply to machine driven decisions, because we don’t have an intuitive understanding of what motivates the machine.

The consequence is that the AI, driven by its detailed knowledge of who we are, arising from its access to large quantities of our data, can undermine the delicate balance of our decision-making, and replace our objectives with its own simplistic ideas of how things should be.

Figure: Address challenges in the way that complex software systems involving machine learning components are constructed to deal with the challenge of Intellectual Debt.

You can find our strategic research agenda here: https://mlatcl.github.io/papers/autoai-sra.pdf.

Challenge

It used to be true that computers only did what we programmed them to do, but today AI systems are learning from our data. This introduces new problems in how these systems respond to their environment.

We need to better monitor how data is influencing decision making and take corrective action as required.

Aim

Our aim is to scale our ability to deploy safe and reliable AI solutions. Our technical approach is to do this through data-oriented software engineering practices and deep system emulation. We will do this through a significant extension of the notion of Automated ML (AutoML) to Automated AI (AutoAI). This relies on a shift from Bayesian Optimisation to Bayesian System Optimisation. The project will develop a toolkit for automating the deployment, maintenance and monitoring of artificial intelligence systems.

Turing AI Fellowship

From December 2019 I began a Senior AI Fellowship at the Turing Institute funded by the Office for AI to investigate the consequences of deploying complex AI systems.

The notion relates to the “Promise of AI”: it promises to be the first generation of automation technology that will adapt to us, rather than us adapting to it. The premise of the project is that this promise will remain unfulfilled with current approaches to systems design and deployment.

A second intervention is dealing with the complexity of the software systems that underpin modern AI solutions. Even if two individuals, say African masters students, who are technically capable and have an interesting idea, deploy their idea, one challenge they face is the operational load in maintaining and explaining their software systems. The challenge of maintaining is known as technical debt (Sculley et al., 2015); the problem of explaining is known as intellectual debt.

The AutoAI project, sponsored by an ATI Senior AI Fellowship, addresses this challenge.

Data Trusts: Empower People through their Data

Figure: The Data Trusts Initiative (http://datatrusts.uk) hosts blog posts helping build understanding of data trusts and supports research and pilot projects.

The third intervention goes direct to the source of the machine’s power. What we are seeing is an emergent digital oligarchy based on the power that comes with aggregation of data. Data Trusts are a form of data intermediary designed to return the power associated with this data accumulation to the originators of the data, that is us.

Personal Data Trusts

The machine learning solutions we are dependent on to drive automated decision making are dependent on data. But with regard to personal data there are important issues of privacy. Data sharing brings benefits, but also exposes our digital selves. From the use of social media data for targeted advertising to influence us, to the use of genetic data to identify criminals, or natural family members. Control of our virtual selves maps on to control of our actual selves.

The feudal system that is implied by current data protection legislation has significant power asymmetries at its heart, in that the data controller has a duty of care over the data subject, but the data subject may only discover failings in that duty of care when it’s too late. Data controllers also may have conflicting motivations, and often their primary motivation is not towards the data-subject; rather, the data-subject is just one consideration in their wider agenda.

Personal Data Trusts (Delacroix and Lawrence, 2018; Edwards, 2004; Lawrence, 2016) are a potential solution to this problem. They are inspired by land societies that formed in the 19th century to bring democratic representation to the growing middle classes. A land society was a mutual organisation where resources were pooled for the common good.

A Personal Data Trust would be a legal entity where the trustees’ responsibility was entirely to the members of the trust. So the motivation of the data-controllers is aligned only with the data-subjects. How data is handled would be subject to the terms under which the trust was convened. The success of an individual trust would be contingent on it satisfying its members with appropriate balancing of individual privacy with the benefits of data sharing.

Formation of Data Trusts became the number one recommendation of the Hall-Pesenti report on AI, but unfortunately, the term was confounded with more general approaches to data sharing that don’t necessarily involve fiduciary responsibilities or personal data rights. It seems clear that we need to better characterise the data sharing landscape as well as propose mechanisms for tackling specific issues in data sharing.

It feels important to have a diversity of approaches, and yet it feels important that any individual trust would be large enough to be taken seriously in representing the views of its members in wider negotiations.

Figure: Data Trusts were the first recommendation of the Hall-Pesenti Report. Unfortunately, since then the role of data trusts vs other data sharing mechanisms in the UK has been somewhat confused.

See Guardian articles on Digital Oligarchies and Information Feudalism.

Data Trusts Initiative

The Data Trusts Initiative, funded by the Patrick J. McGovern Foundation, is supporting three pilot projects that consider how bottom-up empowerment can redress the imbalance associated with the digital oligarchy.

Figure: The Data Trusts Initiative (http://datatrusts.uk) hosts blog posts helping build understanding of data trusts and supports research and pilot projects.

Progress So Far

In its first 18 months of operation, the Initiative has:

Convened over 200 leading data ethics researchers and practitioners;

Funded 7 new research projects tackling knowledge gaps in data trust theory and practice;

Supported 3 real-world data trust pilot projects establishing new data stewardship mechanisms.

Finally, we are working across the University to empower the diversity of expertise and capability we have to focus on these broad societal problems. We recently launched AI@Cam with a vision document that outlines these challenges for the University.

Thanks!

For more information on these subjects and more you might want to check the following resources.

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com

References