The Atomic Human

Abstract

In a space dedicated to Marconi’s legacy of communication, we find ourselves at a time when machines don’t only transmit messages: they summarise, recommend, predict, and decide.

In this talk we frame AI as information infrastructure. We connect today’s digital world with the deeper question from The Atomic Human: what remains uniquely human when machines can mimic so much of what we do?

To make good decisions we need to clarify the real opportunities and challenges that sit between security and creativity: trust, autonomy, accountability, and the role of cultural institutions and art in how we adopt and deploy these powerful tools.

Henry Ford’s Faster Horse

Figure: A 1925 Ford Model T built at Henry Ford’s Highland Park Plant in Dearborn, Michigan. This example now resides in Australia, owned by the founder of FordModelT.net. From https://commons.wikimedia.org/wiki/File:1925_Ford_Model_T_touring.jpg

It’s said that Henry Ford’s customers wanted “a faster horse.” If Henry Ford were selling us artificial intelligence today, what would the customer call for, “a smarter human?” That’s certainly the picture of machine intelligence we find in science fiction narratives, but the reality of what we’ve developed is much more mundane.

Car engines produce prodigious power from petrol. Machine intelligences deliver decisions derived from data. In both cases the scale of consumption enables a speed of operation that is far beyond the capabilities of their natural counterparts. Unfettered energy consumption has consequences in the form of climate change. Does unbridled data consumption also have consequences for us?

If we devolve decision making to machines, we depend on those machines to accommodate our needs. If we don’t understand how those machines operate, we lose control over our destiny. Our mistake has been to see machine intelligence as a reflection of our intelligence. We cannot understand the smarter human without understanding the human. To understand the machine, we need to better understand ourselves.

The Atomic Human

Figure: The Atomic Eye. By slicing away aspects of the human that we used to believe to be unique to us, but are now the preserve of the machine, we learn something about what it means to be human.

The development of what some are calling intelligence in machines raises questions about what machine intelligence means for our intelligence. The idea of the atomic human is derived from Democritus’s atomism.

In the fifth century BCE the Greek philosopher Democritus posed a question about our physical universe. He imagined cutting physical matter into pieces in a repeated process: cutting a piece, then taking one of the cut pieces and cutting it again so that each time it becomes smaller and smaller. Democritus believed this process had to stop somewhere, that we would be left with an indivisible piece. The Greek word for indivisible is atom, and so this theory was called atomism.

The Atomic Human considers the same question, but in a different domain, asking: As the machine slices away portions of human capabilities, are we left with a kernel of humanity, an indivisible piece that can no longer be divided into parts? Or does the human disappear altogether? If we are left with something, then that uncuttable piece, a form of atomic human, would tell us something about our human spirit.

See Lawrence (2024) atomic human, the p. 13.

Embodiment Factors: Walking vs Light Speed

Imagine human communication as moving at walking pace. The average person speaks about 160 words per minute, which is roughly 2000 bits per minute. If we compare this to walking speed, roughly 1 m/s, we can think of this as the speed at which our thoughts can be shared with others.

Compare this to machines. When computers communicate, their bandwidth is 600 billion bits per minute: three hundred million times faster than humans, a factor of \(3 \times 10^{8}\). In twenty minutes we could be a kilometer down the road, whereas the computer can go to the Sun and back again.

This difference is not just about speed of communication, but about embodiment. Our intelligence is locked in by our biology: our brains may process information rapidly, but our ability to share those thoughts is limited to the slow pace of speech or writing. Machines, in comparison, seem able to communicate their computations almost instantaneously, anywhere.

So, the embodiment factor is the ratio between the time it takes to think a thought and the time it takes to communicate it. For us, it’s like walking; for machines, it’s like moving at light speed. This difference means that most direct comparisons between human and machine need to be carefully made. Because for humans it’s not the size of our communication bandwidth that counts, but how we overcome that limitation.
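The walking-versus-light-speed comparison can be checked with a few lines of arithmetic. The sketch below uses the two bandwidth figures quoted above; the speed of light and the Earth-Sun distance are standard values brought in for illustration and are assumptions, not numbers from the talk. The function and constant names are hypothetical.

```python
# Bandwidth figures quoted in the text (rounded, illustrative).
HUMAN_BITS_PER_MIN = 2_000        # about 160 spoken words per minute
MACHINE_BITS_PER_MIN = 600e9      # 600 billion bits per minute

# Assumed values for the analogy (standard figures, not from the talk).
WALKING_SPEED_M_PER_S = 1.0
LIGHT_SPEED_M_PER_S = 299_792_458.0
EARTH_SUN_DISTANCE_M = 1.496e11   # mean distance, one astronomical unit


def bandwidth_ratio() -> float:
    """How many times faster the machine channel is than human speech."""
    return MACHINE_BITS_PER_MIN / HUMAN_BITS_PER_MIN


def distance_travelled(speed_m_per_s: float, minutes: float) -> float:
    """Distance in metres covered at a constant speed."""
    return speed_m_per_s * minutes * 60


ratio = bandwidth_ratio()
walked = distance_travelled(WALKING_SPEED_M_PER_S, 20)
light = distance_travelled(LIGHT_SPEED_M_PER_S, 20)
sun_round_trip = 2 * EARTH_SUN_DISTANCE_M

print(f"bandwidth ratio: {ratio:.1e}")
print(f"walked in 20 minutes: {walked / 1000:.1f} km")
print(f"light covers a Sun round trip in 20 minutes: {light > sun_round_trip}")
```

Under these assumptions the ratio of light speed to walking speed is also close to \(3 \times 10^{8}\), which is why the analogy lines up so neatly with the bandwidth ratio.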

Figure: Conversation relies on internal models of other individuals.

Figure: Misunderstanding of context and who we are talking to leads to arguments.

Embodiment factors imply that, in our communication between humans, what is not said is, perhaps, more important than what is said. To communicate with each other we need to have a model of who each of us is.

To aid this, in society, we are required to perform roles. Whether as a parent, a teacher, an employee or a boss. Each of these roles requires that we conform to certain standards of behaviour to facilitate communication between ourselves.

Control of self is vitally important to these communications.

The consequences of this mismatch between power and delivery are to be seen all around us. Because, just as driving an F1 car with bicycle wheels would be a fine art, so is the process of communication between humans.

If I have a thought and I wish to communicate it, I first need to have a model of what you think. I should think before I speak. When I speak, you may react. You have a model of who I am and what I was trying to say, and why I chose to say what I said. Now we begin this dance, where we are each trying to better understand each other and what we are saying. When it works, it is beautiful, but when mis-deployed, just like a badly driven F1 car, there is a horrible crash, an argument.

From Marconi to AI

In Bologna, it’s hard not to feel the long arc from Marconi’s breakthrough to today’s AI moment. Wireless did something profound: it separated communication from place.

AI is doing something similarly profound: it separates decision-making from place, and sometimes from the human institutions that normally hold responsibility. It can feel like “intelligence at a distance.”

That’s why the right frame isn’t magic or sentience, but governance: when do we delegate, when do we supervise, and how do we keep accountability when the system is complex?

Security and Creativity

On the one hand, we have security: not only cyber-security, but epistemic security, how we know what is true when our information is mediated by machines.

On the other hand, we have emulated creativity. Generative models can produce convincing images and text. But real creativity is also intention, context, responsibility, and lived experience. That is the atomic human part, the part that doesn’t decompose into patterns alone.

Digital twins are an example of the overlap: they are extraordinary creative/engineering achievements, but they also move authority into models, so the key question is how we keep humans in the loop, and on the hook.

New Flow of Information

Classically the field of statistics focused on mediating the relationship between the machine and the human. Our limited bandwidth of communication means we tend to over-interpret the limited information that we are given; in the extreme we assign motives and desires to inanimate objects (a process known as anthropomorphizing). Much of mathematical statistics was developed to help temper this tendency and understand when we are valid in drawing conclusions from data.

Figure: The trinity of human, data, and computer, highlighting the modern phenomenon. The communication channel between computer and data now has an extremely high bandwidth. The channels between human and computer and between data and human are narrow. There is a new direction of information flow: information is reaching us mediated by the computer. The focus of classical statistics reflected the importance of the direct communication between human and data. The modern challenges of data science emerge when that relationship is mediated by the machine.

Data science brings new challenges. In particular, there is a very large bandwidth connection between the machine and data. This means that our relationship with data is now commonly being mediated by the machine, whether in the acquisition of new data, which now happens by happenstance rather than with purpose, or in the interpretation of that data, where we are increasingly relying on machines to summarize what the data contains. This is leading to the emerging field of data science, which must not only deal with the same challenges that mathematical statistics faced in tempering our tendency to over-interpret data but must also deal with the possibility that the machine has either inadvertently or maliciously misrepresented the underlying data.

See Lawrence (2024) topography, information p. 34-9, 43-8, 57, 62, 104, 115-16, 127, 140, 192, 196, 199, 291, 334, 354-5. See Lawrence (2024) anthropomorphization (‘anthrox’) p. 30-31, 90-91, 93-4, 100, 132, 148, 153, 163, 216-17, 239, 276, 326, 342.

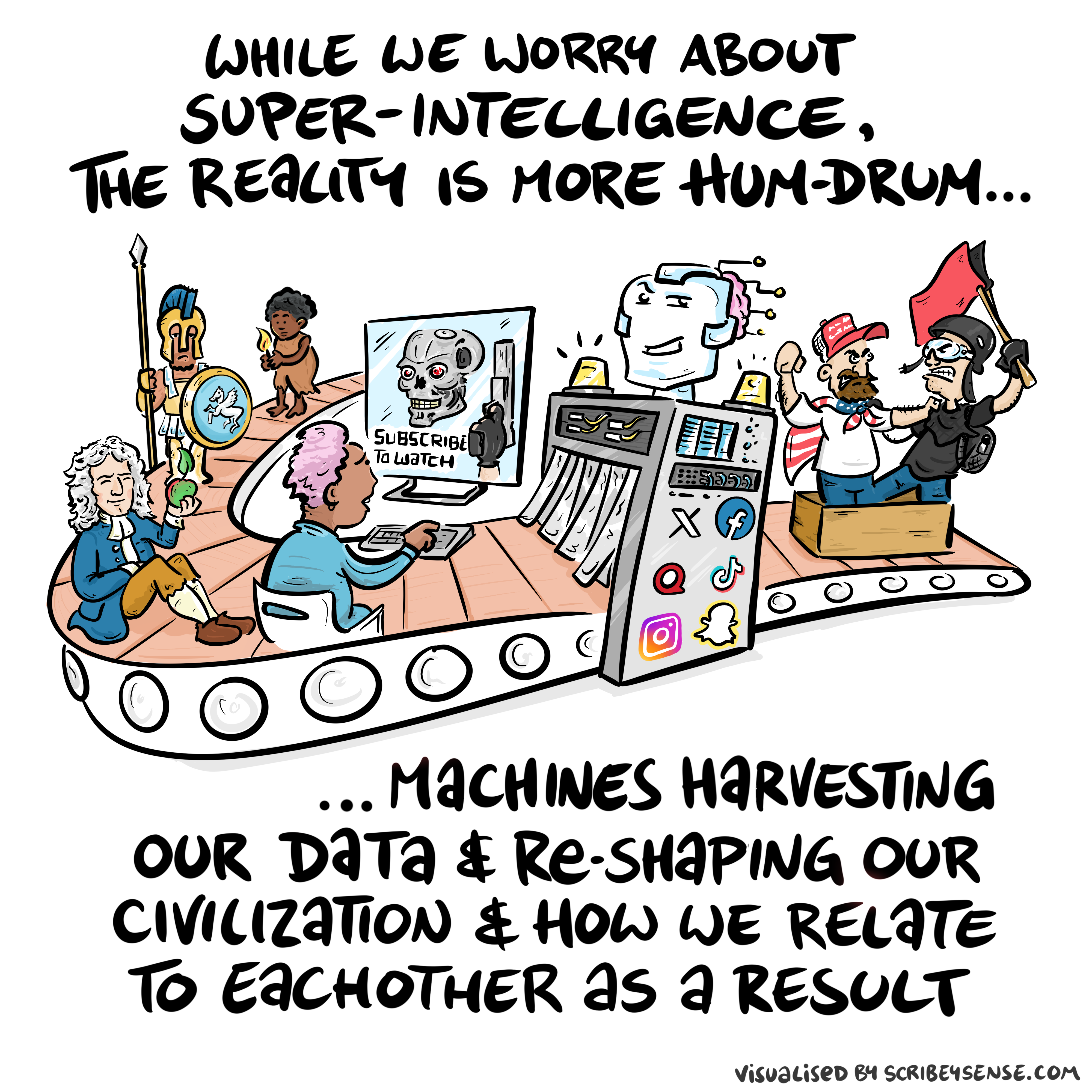

Figure: The nature of digital communication has changed dramatically, with machines processing vast amounts of human-generated data and feeding it back to us in ways that can distort our information landscape. (Illustration by Dan Andrews inspired by Chapter 5 “Enlightenment” of “The Atomic Human” Lawrence (2024))

This illustration was created by Dan Andrews after reading Chapter 5 “Enlightenment” of “The Atomic Human” book. The chapter examines how machine learning models like GPTs ingest vast amounts of human-created data and then reflect modified versions back to us. The drawing celebrates the stochastic parrots paper by Bender et al. (2021) while also capturing how this feedback loop of information can distort our digital discourse.

See blog post on Two Types of Stochastic Parrots.

The stochastic parrots paper (Bender et al., 2021) was the moment that the research community, through a group of brave researchers, some of whom paid with their jobs, raised the first warnings about these technologies. Despite their bravery, at least in the UK, their voices and those of many other female researchers were erased from the public debate around AI.

Their voices were replaced by a different type of stochastic parrot, a group of “fleshy GPTs” that speak confidently and eloquently but have little experience of real life and make arguments that, for those with deeper knowledge, are flawed in naive and obvious ways.

The story is a depressing reflection of a similar pattern that undermined the UK computer industry (Hicks, 2018).

We all have a tendency to fall into the trap of becoming fleshy GPTs, and the best way to prevent that happening is to gather diverse voices around ourselves and take their perspectives seriously even when we might instinctively disagree.

Sunday Times article “Our lives may be enhanced by AI, but Big Tech just sees dollar signs”

Times article “Don’t expect AI to just fix everything, professor warns”

HAM

The Human-Analogue Machine or HAM therefore provides a route through which we could better understand our world through improving the way we interact with machines.

The HAM can provide an interface between the digital computer and the human, allowing humans to work closely with computers regardless of their understanding of the more technical parts of software engineering.

Figure: The HAM now sits between us and the traditional digital computer.

Of course this route provides new routes for manipulation, new ways in which the machine can undermine our autonomy or exploit our cognitive foibles. The major challenge we face is steering between these worlds where we gain the advantage of the computer’s bandwidth without undermining our culture and individual autonomy.

See Lawrence (2024) human-analogue machine (HAMs) p. 343-347, 359-359, 365-368.

The Sorcerer’s Apprentice

See this blog post on The Open Society and its AI.

Figure: A young sorcerer learns his master’s spells, and deploys them to perform his chores, but can’t control the result.

In Goethe’s poem The Sorcerer’s Apprentice, a young sorcerer learns one of his master’s spells and deploys it to assist in his chores. Unfortunately, he cannot control it. The poem was popularised by Paul Dukas’s musical composition, and in 1940 Disney used the composition in the film Fantasia. Mickey Mouse plays the role of the hapless apprentice who deploys the spell but cannot control the results.

When it comes to our software systems, the same thing is happening. The Harvard Law professor Jonathan Zittrain calls the phenomenon intellectual debt. In intellectual debt, like the sorcerer’s apprentice, a software system is created but it cannot be explained or controlled by its creator. The phenomenon comes from the difficulty of building and maintaining large software systems: the complexity of the whole is too much for any individual to understand, so it is decomposed into parts. Each part is constructed by a smaller team. The approach is known as separation of concerns, but it has the unfortunate side effect that no individual understands how the whole system works. When this goes wrong, the effects can be devastating. We saw this in the recent Horizon scandal, where neither the Post Office nor Fujitsu was able to control the accounting system they had deployed, and we saw it when Facebook’s systems were manipulated to spread misinformation in the 2016 US election.

In 2019 Mark Zuckerberg wrote an op-ed in the Washington Post calling for regulation of social media. He was repeating the realisation of Goethe’s apprentice: he had released a technology he couldn’t control. In Goethe’s poem, the master returns, “Besen, besen! Seid’s gewesen” he calls, and order is restored, but back in the real world the role of the master is played by Popper’s open society. Unfortunately, those institutions have been undermined by the very spell that these modern apprentices have cast. The book, the letter, the ledger, each of these has been supplanted in our modern information infrastructure by the computer. The modern scribes are software engineers, and their guilds are the big tech companies. Facebook’s motto was to “move fast and break things.” Their software engineers have done precisely that, and the apprentice has robbed the master of his powers. This is a desperate situation, and it’s getting worse. The latest to reprise the apprentice’s role are Sam Altman and OpenAI, who dream of “general intelligence” solutions to societal problems which OpenAI will develop, deploy, and control. Popper worried about the threat of totalitarianism to our open societies; today’s threat is a form of information totalitarianism which emerges from the way these companies undermine our institutions.

So, what to do? If we value the open society, we must expose these modern apprentices to scrutiny. Open development processes are critical here: Fujitsu would never have got away with their claims of system robustness for Horizon if the software they were using was open source. We also need to re-empower the professions, equipping them with the resources they need to have a critical understanding of these technologies. That involves redesigning the interface between these systems and humans so that it empowers civil administrators to query how the systems are functioning. This is a mammoth task. But recent technological developments, such as code generation from large language models, offer a route to delivery.

The open society is characterised by institutions that collaborate with each other in the pragmatic pursuit of solutions to social problems. The large tech companies that have thrived because of the open society are now putting that ecosystem in peril. For the open society to survive it needs to embrace open development practices that enable Popper’s piecemeal social engineers to come back together and chant “Besen, besen! Seid’s gewesen” before it is too late for the master to step in and deal with the mess the apprentice has made.

See Lawrence (2024) sorcerer’s apprentice p. 371-374.

Figure: When we delegate to automation, we can lose the ability to intervene, explain, or reverse outcomes. (Illustration by Dan Andrews, inspired by Chapter 8 “System Zero” of The Atomic Human.)

The Sorcerer’s Apprentice is a story about delegation without controllability: a tool takes over a task, but the human can’t stop it cleanly.

“System Zero” is the modern version: we build systems that operate at machine speed, across many interfaces, and we gradually lose the practical capacity to audit, pause, or override them. The risk isn’t “AI becomes conscious”; it’s that the system becomes unaccountable infrastructure.

Culture

Cicero suggested that philosophy cultivates the mind. This notion of culture is vital for how we communicate. Because we have so little bandwidth, we rely on shared conceptions of the world to communicate complex subjects.

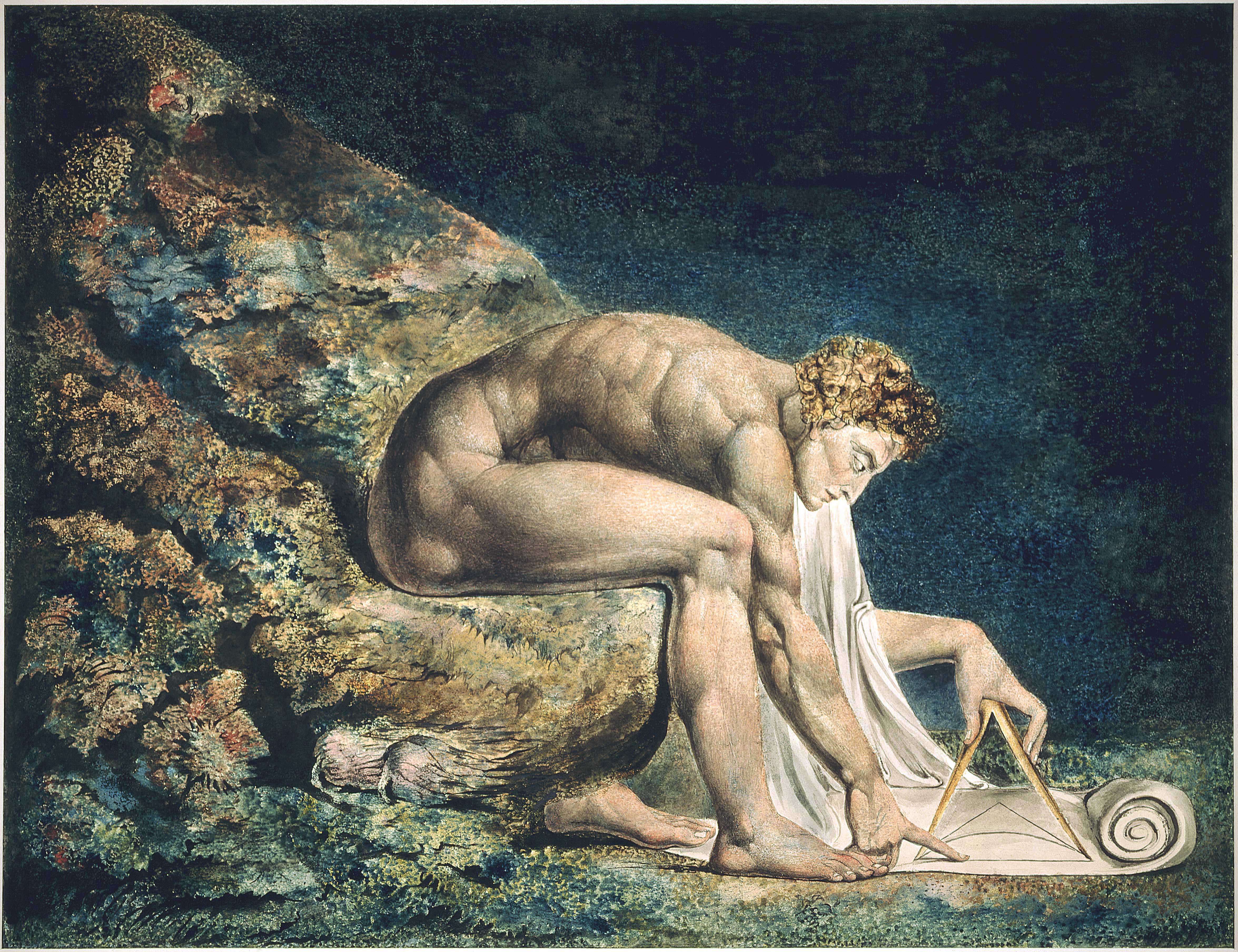

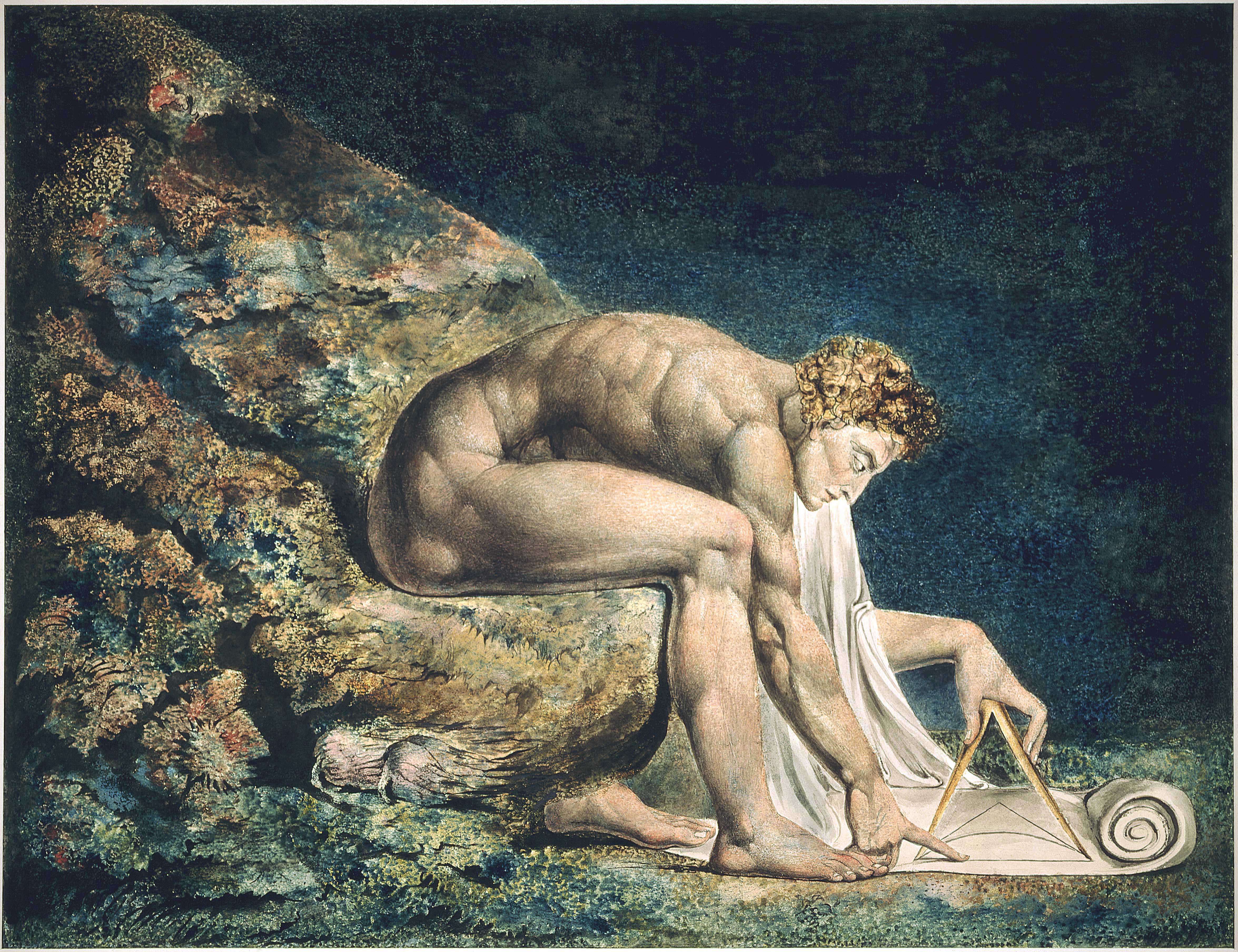

Blake’s Newton

William Blake’s rendering of Newton captures humans in a particular state. He is absorbed, trance-like, in simple geometric shapes. The feel of dreams is enhanced by the underwater location, and the nature of the focus is enhanced because he ignores the complexity of the sea life around him.

Figure: William Blake’s Newton, 1795-c. 1805.

See Lawrence (2024) Blake, William Newton p. 121–123.

The caption in the Tate Britain reads:

Here, Blake satirises the 17th-century mathematician Isaac Newton. Portrayed as a muscular youth, Newton seems to be underwater, sitting on a rock covered with colourful coral and lichen. He crouches over a diagram, measuring it with a compass. Blake believed that Newton’s scientific approach to the world was too reductive. Here he implies Newton is so fixated on his calculations that he is blind to the world around him. This is one of only 12 large colour prints Blake made. He seems to have used an experimental hybrid of printing, drawing, and painting.

From the Tate Britain

See Lawrence (2024) Blake, William Newton p. 121–123, 258, 260, 283, 284, 301, 306.

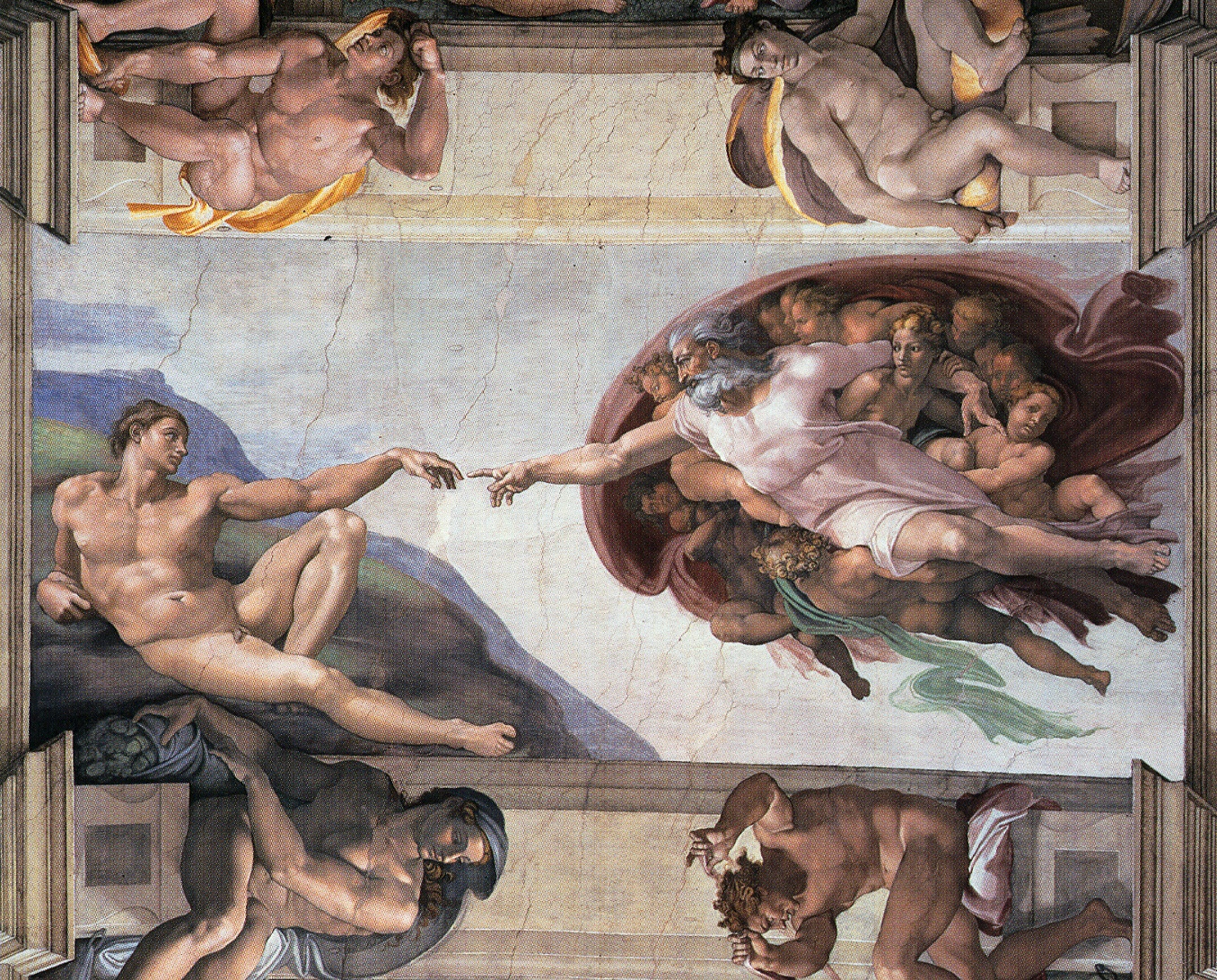

Sistine Chapel Ceiling

Shortly before I first moved to Cambridge, my girlfriend (now my wife) took me to the Sistine Chapel to show me the recently restored ceiling.

Figure: The ceiling of the Sistine Chapel.

When we got to Cambridge, we both attended Patrick Boyde’s talks on the chapel. He focussed both on the structure of the chapel ceiling, describing the impression of height it was intended to give, and on the significance and positioning of each of the panels and the meaning of the individual figures.

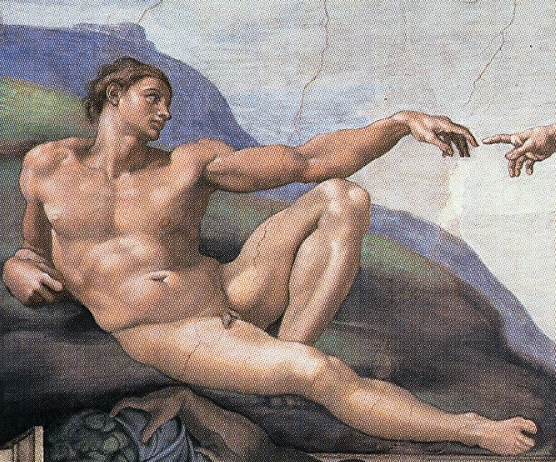

The Creation of Adam

Figure: Photo of Detail of Creation of Man from the Sistine chapel ceiling.

One of the most famous panels is central in the ceiling: the creation of man. Here, God in the guise of a pink-robed bearded man reaches out to a languid Adam.

The representation of God in this form seems typical of the time, because elsewhere in the Vatican Museums there are similar representations.

Figure: Photo detail of God.

Photo from https://commons.wikimedia.org/wiki/File:Michelangelo,_Creation_of_Adam_04.jpg.

My colleague Beth Singler has written about how often this image of creation appears when we talk about AI (Singler, 2020).

See Lawrence (2024) Michelangelo, The Creation of Adam p. 7-9, 31, 91, 105–106, 121, 153, 206, 216, 350.

The way we represent this “other intelligence” in the figure of a Zeus-like bearded mind demonstrates our tendency to embody intelligences in forms that are familiar to us.

Lunette Rehoboam Abijah

Many of Blake’s works are inspired by engravings he saw of the Sistine chapel ceiling. The pose of Newton is taken from the Lunette depiction of Abijah, one of Michelangelo’s ancestors of Christ.

Figure: Lunette containing Rehoboam and Abijah.

Elohim Creating Adam

Blake’s vision of the creation of man, known as Elohim Creating Adam, is a strong contrast to Michelangelo’s. The faces of both God and Adam show deep anguish. The image is closer to representations of Prometheus receiving his punishment for sharing his knowledge of fire than to the languid ecstasy we see in Michelangelo’s representation.

Figure: William Blake’s Elohim Creating Adam.

The caption in the Tate reads:

Elohim is a Hebrew name for God. This picture illustrates the Book of Genesis: ‘And the Lord God formed man of the dust of the ground.’ Adam is shown growing out of the earth, a piece of which Elohim holds in his left hand.

For Blake the God of the Old Testament was a false god. He believed the Fall of Man took place not in the Garden of Eden, but at the time of creation shown here, when man was dragged from the spiritual realm and made material.

From the Tate Britain

Blake’s vision is demonstrating the frustrations we experience when the (complex) real world doesn’t manifest in the way we’d hoped.

See Lawrence (2024) Blake, William Elohim Creating Adam p. 121, 217–18.

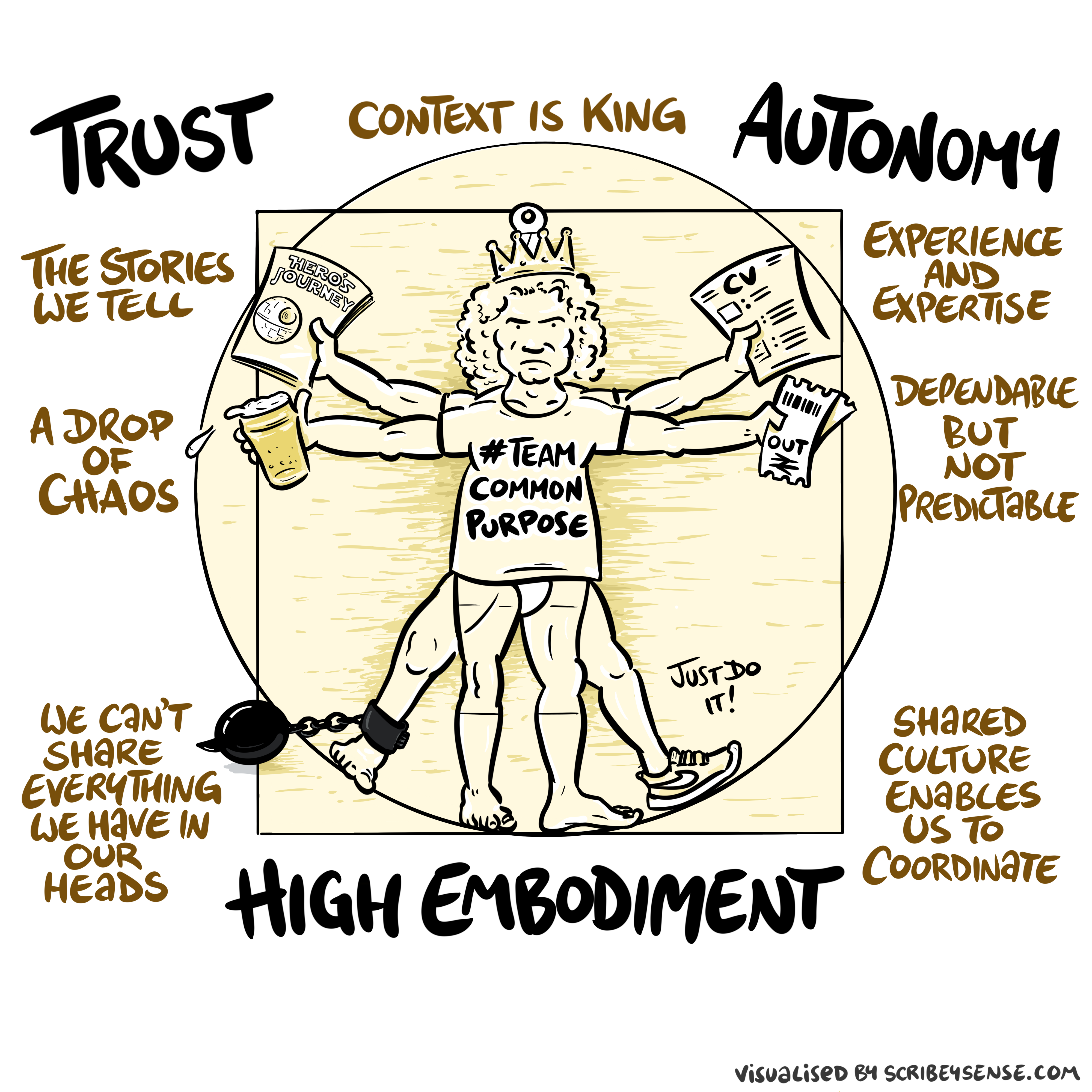

Figure: The relationships between trust, autonomy and embodiment are key to understanding how to properly deploy AI systems in a way that avoids digital autocracy. (Illustration by Dan Andrews inspired by Chapter 3 “Intent” of “The Atomic Human” Lawrence (2024))

This illustration was created by Dan Andrews after reading Chapter 3 “Intent” of “The Atomic Human” book. The chapter explores the concept of intent in AI systems and how trust, autonomy, and embodiment interact to shape our relationship with technology. Dan’s drawing captures these complex relationships and the balance needed for responsible AI deployment.

See blog post on Dan Andrews image from Chapter 3.

We communicate with each other through shared cultural reference points: stories, rituals, and artefacts. Great artworks are not just decoration: they are compressed cultural objects that can carry meaning across centuries.

The Sistine Chapel becomes a kind of public interface: a shared “model” that people can point at, interpret, dispute, and transmit. In that sense, the artwork itself is part of the communication system.

Figure: The creation of Adam and the Lunette of Abijah come together to influence Blake’s version of Newton, yet his view of creation is very different from Michelangelo’s.

What’s striking here is how influence works: a pose moves from a Michelangelo lunette into Blake’s Newton; a creation narrative is reinterpreted as anguish in Elohim Creating Adam. These are “links” in a human communication network.

Human communication is not only words passed between individuals. We also communicate through shared artefacts — images, stories, rituals, institutions — that act as a common reference frame. These artefacts stabilise meaning because they persist, they can be revisited, and they can be interpreted together.

This version of the “human culture interacting” diagram grounds the idea in a concrete chain: the Sistine Chapel as a shared cultural object; Michelangelo’s figures as a visual vocabulary; Blake’s Newton borrowing a pose from a lunette; and Blake’s Elohim Creating Adam reinterpreting the creation narrative. In other words: a human communication network made of artefacts.

Figure: Humans use culture, facts and artefacts to communicate.

A Six Word Novel

Figure: Consider the six-word novel, apocryphally credited to Ernest Hemingway, “For sale: baby shoes, never worn.” To understand what that means to a human, you need a great deal of additional context. Context that is not directly accessible to a machine that has not got both the evolved and contextual understanding of our own condition to realize both the implication of the advert and what that implication means emotionally to the previous owner.

See Lawrence (2024) baby shoes p. 368.

But this is a very different kind of intelligence than ours. A computer cannot understand the depth of Ernest Hemingway’s apocryphal six-word novel, “For sale: baby shoes, never worn,” because it isn’t equipped with the ability to model the complexity of humanity that underlies that statement.

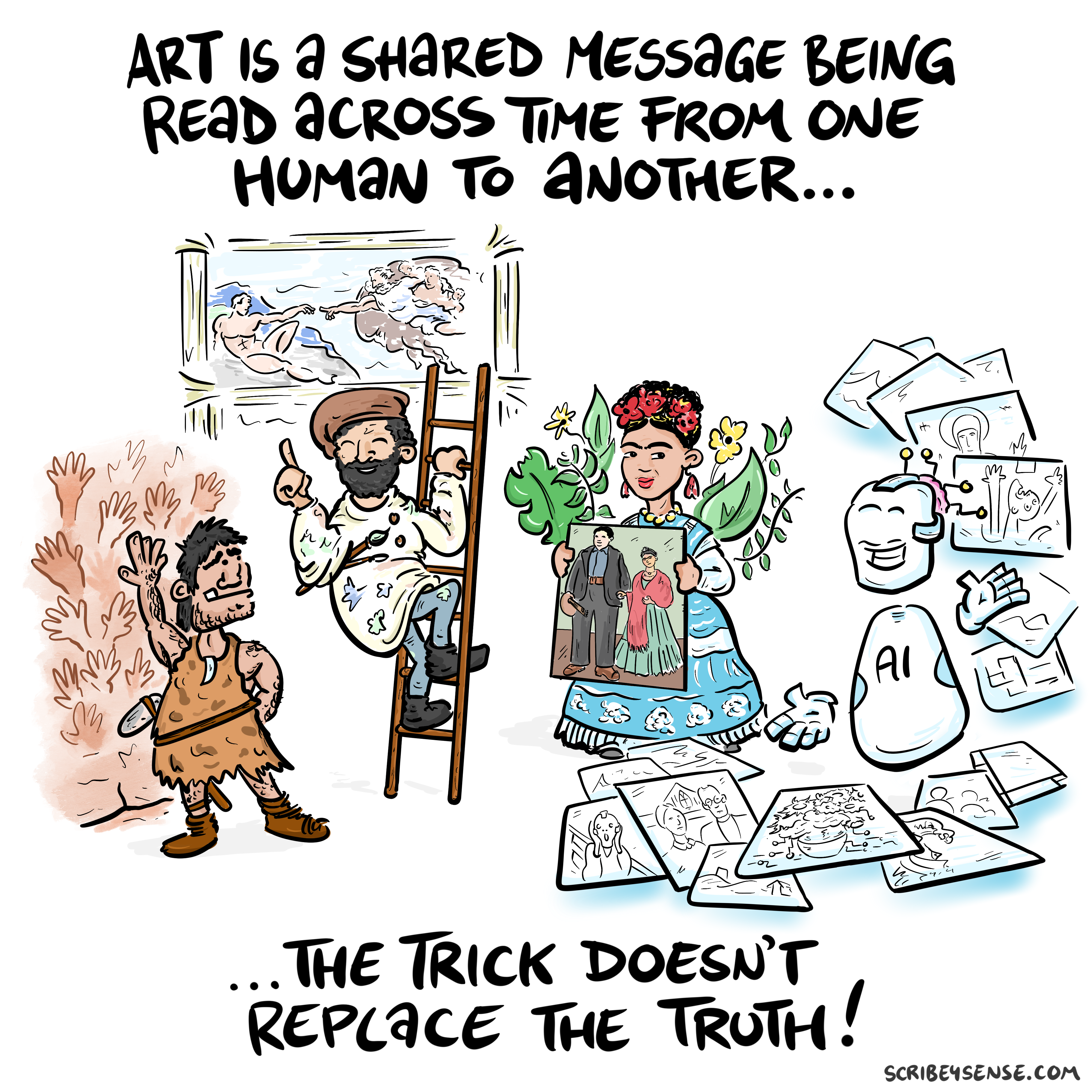

Figure: This is the drawing Dan was inspired to create for Chapter 4. It highlights how, even if these machines can generate creative works, the lack of origin in humans means it is not the same as works of art that come to us through history.

See blog post on Art is Human.

For the Working Group for the Royal Society report on Machine Learning, back in 2016, the group worked with Ipsos MORI to engage in public dialogue around the technology. Ever since, I’ve been struck by how much more sensible the conversations that emerge from public dialogue are than the breathless drivel that seems to emerge from supposedly more informed individuals.

There were a number of messages that emerged from those dialogues, and many of those messages were reinforced in two further public dialogues we organised in September.

However, there was one area we asked about in 2017 that we didn’t ask about in 2024. That was an area where the public were unequivocal that they didn’t want the research community to pursue progress. Quoting from the report (my emphasis):

Art: Participants failed to see the purpose of machine learning-written poetry. For all the other case studies, participants recognised that a machine might be able to do a better job than a human. However, they did not think this would be the case when creating art, as doing so was considered to be a fundamentally human activity that machines could only mimic at best.

Public Views of Machine Learning, April, 2017

How right they were.

Questions

These questions are deliberately practical: they surface what “security” and “creativity” mean in lived contexts. Digital twins can be extraordinary tools for preservation and exploration, but they also shift authority into models and platforms.

For security, the aim isn’t “trust AI” or “ban AI,” but creating systems where autonomy is conditional, auditable, and reversible—where responsibility remains legible.

For creativity, the point isn’t whether machines can produce an image, but how we preserve authorship, meaning, and cultural value when imitation is cheap and ubiquitous.

We should not treat AI as a creative agent. It is an infrastructure, like archives or broadcasting. We need to:

- keep humans institutionally on the hook: whenever AI is used (restoration, recommendation, translation, even creation), a named person or institution remains accountable.

- make delegation conditional: decide what can be automated, where supervision is required, and how decisions can be reversed. Preserve autonomy without banning tools.

- protect meaning, not just output: focus policy on transparency, provenance, and fair compensation rather than trying to adjudicate what is “real art.”

The aim is not to slow innovation, but to anchor it. Ensure creativity and trust remain legible to the public. Artists worry about loss of creative control. Institutions can help by ensuring attribution, consent, and economic participation.

Thanks!

For more information on these subjects and more you might want to check the following resources.

- company: Trent AI

- book: The Atomic Human

- twitter: @lawrennd

- podcast: The Talking Machines

- newspaper: Guardian Profile Page

- blog: http://inverseprobability.com